Using Dropout and Layer Normalization in Deep Learning

Papers for both:

Dropout: http://www.jmlr.org/papers/volume15/srivastava14a/srivastava14a.pdf

Implementations of both (using Nematus as the example):

https://github.com/EdinburghNLP/nematus/blob/master/nematus/layers.py
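As a reference point for the questions below, here is a minimal NumPy sketch of the two operations. It assumes inverted dropout (survivors are rescaled by 1/keep_prob during training, identity at test time) and layer normalization over the last axis with a gain `s` and bias `b`. The function names and `eps=1e-5` are illustrative choices; this is not Nematus's Theano code.

```python
import numpy as np


def dropout(x, keep_prob, rng, train=True):
    """Inverted dropout: zero each unit with probability 1 - keep_prob, rescale the rest."""
    if not train or keep_prob >= 1.0:
        return x  # identity at test time
    mask = rng.binomial(1, keep_prob, size=x.shape)
    return x * mask / keep_prob


def layer_norm(x, s, b, eps=1e-5):
    """Normalize over the last axis (per example), then apply gain s and bias b."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return s * (x - mean) / np.sqrt(var + eps) + b


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
h = layer_norm(dropout(x, 0.8, rng), s=np.ones(8), b=np.zeros(8))
print(h.shape)  # (4, 8)
```

Unlike batch normalization, the statistics here are computed per example, so the same code works unchanged at training and test time and inside a recurrence.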

Where Dropout is applied in the GRU:

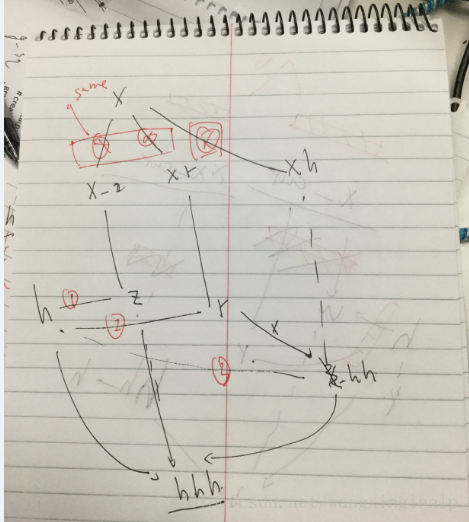
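A rough NumPy sketch of how dropout is commonly wired into a GRU step in dl4mt/Nematus-style code: one mask for the input x_t and one for the previous hidden state h_{t-1}, sampled once per sequence and reused at every time step. The parameter names (`W`, `U`, `b`, `Wx`, `Ux`, `bx`), the gate layout, and the choice to keep the un-dropped h_{t-1} in the final interpolation are assumptions for illustration; Nematus itself may, for example, apply input dropout before the projection or use separate masks for the two recurrent products.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gru_step(x, h_prev, params, x_mask, h_mask):
    """One GRU step; x_mask / h_mask are dropout masks already scaled by 1/keep_prob."""
    W, U, b, Wx, Ux, bx = (params[k] for k in ("W", "U", "b", "Wx", "Ux", "bx"))
    x_d = x * x_mask       # dropout on the input
    h_d = h_prev * h_mask  # dropout on the recurrent input (what question 4 asks about)
    dim = h_prev.shape[-1]
    gates = sigmoid(x_d @ W + b + h_d @ U)
    r, u = gates[:, :dim], gates[:, dim:]  # reset and update gates
    h_tilde = np.tanh(x_d @ Wx + bx + r * (h_d @ Ux))
    return u * h_prev + (1.0 - u) * h_tilde


rng = np.random.default_rng(0)
n_in, dim, batch, keep = 5, 3, 2, 0.8
params = {
    "W": rng.normal(size=(n_in, 2 * dim)), "U": rng.normal(size=(dim, 2 * dim)),
    "b": np.zeros(2 * dim), "Wx": rng.normal(size=(n_in, dim)),
    "Ux": rng.normal(size=(dim, dim)), "bx": np.zeros(dim),
}
# masks sampled once and shared by every time step of this sequence
x_mask = rng.binomial(1, keep, size=(batch, n_in)) / keep
h_mask = rng.binomial(1, keep, size=(batch, dim)) / keep
h = np.zeros((batch, dim))
for t in range(4):
    h = gru_step(rng.normal(size=(batch, n_in)), h, params, x_mask, h_mask)
print(h.shape)  # (2, 3)
```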

Operations in the readout layer:

Questions:

1. Why is Dropout placed before LN?

Other people use a different order:

https://stackoverflow.com/questions/39691902/ordering-of-batch-normalization-and-dropout-in-tensorflow

BatchNorm -> ReLU (or other activation) -> Dropout
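To make the two orderings concrete, a small NumPy sketch. Order A follows the recipe above with LN substituted for BatchNorm (normalize, activate, then drop); order B applies dropout first and layer-normalizes afterwards, as question 1 describes. `tanh` stands in for the activation and the LN gain/bias are fixed to 1/0; both are simplifying assumptions. One observable consequence: because LN is invariant to a constant rescaling of its input (up to `eps`), the 1/keep_prob factor of inverted dropout has essentially no effect in order B, and dropped units no longer stay at zero after normalization.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # per-row normalization; gain = 1 and bias = 0 for brevity
    mean = x.mean(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)


def order_a(x, mask, keep_prob):
    """Norm -> activation -> dropout."""
    return np.tanh(layer_norm(x)) * mask / keep_prob


def order_b(x, mask, keep_prob):
    """Dropout -> norm."""
    return layer_norm(x * mask / keep_prob)


rng = np.random.default_rng(0)
keep = 0.8
x = rng.normal(size=(3, 16))
mask = rng.binomial(1, keep, size=x.shape)

a = order_a(x, mask, keep)
b = order_b(x, mask, keep)
# In order B the LN absorbs the constant 1/keep_prob rescaling:
print(np.allclose(b, layer_norm(x * mask), atol=1e-4))  # True
```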

2. Why are state_below_ and pctx_ also layer-normalized, when no activation function is applied directly after them?

In gru_layer, state_below_ is layer-normalized (its input is the source side, src):

In gru_cond_layer, however, state_below_ is not layer-normalized (its input is the target side, trg):
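One way to read question 2: in an LN-GRU the normalized quantities are pre-activations, and the nonlinearity (sigmoid or tanh) is applied only after the input term and the recurrent term are summed inside the step. So normalizing the input projection `state_below_` happens one step before any activation, not in place of one. The sketch below shows that layout with separate LN parameters for the input and recurrent terms. The `normalize_input` flag is a hypothetical switch that mimics the gru_layer (src, LN on) vs gru_cond_layer (trg, LN off) difference noted above; it is not a Nematus option, and the parameter names are assumptions.

```python
import numpy as np


def layer_norm(x, s, b, eps=1e-5):
    mean = x.mean(axis=-1, keepdims=True)
    return s * (x - mean) / np.sqrt(x.var(axis=-1, keepdims=True) + eps) + b


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def ln_gru_step(x, h_prev, p, normalize_input=True):
    """GRU step with LN on pre-activations; nonlinearities come only after the sums."""
    dim = h_prev.shape[-1]
    # input projections (roughly state_below_ / state_belowx in dl4mt-style code)
    xw = x @ p["W"] + p["b"]
    xwx = x @ p["Wx"] + p["bx"]
    if normalize_input:  # hypothetical switch: on ~ gru_layer (src), off ~ gru_cond_layer (trg)
        xw = layer_norm(xw, *p["ln_x"])
        xwx = layer_norm(xwx, *p["ln_xx"])
    # recurrent projections get their own LN parameters
    hu = layer_norm(h_prev @ p["U"], *p["ln_h"])
    gates = sigmoid(xw + hu)  # first activation: after input and recurrent terms are summed
    r, u = gates[:, :dim], gates[:, dim:]
    hux = layer_norm(h_prev @ p["Ux"], *p["ln_hx"])
    h_tilde = np.tanh(xwx + r * hux)
    return u * h_prev + (1.0 - u) * h_tilde


rng = np.random.default_rng(0)
n_in, dim, batch = 4, 3, 2
p = {
    "W": rng.normal(size=(n_in, 2 * dim)), "b": np.zeros(2 * dim),
    "Wx": rng.normal(size=(n_in, dim)), "bx": np.zeros(dim),
    "U": rng.normal(size=(dim, 2 * dim)), "Ux": rng.normal(size=(dim, dim)),
}
for name, size in [("ln_x", 2 * dim), ("ln_xx", dim), ("ln_h", 2 * dim), ("ln_hx", dim)]:
    p[name] = (np.ones(size), np.zeros(size))  # (gain, bias)

x, h = rng.normal(size=(batch, n_in)), np.zeros((batch, dim))
h_src = ln_gru_step(x, h, p, normalize_input=True)
h_trg = ln_gru_step(x, h, p, normalize_input=False)
print(h_src.shape, h_trg.shape)  # (2, 3) (2, 3)
```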

3. Generating Dropout inside Scan does not work: https://groups.google.com/forum/#!topic/lasagne-users/3eyaV3P0Y-E

https://groups.google.com/forum/#!topic/theano-users/KAN1j7iey68
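A common workaround (stated here as an assumption, not a summary of those threads) is to sample the random masks once, outside the recurrence, and pass them into the step function as fixed inputs; in Theano that means giving them to `theano.scan` through `non_sequences`, so the step itself contains no random ops. The sketch below imitates this with a plain Python loop; `scan_like` is a hypothetical stand-in for `theano.scan`, and a tanh RNN cell stands in for the GRU.

```python
import numpy as np


def scan_like(step, xs, h0, non_sequences):
    """Tiny stand-in for theano.scan: iterate over the first axis of xs."""
    h, outputs = h0, []
    for x_t in xs:
        h = step(x_t, h, *non_sequences)
        outputs.append(h)
    return np.stack(outputs)


def step(x_t, h_prev, W, U, x_mask, h_mask):
    # deterministic: the random masks arrive as fixed inputs
    return np.tanh((x_t * x_mask) @ W + (h_prev * h_mask) @ U)


rng = np.random.default_rng(0)
T, batch, n_in, dim, keep = 5, 2, 4, 3, 0.5
xs = rng.normal(size=(T, batch, n_in))
W, U = rng.normal(size=(n_in, dim)), rng.normal(size=(dim, dim))
# sample the masks once, outside the loop, and reuse them at every step
x_mask = rng.binomial(1, keep, size=(batch, n_in)) / keep
h_mask = rng.binomial(1, keep, size=(batch, dim)) / keep
hs = scan_like(step, xs, np.zeros((batch, dim)), (W, U, x_mask, h_mask))
print(hs.shape)  # (5, 2, 3)
```

Because the step function is deterministic given its inputs, running the same loop twice with the same masks reproduces the same outputs, which is also what makes this pattern easy to differentiate through.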

4. Dropout in RNNs

The paper RECURRENT NEURAL NETWORK REGULARIZATION says that dropout should not be applied to the hidden state passed in from the previous time step (Figure 2), yet Nematus does apply it there...
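For contrast, a sketch of the scheme as I understand it from RECURRENT NEURAL NETWORK REGULARIZATION: dropout only on the non-recurrent (layer-to-layer) connections, with a fresh mask at every time step, while the h_{t-1} -> h_t path is left untouched. A tanh RNN cell stands in for the paper's LSTM to keep it short; `zaremba_forward` and its arguments are hypothetical names. The Nematus-style alternative instead multiplies h_{t-1} by one mask reused across all time steps.

```python
import numpy as np


def zaremba_forward(xs, Ws, Us, keep_prob, rng, train=True):
    """Stacked tanh RNN; dropout only on non-recurrent (vertical) connections.

    xs: (T, batch, n_in); Ws[l]: input weights of layer l; Us[l]: recurrent weights.
    """
    T, batch, _ = xs.shape
    hs = [np.zeros((batch, U.shape[0])) for U in Us]
    outputs = []
    for t in range(T):
        inp = xs[t]
        for l, (W, U) in enumerate(zip(Ws, Us)):
            if train:  # fresh mask at every time step, on the input coming from below
                inp = inp * rng.binomial(1, keep_prob, size=inp.shape) / keep_prob
            hs[l] = np.tanh(inp @ W + hs[l] @ U)  # recurrent path: no dropout
            inp = hs[l]
        if train:  # dropout on the top layer's output as well
            inp = inp * rng.binomial(1, keep_prob, size=inp.shape) / keep_prob
        outputs.append(inp)
    return np.stack(outputs)


rng = np.random.default_rng(0)
T, batch, n_in, dim = 6, 2, 4, 3
xs = rng.normal(size=(T, batch, n_in))
Ws = [rng.normal(size=(n_in, dim)), rng.normal(size=(dim, dim))]
Us = [rng.normal(size=(dim, dim)), rng.normal(size=(dim, dim))]
out = zaremba_forward(xs, Ws, Us, keep_prob=0.5, rng=rng)
print(out.shape)  # (6, 2, 3)
```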

5. Residual connections

On residual connections, https://github.com/harvardnlp/seq2seq-attn says: "res_net: Use residual connections between LSTM stacks whereby the input to the l-th LSTM layer of the hidden state of the l-1-th LSTM layer summed with hidden state of the l-2th LSTM layer. We didn't find this to really help in our experiments."
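Read literally, the quoted description feeds layer l the sum of the hidden states of layers l-1 and l-2. A minimal sketch of that wiring for a single time step, with each layer abstracted as a function and the raw input treated as h^0. The quote does not say how the lowest layers are handled, so applying the residual sum only from layer 3 onward (avoiding a size mismatch with the embedding) is an assumption, as is the name `residual_stack`.

```python
import numpy as np


def residual_stack(x, cells):
    """Apply stacked cells; layer l (1-indexed) gets h^{l-1} + h^{l-2} for l >= 3."""
    hiddens = [x]  # hiddens[0] plays the role of h^0 (the stack's input)
    for l, cell in enumerate(cells, start=1):
        inp = hiddens[l - 1]
        if l >= 3:
            inp = inp + hiddens[l - 2]  # residual: add the state from two layers below
        hiddens.append(cell(inp))
    return hiddens[1:]


rng = np.random.default_rng(0)
dim = 4
weights = [rng.normal(size=(dim, dim)) for _ in range(4)]
cells = [lambda v, W=W: np.tanh(v @ W) for W in weights]  # stand-ins for LSTM layers
hs = residual_stack(rng.normal(size=(2, dim)), cells)
print(len(hs), hs[-1].shape)  # 4 (2, 4)
```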