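Calling a trained TensorFlow model from C++ on Windows

After a long struggle I finally got this working. For a beginner like me, that is a real relief.

First, some background. Trained TensorFlow models are commonly saved in one of two formats: ckpt and pb. The ckpt (checkpoint) format is mainly used to save intermediate training state, so that an unexpected interruption does not throw away all the work done so far. The pb format is typically used to freeze a model for offline prediction: the user supplies an input and the model returns a prediction. The pb format is clearly the one we want here.

Here is a small example that walks through the whole procedure:

(1) Train the model and generate the pb file

The images used here come from a cat-vs-dog recognition dataset. First convert the images to TFRecord format.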

import os
import numpy as np
from PIL import Image
import tensorflow as tf


def get_files(file_dir):
    """Collect image paths and labels from file_dir/<class name>/ subfolders."""
    cat = []
    label_cat = []
    dog = []
    label_dog = []
    for file in os.listdir(file_dir):
        pp = os.path.join(file_dir, file)
        for pic in os.listdir(pp):
            pic_path = os.path.join(pp, pic)
            if file == "cat":
                cat.append(pic_path)   # path of the image
                label_cat.append(0)    # label 0 = cat
            else:
                dog.append(pic_path)   # path of the image
                label_dog.append(1)    # label 1 = dog
    print("There are %d cat \nThere are %d dog" % (len(cat), len(dog)))
    image_list = np.hstack((cat, dog))
    label_list = np.hstack((label_cat, label_dog))
    temp = np.array([image_list, label_list])
    # transpose depends on the shape; it has no effect on a 1-D array
    temp = temp.transpose()
    np.random.shuffle(temp)  # random order; turn this off while debugging
    image_list = list(temp[:, 0])
    label_list = list(temp[:, 1])
    label_list = [int(i) for i in label_list]
    return image_list, label_list


def image2tfrecord(image_list, label_list, str_name):
    len2 = len(image_list)
    print("len=", len2)
    writer = tf.python_io.TFRecordWriter(str_name)
    for i in range(len2):
        # read and decode the image; force RGB so the raw bytes always
        # match the [224, 224, 3] reshape used when training
        image = Image.open(image_list[i]).convert('RGB')
        image = image.resize((224, 224))
        # convert to raw bytes
        image_bytes = image.tobytes()
        # build the feature dictionary
        features = {}
        # store the image as bytes
        features['image_raw'] = tf.train.Feature(bytes_list=tf.train.BytesList(value=[image_bytes]))
        # store the label as int64
        features['label'] = tf.train.Feature(int64_list=tf.train.Int64List(value=[int(label_list[i])]))
        # combine all features
        tf_features = tf.train.Features(feature=features)
        # wrap them in an Example
        tf_example = tf.train.Example(features=tf_features)
        # serialize the example
        tf_serialized = tf_example.SerializeToString()
        # write the serialized example to the TFRecord file
        writer.write(tf_serialized)
    writer.close()


if __name__ == "__main__":
    path = "newdata"
    img_list, label_list = get_files(path)
    length = len(img_list)
    ratio = 0.8
    s = int(length * ratio)  # np.int is deprecated; the built-in int does the same
    train_img_list = img_list[:s]
    train_lab_list = label_list[:s]
    val_img_list = img_list[s:]
    val_lab_list = label_list[s:]
    image2tfrecord(train_img_list, train_lab_list, "train.tfrecords")
    image2tfrecord(val_img_list, val_lab_list, "val.tfrecords")
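As a quick sanity check on the conversion script, the sketch below computes how many images end up in each file for an 80/20 split and how many bytes one `image_raw` field should hold for a 224x224 RGB image. The helper names `split_sizes` and `raw_image_bytes` are made up for this illustration and are not part of the original script.

```python
def split_sizes(total, ratio=0.8):
    """Return (train_count, val_count) for a split like img_list[:s] / img_list[s:]."""
    s = int(total * ratio)  # same truncation as in the conversion script
    return s, total - s


def raw_image_bytes(height=224, width=224, channels=3):
    """Bytes in one uint8 image of this shape, i.e. len(image.tobytes()) for RGB."""
    return height * width * channels


if __name__ == "__main__":
    train_n, val_n = split_sizes(1000)  # 1000 is an arbitrary example count
    print("train:", train_n, "val:", val_n)
    print("bytes per image:", raw_image_bytes())
```

If a record's byte length differs from this value, the `tf.reshape(image, [H, W, Channels])` step in training will fail, which usually means an image was not RGB or was not resized.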

You will then find two new files, train.tfrecords and val.tfrecords. These are your training set and validation set; the labels are stored inside them as well. Then training begins:

import numpy as np
import math
import tensorflow as tf
from tensorflow.python.framework import graph_util

tra_data_dir = 'train.tfrecords'
val_data_dir = 'val.tfrecords'

max_learning_rate = 0.0002  # 0.0002
min_learning_rate = 0.0001
decay_speed = 2000.0

lr = tf.placeholder(tf.float32)
learning_rate = lr
W = 224
H = 224
Channels = 3
n_classes = 2


def read_and_decode2stand(tfrecords_file, batch_size):
    '''read and decode tfrecord file, generate (image, label) batches
    Args:
        tfrecords_file: the path of the tfrecord file
        batch_size: number of images in each batch
    Returns:
        image_batch: 4D tensor - [batch_size, height, width, channel]
        label_batch: 2D tensor - [batch_size, n_classes]
    '''
    # make an input queue from the tfrecord file
    filename_queue = tf.train.string_input_producer([tfrecords_file])
    reader = tf.TFRecordReader()
    _, serialized_example = reader.read(filename_queue)
    img_features = tf.parse_single_example(
        serialized_example,
        features={
            'label': tf.FixedLenFeature([], tf.int64),
            'image_raw': tf.FixedLenFeature([], tf.string),
        })
    image = tf.decode_raw(img_features['image_raw'], tf.uint8)
    image = tf.reshape(image, [H, W, Channels])
    image = tf.cast(image, tf.float32) * (1.0 / 255)
    image = tf.image.per_image_standardization(image)  # standardization
    # the images here are 224*224*3; change H, W and Channels for other data
    label = tf.cast(img_features['label'], tf.int32)
    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,
                                              capacity=2000)
    # change to one-hot
    label_batch = tf.one_hot(label_batch, depth=n_classes)
    label_batch = tf.cast(label_batch, dtype=tf.int32)
    label_batch = tf.reshape(label_batch, [batch_size, n_classes])
    print(label_batch)
    return image_batch, label_batch


def my_batch_norm(inputs):
    scale = tf.Variable(tf.ones([inputs.get_shape()[-1]]), dtype=tf.float32)
    beta = tf.Variable(tf.zeros([inputs.get_shape()[-1]]), dtype=tf.float32)
    # the moments of the current batch are used directly; the original also
    # created two variables here that were overwritten right away
    batch_mean, batch_var = tf.nn.moments(inputs, [0, 1, 2])
    return inputs, batch_mean, batch_var, beta, scale


def build_network(height, width, channel):
    # the name "input" matters: it is used to look the tensor up later
    x = tf.placeholder(tf.float32, shape=[None, height, width, channel], name="input")
    y = tf.placeholder(tf.int32, shape=[None, n_classes], name="labels_placeholder")

    def weight_variable(shape, name="weights"):
        initial = tf.truncated_normal(shape, stddev=0.1)
        return tf.Variable(initial, name=name)

    def bias_variable(shape, name="biases"):
        initial = tf.constant(0.1, shape=shape)
        return tf.Variable(initial, name=name)

    def conv2d(input, w):
        return tf.nn.conv2d(input, w, [1, 1, 1, 1], padding='SAME')

    def pool_max(input):
        return tf.nn.max_pool(input,
                              ksize=[1, 3, 3, 1],
                              strides=[1, 2, 2, 1],
                              padding='SAME',
                              name='pool1')

    def fc(input, w, b):
        return tf.matmul(input, w) + b

    # conv1
    with tf.name_scope('conv1_1') as scope:
        kernel = weight_variable([3, 3, Channels, 64])
        biases = bias_variable([64])
        conv1_1 = tf.nn.bias_add(conv2d(x, kernel), biases)
        inputs, pop_mean, pop_var, beta, scale = my_batch_norm(conv1_1)
        conv_batch_norm = tf.nn.batch_normalization(inputs, pop_mean, pop_var, beta, scale, 0.001)
        output_conv1_1 = tf.nn.relu(conv_batch_norm, name=scope)

    with tf.name_scope('conv1_2') as scope:
        kernel = weight_variable([3, 3, 64, 64])
        biases = bias_variable([64])
        conv1_2 = tf.nn.bias_add(conv2d(output_conv1_1, kernel), biases)
        inputs, pop_mean, pop_var, beta, scale = my_batch_norm(conv1_2)
        conv_batch_norm = tf.nn.batch_normalization(inputs, pop_mean, pop_var, beta, scale, 0.001)
        output_conv1_2 = tf.nn.relu(conv_batch_norm, name=scope)

    pool1 = pool_max(output_conv1_2)

    # conv2
    with tf.name_scope('conv2_1') as scope:
        kernel = weight_variable([3, 3, 64, 128])
        biases = bias_variable([128])
        conv2_1 = tf.nn.bias_add(conv2d(pool1, kernel), biases)
        inputs, pop_mean, pop_var, beta, scale = my_batch_norm(conv2_1)
        conv_batch_norm = tf.nn.batch_normalization(inputs, pop_mean, pop_var, beta, scale, 0.001)
        output_conv2_1 = tf.nn.relu(conv_batch_norm, name=scope)

    with tf.name_scope('conv2_2') as scope:
        kernel = weight_variable([3, 3, 128, 128])
        biases = bias_variable([128])
        conv2_2 = tf.nn.bias_add(conv2d(output_conv2_1, kernel), biases)
        inputs, pop_mean, pop_var, beta, scale = my_batch_norm(conv2_2)
        conv_batch_norm = tf.nn.batch_normalization(inputs, pop_mean, pop_var, beta, scale, 0.001)
        output_conv2_2 = tf.nn.relu(conv_batch_norm, name=scope)

    pool2 = pool_max(output_conv2_2)

    # conv3
    with tf.name_scope('conv3_1') as scope:
        kernel = weight_variable([3, 3, 128, 256])
        biases = bias_variable([256])
        conv3_1 = tf.nn.bias_add(conv2d(pool2, kernel), biases)
        inputs, pop_mean, pop_var, beta, scale = my_batch_norm(conv3_1)
        conv_batch_norm = tf.nn.batch_normalization(inputs, pop_mean, pop_var, beta, scale, 0.001)
        output_conv3_1 = tf.nn.relu(conv_batch_norm, name=scope)

    with tf.name_scope('conv3_2') as scope:
        kernel = weight_variable([3, 3, 256, 256])
        biases = bias_variable([256])
        conv3_2 = tf.nn.bias_add(conv2d(output_conv3_1, kernel), biases)
        inputs, pop_mean, pop_var, beta, scale = my_batch_norm(conv3_2)
        conv_batch_norm = tf.nn.batch_normalization(inputs, pop_mean, pop_var, beta, scale, 0.001)
        output_conv3_2 = tf.nn.relu(conv_batch_norm, name=scope)

    # with tf.name_scope('conv3_3') as scope:
    #     kernel = weight_variable([3, 3, 256, 256])
    #     biases = bias_variable([256])
    #     output_conv3_3 = tf.nn.relu(conv2d(output_conv3_2, kernel) + biases, name=scope)

    pool3 = pool_max(output_conv3_2)

    # The remaining VGG16 blocks are disabled in this version:
    #
    # # conv4
    # with tf.name_scope('conv4_1') as scope:
    #     kernel = weight_variable([3, 3, 256, 512])
    #     biases = bias_variable([512])
    #     output_conv4_1 = tf.nn.relu(conv2d(pool3, kernel) + biases, name=scope)
    #
    # with tf.name_scope('conv4_2') as scope:
    #     kernel = weight_variable([3, 3, 512, 512])
    #     biases = bias_variable([512])
    #     output_conv4_2 = tf.nn.relu(conv2d(output_conv4_1, kernel) + biases, name=scope)
    #
    # with tf.name_scope('conv4_3') as scope:
    #     kernel = weight_variable([3, 3, 512, 512])
    #     biases = bias_variable([512])
    #     output_conv4_3 = tf.nn.relu(conv2d(output_conv4_2, kernel) + biases, name=scope)
    #
    # pool4 = pool_max(output_conv4_3)
    #
    # # conv5
    # with tf.name_scope('conv5_1') as scope:
    #     kernel = weight_variable([3, 3, 512, 512])
    #     biases = bias_variable([512])
    #     output_conv5_1 = tf.nn.relu(conv2d(pool4, kernel) + biases, name=scope)
    #
    # with tf.name_scope('conv5_2') as scope:
    #     kernel = weight_variable([3, 3, 512, 512])
    #     biases = bias_variable([512])
    #     output_conv5_2 = tf.nn.relu(conv2d(output_conv5_1, kernel) + biases, name=scope)
    #
    # with tf.name_scope('conv5_3') as scope:
    #     kernel = weight_variable([3, 3, 512, 512])
    #     biases = bias_variable([512])
    #     output_conv5_3 = tf.nn.relu(conv2d(output_conv5_2, kernel) + biases, name=scope)
    #
    # pool5 = pool_max(output_conv5_3)

    # fc6
    with tf.name_scope('fc6') as scope:
        shape = int(np.prod(pool3.get_shape()[1:]))
        kernel = weight_variable([shape, 120])
        # kernel = weight_variable([shape, 4096])
        # biases = bias_variable([4096])
        biases = bias_variable([120])
        pool5_flat = tf.reshape(pool3, [-1, shape])
        output_fc6 = tf.nn.relu(fc(pool5_flat, kernel, biases), name=scope)

    # fc7
    with tf.name_scope('fc7') as scope:
        # kernel = weight_variable([4096, 4096])
        # biases = bias_variable([4096])
        kernel = weight_variable([120, 100])
        biases = bias_variable([100])
        output_fc7 = tf.nn.relu(fc(output_fc6, kernel, biases), name=scope)

    # fc8
    with tf.name_scope('fc8') as scope:
        # kernel = weight_variable([4096, n_classes])
        kernel = weight_variable([100, n_classes])
        biases = bias_variable([n_classes])
        output_fc8 = tf.nn.relu(fc(output_fc7, kernel, biases), name=scope)

    # the name "softmax" matters: it is used to look the tensor up later
    finaloutput = tf.nn.softmax(output_fc8, name="softmax")

    # the loss applies softmax itself, so it gets the pre-softmax activations;
    # the original passed finaloutput here, which applied softmax twice
    cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=output_fc8, labels=y)) * 1000
    optimize = tf.train.AdamOptimizer(lr).minimize(cost)

    # the name "output" matters: it is the node the graph is frozen from
    prediction_labels = tf.argmax(finaloutput, axis=1, name="output")
    read_labels = tf.argmax(y, axis=1)

    correct_prediction = tf.equal(prediction_labels, read_labels)
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    correct_times_in_batch = tf.reduce_sum(tf.cast(correct_prediction, tf.int32))

    return dict(
        x=x,
        y=y,
        lr=lr,
        optimize=optimize,
        correct_prediction=correct_prediction,
        correct_times_in_batch=correct_times_in_batch,
        cost=cost,
        accuracy=accuracy,
    )


def train_network(graph, batch_size, num_epochs, pb_file_path):
    tra_image_batch, tra_label_batch = read_and_decode2stand(tfrecords_file=tra_data_dir,
                                                             batch_size=batch_size)
    val_image_batch, val_label_batch = read_and_decode2stand(tfrecords_file=val_data_dir,
                                                             batch_size=batch_size)
    init = tf.global_variables_initializer()
    with tf.Session() as sess:
        sess.run(init)
        coord = tf.train.Coordinator()
        threads = tf.train.start_queue_runners(sess=sess, coord=coord)
        epoch_delta = 20
        try:
            # note: each iteration processes one batch, not a full pass over the data
            for epoch_index in range(num_epochs):
                learning_rate = min_learning_rate + (max_learning_rate - min_learning_rate) * math.exp(-epoch_index / decay_speed)
                tra_images, tra_labels = sess.run([tra_image_batch, tra_label_batch])
                accuracy, mean_cost_in_batch, return_correct_times_in_batch, _ = sess.run(
                    [graph['accuracy'], graph['cost'], graph['correct_times_in_batch'], graph['optimize']],
                    feed_dict={
                        graph['x']: tra_images,
                        graph['lr']: learning_rate,
                        graph['y']: tra_labels
                    })
                if epoch_index % epoch_delta == 0:
                    # report accuracy and cost on the training batch
                    print("index[%s]".center(50, '-') % epoch_index)
                    print("Train: cost_in_batch:{},correct_in_batch:{},accuracy:{}".format(
                        mean_cost_in_batch, return_correct_times_in_batch, accuracy))

                    # compute accuracy and cost on a validation batch
                    val_images, val_labels = sess.run([val_image_batch, val_label_batch])
                    mean_cost_in_batch, return_correct_times_in_batch = sess.run(
                        [graph['cost'], graph['correct_times_in_batch']],
                        feed_dict={
                            graph['x']: val_images,
                            graph['y']: val_labels
                        })
                    print("***Val: cost_in_batch:{},correct_in_batch:{},accuracy:{}".format(
                        mean_cost_in_batch, return_correct_times_in_batch,
                        return_correct_times_in_batch / batch_size))

                if epoch_index % 50 == 0:
                    # freeze the variables into constants and write the pb file
                    constant_graph = graph_util.convert_variables_to_constants(sess, sess.graph_def, ["output"])
                    with tf.gfile.FastGFile(pb_file_path, mode='wb') as f:
                        f.write(constant_graph.SerializeToString())
        except tf.errors.OutOfRangeError:
            print('Done training -- epoch limit reached')
        finally:
            coord.request_stop()
            coord.join(threads)
            sess.close()


if __name__ == "__main__":
    batch_size = 30
    num_epochs = 1000
    pb_file_path = "catdog.pb"
    g = build_network(height=H, width=W, channel=3)
    train_network(g, batch_size, num_epochs, pb_file_path)
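The training loop computes its learning rate with an exponential decay from `max_learning_rate` toward `min_learning_rate`. The sketch below reproduces that formula on its own, with the same constants as the script, so you can see what rate a given step gets. The function name `learning_rate_at` is made up for this illustration.

```python
import math

MAX_LR = 0.0002       # max_learning_rate in the training script
MIN_LR = 0.0001       # min_learning_rate in the training script
DECAY_SPEED = 2000.0  # decay_speed in the training script


def learning_rate_at(step):
    """Learning rate fed to the optimizer at a given loop index."""
    return MIN_LR + (MAX_LR - MIN_LR) * math.exp(-step / DECAY_SPEED)


if __name__ == "__main__":
    for step in (0, 500, 999, 2000, 10000):
        print(step, learning_rate_at(step))
```

With `num_epochs = 1000` the loop stops at index 999, so the rate only falls about 40% of the way from the maximum to the minimum. A smaller `decay_speed` or a longer run would be needed to get close to `min_learning_rate`.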

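The network here is based on VGG16, trimmed down to six convolution layers and three fully connected layers. You can adjust the number of layers yourself, and many versions of this code can be found online. The model parameters and the image size can also be changed to suit your needs. The one thing you must remember is to give the input and output tensors explicit names during training!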
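If you change the image size or the number of pooling layers, the input size of the first fully connected layer changes with it. The sketch below estimates that size for `padding='SAME'` pooling with stride 2, assuming three pooling layers and 256 channels in the last convolution as in the script above. The helper names are made up for this illustration.

```python
import math


def same_pool_output(size, stride=2):
    """Spatial size after one pooling layer with padding='SAME'."""
    return math.ceil(size / stride)


def flattened_size(height, width, last_channels=256, num_pools=3):
    """Number of values fed into the first fully connected layer."""
    for _ in range(num_pools):
        height = same_pool_output(height)
        width = same_pool_output(width)
    return height * width * last_channels


if __name__ == "__main__":
    # 224 -> 112 -> 56 -> 28, then 28 * 28 * 256 values
    print(flattened_size(224, 224))
```

For the 224x224 input this gives 200704, so the fc6 weight matrix of shape [200704, 120] holds most of the network's parameters.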

Then comes a long wait. When training finishes, you will find that a pb file has been generated. Next we can test how well the model performs:

import matplotlib.pyplot as plt
import tensorflow as tf
import numpy as np
import PIL.Image as Image
from skimage import transform

W = 224
H = 224


def recognize(jpg_path):
    with tf.Graph().as_default():
        output_graph_def = tf.GraphDef()
        pb_file_path = "catdog.pb"
        with open(pb_file_path, "rb") as f:
            output_graph_def.ParseFromString(f.read())  # rb
            _ = tf.import_graph_def(output_graph_def, name="")

        with tf.Session() as sess:
            tf.global_variables_initializer().run()

            # this is why the tensors were given names during training
            input_x = sess.graph.get_tensor_by_name("input:0")
            print(input_x)
            out_softmax = sess.graph.get_tensor_by_name("softmax:0")
            print(out_softmax)
            out_label = sess.graph.get_tensor_by_name("output:0")
            print(out_label)

            # the original used convert('L') (grayscale); the model was trained
            # on RGB images, so RGB is used here
            img = np.array(Image.open(jpg_path).convert('RGB'))
            img = transform.resize(img, (H, W, 3))

            plt.figure("fig1")
            plt.imshow(img)
            # scaling kept from the original; note that training additionally
            # applied per_image_standardization in the input pipeline
            img = img * (1.0 / 255)
            img_out_softmax = sess.run(out_softmax, feed_dict={input_x: np.reshape(img, [-1, H, W, 3])})

            print("img_out_softmax:", img_out_softmax)
            prediction_labels = np.argmax(img_out_softmax, axis=1)
            print("prediction_labels:", prediction_labels)

            plt.show()


recognize("C:\\Users\\Administrator\\Desktop\\處理效果圖\\11.jpg")  # change to your own image path
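One thing to keep in mind when testing: the training pipeline scales pixels to [0, 1] and then calls `tf.image.per_image_standardization`, but that step runs in the input queue and is not part of the frozen graph. If predictions look off, applying the same standardization before feeding the image is worth trying. Below is a pure-Python sketch of the formula TensorFlow documents for that op, `(x - mean) / max(stddev, 1/sqrt(N))`. The function name is made up for this illustration, and with real images you would more likely write the same thing with NumPy arrays.

```python
import math


def per_image_standardize(pixels):
    """Standardize one image given as a flat sequence of floats.

    Follows the documented formula of tf.image.per_image_standardization:
    subtract the mean, then divide by max(stddev, 1 / sqrt(N)).
    """
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    adjusted_std = max(math.sqrt(variance), 1.0 / math.sqrt(n))
    return [(p - mean) / adjusted_std for p in pixels]


if __name__ == "__main__":
    # a tiny 4-value "image" already scaled to [0, 1]
    print(per_image_standardize([0.0, 0.5, 1.0, 0.5]))
```

The lower bound on the divisor is what keeps a uniform image from causing a division by zero.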

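(2) Calling the model

The predictions look decent, so now for today's main topic: how do we call this model from C++ on Windows? This is where the magic happens, so don't blink.

First, for the record: my machine runs Win10, my Visual Studio is the 2010 version, and my Python 3 was installed through Anaconda.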

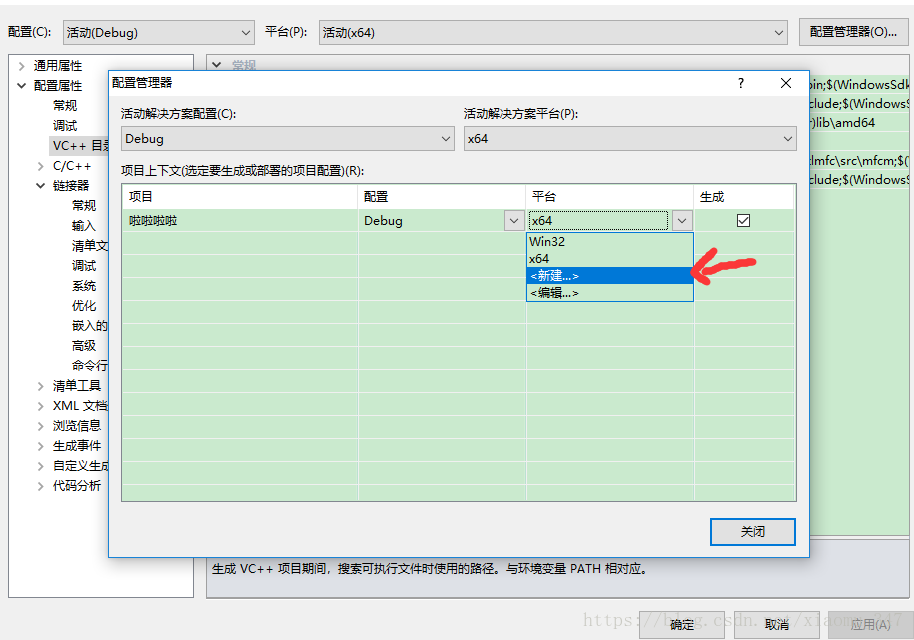

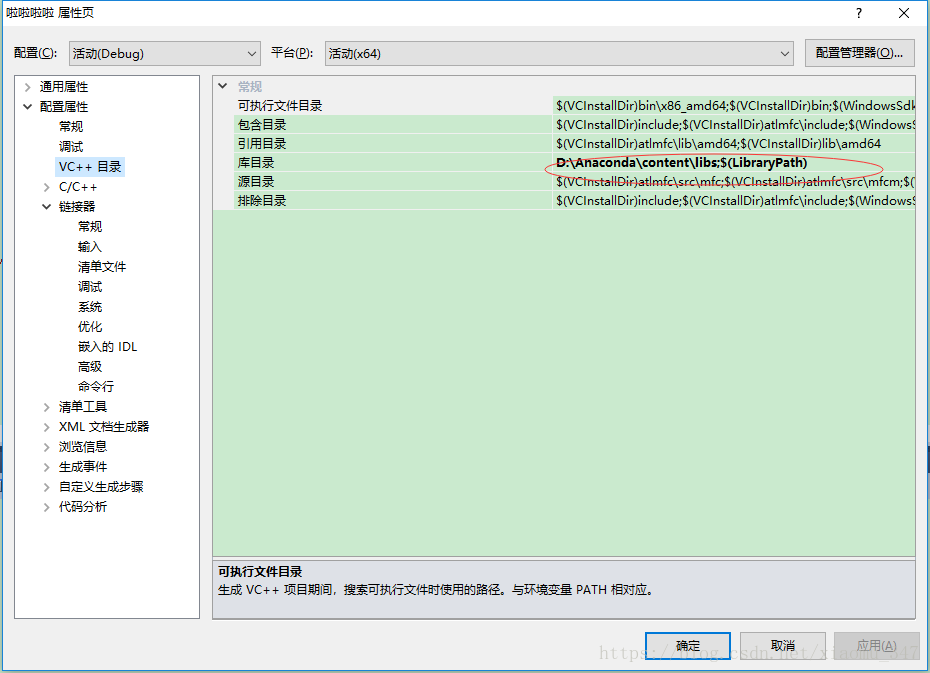

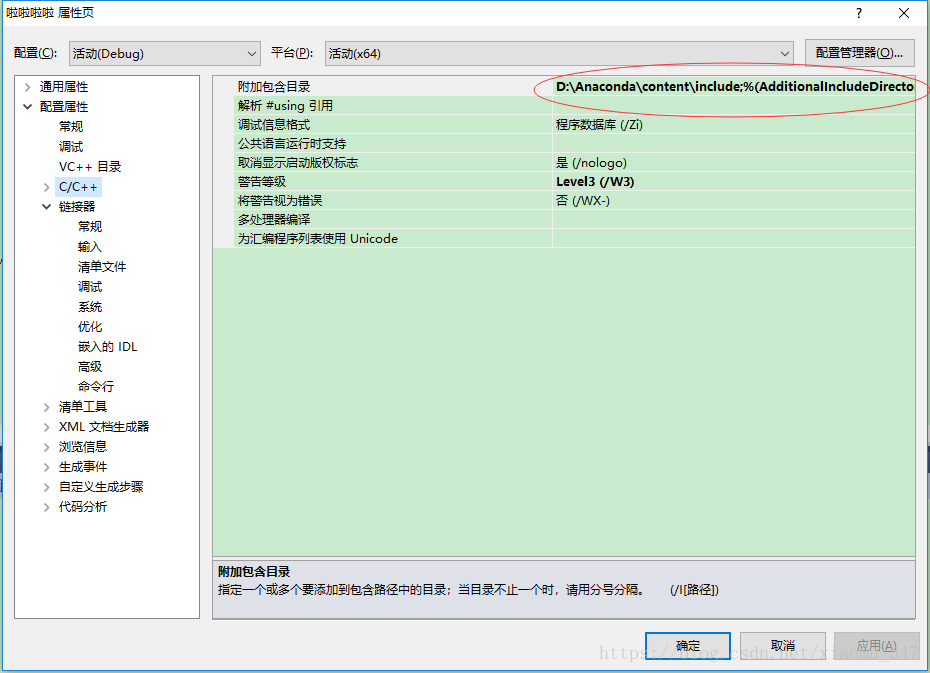

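The first step, of course, is to create a new console application or MFC application in VS. Then import the Python library. This step is important and has to match the environment you trained in. I trained the model on 64-bit Windows with a 64-bit TensorFlow, so I need to switch the VS platform to x64 and then configure the project to load the Python library installed on my machine, as shown in the figures:

If the x64 platform is not listed, choose New. Then add the library directory,

and the header (include) directory: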

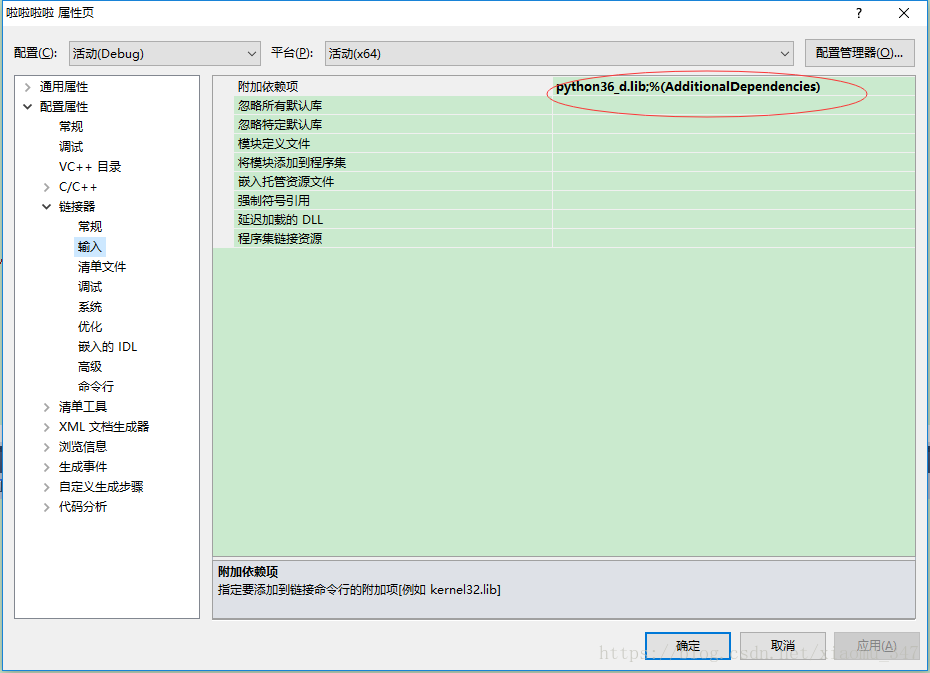

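And also:

If you open the libs folder under your Python installation path, you will find that there is no python36_d.lib file at all, although a Debug build links against it. All you need to do is make a copy of python36.lib and rename the copy to python36_d.lib.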
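To find the values for the include and library settings, and to automate the python36_d.lib copy, a small script along these lines may help. Run it with the same Python that has TensorFlow installed. The helper names are made up for this illustration, and the script assumes the usual Windows layout in which the import libraries sit in a libs folder under the Python installation.

```python
import os
import shutil
import struct
import sys
import sysconfig


def interpreter_bits():
    """32 or 64, depending on the running interpreter; VS must target the same."""
    return struct.calcsize("P") * 8


def debug_lib_name(lib_name):
    """Map e.g. python36.lib to python36_d.lib."""
    stem, ext = os.path.splitext(lib_name)
    return stem + "_d" + ext


def make_debug_lib_copy(libs_dir, lib_name):
    """Copy lib_name to its _d name if the source exists and the copy does not."""
    src = os.path.join(libs_dir, lib_name)
    dst = os.path.join(libs_dir, debug_lib_name(lib_name))
    if os.path.exists(src) and not os.path.exists(dst):
        shutil.copyfile(src, dst)
    return dst


if __name__ == "__main__":
    print("interpreter bits:", interpreter_bits())
    print("include directory:", sysconfig.get_paths()["include"])
    libs_dir = os.path.join(sys.base_prefix, "libs")  # assumed location
    print("library directory:", libs_dir)
    lib_name = "python%d%d.lib" % sys.version_info[:2]
    print("release lib:", lib_name, "debug lib:", debug_lib_name(lib_name))
    # uncomment to actually create the copy:
    # make_debug_lib_copy(libs_dir, lib_name)
```

If the script reports 64 bits, the VS project has to be built for x64, which matches the platform switch described above.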

Once the environment is configured, you need two things: a cpp file and the py file it calls. The cpp file looks like this:

#include <iostream>
#include <cstdlib>
#include <Python.h>
#include <windows.h>
using namespace std;

void testImage(const char* path)
{
    try {
        Py_Initialize();
        PyEval_InitThreads();
        PyObject* pFunc = NULL;
        PyObject* pArg = NULL;
        PyObject* module = NULL;
        module = PyImport_ImportModule("catmodel"); // catmodel: name of the Python file, without .py
        if (!module) {
            PyErr_Print(); // show why the import failed
            printf("cannot open module!");
            Py_Finalize();
            return;
        }
        pFunc = PyObject_GetAttrString(module, "test_one_image"); // test_one_image: function name in the Python file
        if (!pFunc) {
            printf("cannot open FUNC!");
            Py_Finalize();
            return;
        }
        // call the model
        pArg = Py_BuildValue("(s)", path);
        if (module != NULL) {
            PyGILState_STATE gstate;
            gstate = PyGILState_Ensure();
            PyEval_CallObject(pFunc, pArg);
            PyGILState_Release(gstate);
        }
    }
    catch (exception& e)
    {
        cout << "Standard exception: " << e.what() << endl;
    }
}

int main()
{
    // a string literal must be bound to const char*, not char*
    const char* path = "D:\\pycharm\\My-TensorFlow-tutorials-master\\01 cats vs dogs\\data\\train\\cat.1.jpg";
    testImage(path);
    system("pause");
    return 0;
}

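The py file is as follows. Note that its file name must match the module name used in the cpp file, so here it is catmodel.py: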

import matplotlib.pyplot as plt
import tensorflow as tf
import numpy as np
import PIL.Image as Image
from skimage import io, transform


def test_one_image(jpg_path):
    print("entering the model")
    with tf.Graph().as_default():
        output_graph_def = tf.GraphDef()
        pb_file_path = "D:\\vs2010\\Project\\呼叫模型\\x64\\Release\\catdog.pb"
        with open(pb_file_path, "rb") as f:
            output_graph_def.ParseFromString(f.read())  # rb
            _ = tf.import_graph_def(output_graph_def, name="")
        print("2222")
        with tf.Session() as sess:
            tf.global_variables_initializer().run()

            input_x = sess.graph.get_tensor_by_name("input:0")
            print(input_x)
            out_softmax = sess.graph.get_tensor_by_name("softmax:0")
            print(out_softmax)
            out_label = sess.graph.get_tensor_by_name("output:0")
            print(out_label)
            print("start reading the image")
            img = io.imread(jpg_path)
            img = transform.resize(img, (224, 224, 3))
            img_out_softmax = sess.run(out_softmax, feed_dict={input_x: np.reshape(img, [-1, 224, 224, 3])})
            print("234234")
            print("img_out_softmax:", img_out_softmax)
            prediction_labels = np.argmax(img_out_softmax, axis=1)
            print("prediction_labels:", prediction_labels)

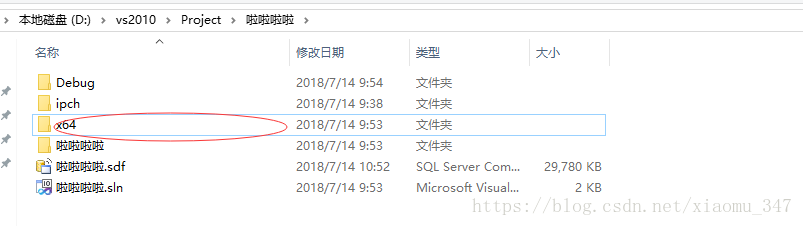

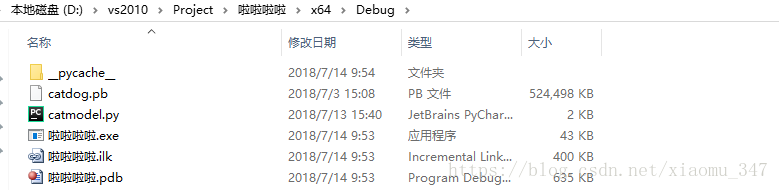

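Put the py file into the x64 folder of your new C++ project.

If that folder does not exist yet, run the project once in VS, whether or not it reports errors, and the folder will appear. Whether the file goes under Debug or Release depends on the configuration you chose above. For convenience you can also copy the pb file there. The py file already hard-codes the pb path, so the two must stay consistent.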

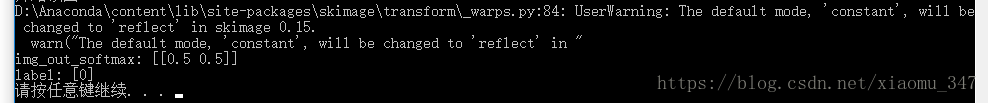

Now for the moment of truth: run the cpp file in VS. If you see the following result, the call succeeded!

That's all for today!