Advanced Deep Learning (6): Debugging a CNN (Convolutional Neural Network), a Summary of My Errors

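A summary of what I learned today.

(Note: my mood right now is completely different from what it was three minutes ago.)

(Yesterday I was stuck on a set of errors. Today I regained my confidence and reconfigured the GPU environment, which failed badly. It is fine now, though: while thinking back over what I had done today, it occurred to me that I might be calling the entry point the wrong way. Luckily I guessed right, haha, and my mood is good again.)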

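My biggest takeaway: when the code will not run, try reading the code you are learning from first.

At the very least, start from the entry-point call and read it closely, clearly, and accurately. Do not manufacture an error that almost nobody else on the internet ever runs into; worse still, it will be an error you cannot understand yourself.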

Sigh, what a crushing day.

Here is why yesterday failed:

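1. Encoding problem

Use TextEncoding.exe to change the encoding of the files in E:\Python\CRDA\cuda8\include to Unicode.

2. Some error about macro definitions came up. I have forgotten how I solved it; I may be reinstalling everything for a classmate tomorrow and will fill this in then.

3. CUDA 9 and CUDA 8 conflicted in VS2015, which was using CUDA 9. I uninstalled both CUDA 9 and CUDA 8, then reinstalled CUDA 8.

4. Build and run the cudaruntime program with VS2015 so that it generates some supposedly necessary files. I did not really understand this step either, but many errors went away afterwards. It is best to do a Debug build for both 64-bit and 32-bit.

5. When building the cudaruntime program, you may see FIB-like files that cannot be opened or found; answers for these can be found on Baidu. A suggestion: do not fixate on messages saying that some nvxxxx.dll file cannot be found or loaded and then go to a DOS window and run regsvr32 C:\Windows\SysWOW64\nvcuda.dll and the like. That is a very foolish thing to do.

You will get a message that the module was loaded but the entry point DllRegisterServer was not found.

You can search Baidu and Google for this as much as you like, and it will only break you. Unless you know DLLs or C very well, you will burn all your time here. Anything that is not nvxxx.dll can be ignored.

6. Other assorted code problems can be solved with a quick Baidu search.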

On downsample versus pool_2d: the older downsample interface was replaced by pool_2d in the newer Theano that my Python 3 setup uses.

pooled_out = pool_2d(input=conv_out, ws=self.poolsize, ignore_border=True)

Note that the parameter here is ws, not ds. With the old form,

pooled_out = pool_2d(input=conv_out, ds=self.poolsize, ignore_border=True)

you get this warning:

UserWarning: DEPRECATION: the 'ds' parameter is not going to exist anymore as it is going to be replaced by the parameter 'ws'.

Be careful with how conv2d is imported as well; sometimes it needs to be:

from theano.tensor.nnet import conv2d

conv_out = conv2d(
    input=self.inpt, filters=self.w, filter_shape=self.filter_shape,
    input_shape=self.image_shape)

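7. Another serious error concerned the cuDNN version.

With cudnn-8.0-windows7-x64-v7 I got an error involving a name like XXX_DV4, similar to cudnnGetPoolingNdDescriptor_v4, saying that it could not be found. The fix was to replace cudnn-8.0-windows7-x64-v7 with cudnn-8.0-windows7-x64-v5.1, which cleared both the warnings and the errors.

I cannot recall the other errors right now; there were simply too many.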
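For the ws/ds rename described in item 6, one possible way to keep the same call working across Theano versions is to inspect the pooling function's signature and choose the keyword at run time. This is only a sketch: pool_kwargs is a hypothetical helper, not part of Theano or network3.py, and the two stand-in functions below exist only so the example runs without Theano installed.

```python
import inspect


def pool_kwargs(pool_fn, poolsize):
    """Build keyword arguments for a pool_2d-style function.

    Uses 'ws' when the function accepts it, otherwise falls back to the
    older 'ds' name. (Hypothetical helper, written for illustration.)
    """
    params = inspect.signature(pool_fn).parameters
    size_key = "ws" if "ws" in params else "ds"
    return {size_key: poolsize, "ignore_border": True}


# Stand-ins that only mimic the two signature styles (assumptions, not Theano code).
def new_style_pool(input, ws=None, ignore_border=None, ds=None):
    return ("new", ws)


def old_style_pool(input, ds, ignore_border=None):
    return ("old", ds)


print(pool_kwargs(new_style_pool, (2, 2)))  # {'ws': (2, 2), 'ignore_border': True}
print(pool_kwargs(old_style_pool, (2, 2)))  # {'ds': (2, 2), 'ignore_border': True}
```

With Theano installed, the call in the layer would then look something like pool_2d(input=conv_out, **pool_kwargs(pool_2d, self.poolsize)).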

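Finally, today's biggest and dumbest mistake.

At the very least, start from the entry-point call and read it closely, clearly, and accurately. Do not manufacture an error that almost nobody else on the internet ever runs into; worse still, it will be an error you cannot understand yourself.

The error it caused: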

Trying to run under a GPU. If this is not desired, then modify network3.py

to set the GPU flag to False.

(<CudaNdarrayType(float32, matrix)>, Elemwise{Cast{int32}}.0)

cost: Elemwise{add,no_inplace}.0

grads: [Elemwise{add,no_inplace}.0, GpuFromHost.0, Elemwise{add,no_inplace}.0, GpuFromHost.0, Elemwise{add,no_inplace}.0, GpuFromHost.0]

updates: [(<CudaNdarrayType(float32, 4D)>, Elemwise{sub,no_inplace}.0), (<CudaNdarrayType(float32, vector)>, Elemwise{sub,no_inplace}.0), (w, Elemwise{sub,no_inplace}.0), (b, Elemwise{sub,no_inplace}.0), (w, Elemwise{sub,no_inplace}.0), (b, Elemwise{sub,no_inplace}.0)]

train_mb: <theano.compile.function_module.Function object at 0x000000002290B0B8>

Training mini-batch number 0

Traceback (most recent call last):

File "E:\Python\Anaconda3\lib\site-packages\theano\compile\function_module.py", line 884, in __call__

self.fn() if output_subset is None else\

ValueError: GpuReshape: cannot reshape input of shape (10, 20, 12, 12) to shape (10, 784).

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "E:\Python\NewPythonData\neural-networks-and-deep-learning\src\demo3.py", line 38, in <module>

net.SGD(training_data,10,mini_batch_size,0.1,validation_data,test_data)

File "E:\Python\NewPythonData\neural-networks-and-deep-learning\src\network3.py", line 179, in SGD

train_mb(minibatch_index)

File "E:\Python\Anaconda3\lib\site-packages\theano\compile\function_module.py", line 898, in __call__

storage_map=getattr(self.fn, 'storage_map', None))

File "E:\Python\Anaconda3\lib\site-packages\theano\gof\link.py", line 325, in raise_with_op

reraise(exc_type, exc_value, exc_trace)

File "E:\Python\Anaconda3\lib\site-packages\six.py", line 685, in reraise

raise value.with_traceback(tb)

File "E:\Python\Anaconda3\lib\site-packages\theano\compile\function_module.py", line 884, in __call__

self.fn() if output_subset is None else\

ValueError: GpuReshape: cannot reshape input of shape (10, 20, 12, 12) to shape (10, 784).

Apply node that caused the error: GpuReshape{2}(GpuElemwise{add,no_inplace}.0, TensorConstant{[ 10 784]})

Toposort index: 51

Inputs types: [CudaNdarrayType(float32, 4D), TensorType(int32, vector)]

Inputs shapes: [(10, 20, 12, 12), (2,)]

Inputs strides: [(2880, 144, 12, 1), (4,)]

Inputs values: ['not shown', array([ 10, 784])]

Outputs clients: [[GpuElemwise{Composite{(scalar_sigmoid(i0) * i1)},no_inplace}(GpuReshape{2}.0, GpuFromHost.0)]]

HINT: Re-running with most Theano optimization disabled could give you a back-trace of when this node was created. This can be done with by setting the Theano flag 'optimizer=fast_compile'. If that does not work, Theano optimizations can be disabled with 'optimizer=None'.

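HINT: Use the Theano flag 'exception_verbosity=high' for a debugprint and storage map footprint of this apply node.

If you run into a similar error, go back to the beginning and check whether the parameters you passed to the convolutional, pooling, and fully connected layers are wrong; the sizes have to agree from one layer to the next.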
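As a rough way to check layer parameters before training, the shapes can be worked out by hand. The sketch below is plain Python and not part of network3.py; the helper names conv_pool_output_shape and flattened_size are hypothetical, and the 28x28 single-channel input, twenty 5x5 filters, and 2x2 pooling are assumptions, chosen because they reproduce the (10, 20, 12, 12) shape in the traceback above.

```python
def conv_pool_output_shape(image_shape, filter_shape, poolsize):
    """Shape after a 'valid' convolution followed by non-overlapping pooling.

    image_shape  = (mini_batch_size, input_maps, height, width)
    filter_shape = (output_maps, input_maps, filter_height, filter_width)
    poolsize     = (pool_height, pool_width)
    """
    batch, in_maps, height, width = image_shape
    out_maps, filter_in_maps, fh, fw = filter_shape
    if in_maps != filter_in_maps:
        raise ValueError("input feature maps do not match the filter definition")
    conv_h, conv_w = height - fh + 1, width - fw + 1  # 'valid' convolution
    ph, pw = poolsize
    # Floor division mirrors ignore_border=True: partial windows are dropped.
    return (batch, out_maps, conv_h // ph, conv_w // pw)


def flattened_size(shape):
    """Number of values per example once everything after the batch axis is flattened."""
    size = 1
    for dim in shape[1:]:
        size *= dim
    return size


# Assumed layer settings (not stated in the post; they match the traceback).
out_shape = conv_pool_output_shape((10, 1, 28, 28), (20, 1, 5, 5), (2, 2))
print(out_shape)                  # (10, 20, 12, 12)
print(flattened_size(out_shape))  # 2880
```

Under these assumptions each image yields 20 * 12 * 12 = 2880 values after the conv-pool layer, so a following fully connected layer that expects 784 inputs cannot take it, which is consistent with the GpuReshape message.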

After the fix, the result was thrilling:

Trying to run under a GPU. If this is not desired, then modify network3.py

to set the GPU flag to False.

(<CudaNdarrayType(float32, matrix)>, Elemwise{Cast{int32}}.0)

cost: Elemwise{add,no_inplace}.0

grads: [Elemwise{add,no_inplace}.0, GpuFromHost.0, Elemwise{add,no_inplace}.0, GpuFromHost.0, Elemwise{add,no_inplace}.0, GpuFromHost.0]

updates: [(<CudaNdarrayType(float32, 4D)>, Elemwise{sub,no_inplace}.0), (<CudaNdarrayType(float32, vector)>, Elemwise{sub,no_inplace}.0), (w, Elemwise{sub,no_inplace}.0), (b, Elemwise{sub,no_inplace}.0), (w, Elemwise{sub,no_inplace}.0), (b, Elemwise{sub,no_inplace}.0)]

train_mb: <theano.compile.function_module.Function object at 0x0000000022A18208>

Training mini-batch number 0

Training mini-batch number 1000

Training mini-batch number 2000

Training mini-batch number 3000

Training mini-batch number 4000

Epoch 0: validation accuracy 93.73%

This is the best validation accuracy to date.

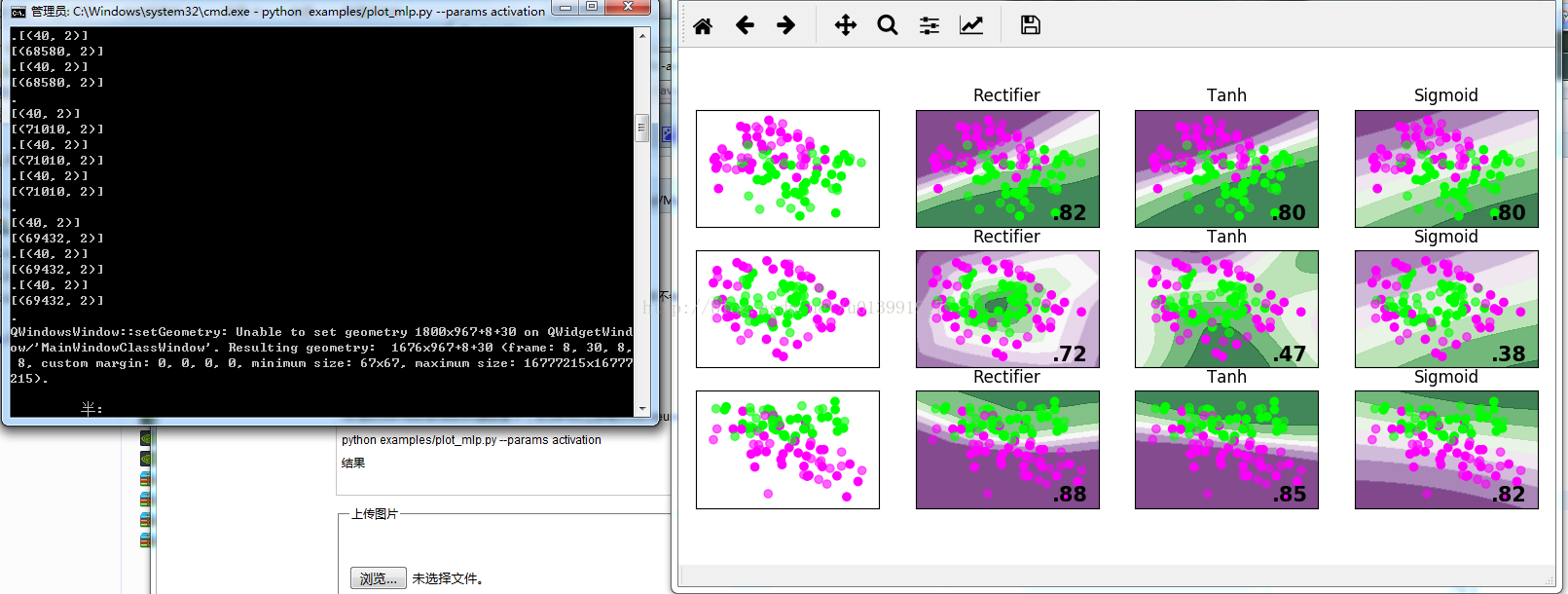

The corresponding test accuracy is 93.20%

examples/plot_mlp.py from scikit-neuralnetwork

This test also ran successfully:

python examples/plot_mlp.py --params activation

Result: