Elastic-Job Internals: Job Scheduling and Execution (Part 4)

阿新 • Published: 2019-01-07

The previous post, Elastic-Job Internals: Job Sharding Strategies (Part 3), covered Elastic-Job's sharding strategies. This post looks at how Elastic-Job executes a sharded job.

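First, Elastic-Job's scheduling mechanism is still built on Quartz. Elastic-Job therefore provides LiteJob, an implementation of Quartz's Job interface, so that the job is fired according to its scheduling rule.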

Implementing Quartz's Job interface:

    public final class LiteJob implements Job {

        @Setter
        private ElasticJob elasticJob;

        @Setter
        private JobFacade jobFacade;

        @Override
        public void execute(final JobExecutionContext context) throws JobExecutionException {
            JobExecutorFactory.getJobExecutor(elasticJob, jobFacade).execute();
        }
    }

JobExecutorFactory picks a job executor based on the job type, and LiteJob then calls that executor's execute method:

    /**
     * Get the job executor.
     *
     * @param elasticJob distributed elastic job
     * @param jobFacade facade for the job's internal services
     * @return job executor
     */
    @SuppressWarnings("unchecked")
    public static AbstractElasticJobExecutor getJobExecutor(final ElasticJob elasticJob, final JobFacade jobFacade) {
        if (null == elasticJob) {
            return new ScriptJobExecutor(jobFacade);
        }
        if (elasticJob instanceof SimpleJob) {
            return new SimpleJobExecutor((SimpleJob) elasticJob, jobFacade);
        }
        if (elasticJob instanceof DataflowJob) {
            return new DataflowJobExecutor((DataflowJob) elasticJob, jobFacade);
        }
        throw new JobConfigurationException("Cannot support job type '%s'", elasticJob.getClass().getCanonicalName());
    }
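To try the dispatch rule in isolation, here is a minimal sketch. The nested `*Stub` interfaces and the `chooseExecutor` helper are hypothetical stand-ins, not Elastic-Job classes; they only mimic the null check and the `instanceof` chain of the factory above.

```java
final class ExecutorDispatchSketch {

    // Hypothetical stand-ins that mimic the shape of the job interfaces.
    interface ElasticJobStub { }

    interface SimpleJobStub extends ElasticJobStub { }

    interface DataflowJobStub extends ElasticJobStub { }

    // Returns the name of the executor the factory above would pick for this job.
    static String chooseExecutor(final ElasticJobStub job) {
        if (null == job) {
            // In the factory above, a null job falls through to the script executor.
            return "ScriptJobExecutor";
        }
        if (job instanceof SimpleJobStub) {
            return "SimpleJobExecutor";
        }
        if (job instanceof DataflowJobStub) {
            return "DataflowJobExecutor";
        }
        // Any other job type is rejected, as in the factory.
        throw new IllegalArgumentException("Cannot support job type '" + job.getClass().getName() + "'");
    }

    public static void main(final String[] args) {
        System.out.println(chooseExecutor(null));                        // ScriptJobExecutor
        System.out.println(chooseExecutor(new SimpleJobStub() { }));     // SimpleJobExecutor
        System.out.println(chooseExecutor(new DataflowJobStub() { }));   // DataflowJobExecutor
    }
}
```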

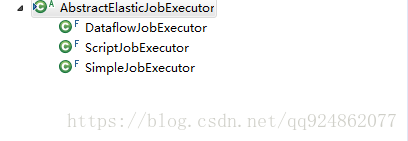

Elastic-Job provides the abstract executor class AbstractElasticJobExecutor, which obtains the job's sharding information and handles concerns such as misfire and failover.

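Its execute method fetches the sharding information and then runs a series of steps: the environment check, the misfire check, the before and after callbacks, the sharded execution itself, and failover.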

    /**
     * Execute the job.
     */
    public final void execute() {
        try {
            jobFacade.checkJobExecutionEnvironment();
        } catch (final JobExecutionEnvironmentException cause) {
            jobExceptionHandler.handleException(jobName, cause);
        }
        // Get the sharding information
        ShardingContexts shardingContexts = jobFacade.getShardingContexts();
        if (shardingContexts.isAllowSendJobEvent()) {
            jobFacade.postJobStatusTraceEvent(shardingContexts.getTaskId(), State.TASK_STAGING, String.format("Job '%s' execute begin.", jobName));
        }
        if (jobFacade.misfireIfRunning(shardingContexts.getShardingItemParameters().keySet())) {
            if (shardingContexts.isAllowSendJobEvent()) {
                jobFacade.postJobStatusTraceEvent(shardingContexts.getTaskId(), State.TASK_FINISHED, String.format(
                        "Previous job '%s' - shardingItems '%s' is still running, misfired job will start after previous job completed.", jobName,
                        shardingContexts.getShardingItemParameters().keySet()));
            }
            return;
        }
        try {
            jobFacade.beforeJobExecuted(shardingContexts);
            //CHECKSTYLE:OFF
        } catch (final Throwable cause) {
            //CHECKSTYLE:ON
            jobExceptionHandler.handleException(jobName, cause);
        }
        // Execute the sharded job
        execute(shardingContexts, JobExecutionEvent.ExecutionSource.NORMAL_TRIGGER);
        while (jobFacade.isExecuteMisfired(shardingContexts.getShardingItemParameters().keySet())) {
            jobFacade.clearMisfire(shardingContexts.getShardingItemParameters().keySet());
            execute(shardingContexts, JobExecutionEvent.ExecutionSource.MISFIRE);
        }
        jobFacade.failoverIfNecessary();
        try {
            jobFacade.afterJobExecuted(shardingContexts);
            //CHECKSTYLE:OFF
        } catch (final Throwable cause) {
            //CHECKSTYLE:ON
            jobExceptionHandler.handleException(jobName, cause);
        }
    }
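The misfire handling is the least obvious part of this method, so here is a small single-threaded sketch of it. Everything in it is an assumption made for illustration: `MisfireFlowSketch`, its two boolean flags, and the `trigger` method are hypothetical in-memory stand-ins for state that the real JobFacade tracks per sharding item. A trigger that arrives while a run is active only records a misfire and returns; the active run then re-executes once it finishes.

```java
import java.util.ArrayList;
import java.util.List;

final class MisfireFlowSketch {

    // Hypothetical in-memory flags standing in for the facade's running/misfire state.
    private boolean running;
    private boolean misfired;
    private final List<String> log = new ArrayList<>();

    // Mirrors misfireIfRunning: if a run is still active, record a misfire and report true.
    private boolean misfireIfRunning() {
        if (running) {
            misfired = true;
            return true;
        }
        return false;
    }

    // One trigger of the job; 'body' stands for the sharded work.
    void trigger(final Runnable body) {
        if (misfireIfRunning()) {
            log.add("skipped");
            return;
        }
        run(body, "normal");
        // Re-run for as long as a trigger was missed during the previous run.
        while (misfired) {
            misfired = false;
            run(body, "misfire");
        }
    }

    private void run(final Runnable body, final String source) {
        running = true;
        try {
            log.add(source);
            body.run();
        } finally {
            running = false;
        }
    }

    // Simulates a second trigger arriving while the first run is still in progress.
    static List<String> demo() {
        final MisfireFlowSketch job = new MisfireFlowSketch();
        final int[] calls = {0};
        job.trigger(() -> {
            if (calls[0]++ == 0) {
                job.trigger(() -> { });
            }
        });
        return job.log;
    }

    public static void main(final String[] args) {
        System.out.println(demo()); // [normal, skipped, misfire]
    }
}
```

The overlapping trigger is simulated by re-entering `trigger` from inside the job body, which keeps the sketch deterministic without threads.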

The inner execute method records the job's status (begin, running, then finished or error) and calls the process method:

    private void execute(final ShardingContexts shardingContexts, final JobExecutionEvent.ExecutionSource executionSource) {
        if (shardingContexts.getShardingItemParameters().isEmpty()) {
            if (shardingContexts.isAllowSendJobEvent()) {
                jobFacade.postJobStatusTraceEvent(shardingContexts.getTaskId(), State.TASK_FINISHED, String.format("Sharding item for job '%s' is empty.", jobName));
            }
            return;
        }
        jobFacade.registerJobBegin(shardingContexts);
        String taskId = shardingContexts.getTaskId();
        if (shardingContexts.isAllowSendJobEvent()) {
            jobFacade.postJobStatusTraceEvent(taskId, State.TASK_RUNNING, "");
        }
        try {
            // Execute the sharded job
            process(shardingContexts, executionSource);
        } finally {
            // TODO consider adding a job-failure state, and how to handle the overall flow when a job fails
            jobFacade.registerJobCompleted(shardingContexts);
            if (itemErrorMessages.isEmpty()) {
                if (shardingContexts.isAllowSendJobEvent()) {
                    jobFacade.postJobStatusTraceEvent(taskId, State.TASK_FINISHED, "");
                }
            } else {
                if (shardingContexts.isAllowSendJobEvent()) {
                    jobFacade.postJobStatusTraceEvent(taskId, State.TASK_ERROR, itemErrorMessages.toString());
                }
            }
        }
    }

The process method looks at the number of shard items: a single item is executed directly on the current thread, while multiple items are each submitted to the thread pool.

    // Execute the shard items according to how many there are
    private void process(final ShardingContexts shardingContexts, final JobExecutionEvent.ExecutionSource executionSource) {
        Collection<Integer> items = shardingContexts.getShardingItemParameters().keySet();
        // If there is only one shard item, execute it directly
        if (1 == items.size()) {
            int item = shardingContexts.getShardingItemParameters().keySet().iterator().next();
            JobExecutionEvent jobExecutionEvent = new JobExecutionEvent(shardingContexts.getTaskId(), jobName, executionSource, item);
            process(shardingContexts, item, jobExecutionEvent);
            return;
        }
        final CountDownLatch latch = new CountDownLatch(items.size());
        // Submit each shard item to the thread pool
        for (final int each : items) {
            final JobExecutionEvent jobExecutionEvent = new JobExecutionEvent(shardingContexts.getTaskId(), jobName, executionSource, each);
            if (executorService.isShutdown()) {
                return;
            }
            executorService.submit(new Runnable() {

                @Override
                public void run() {
                    try {
                        process(shardingContexts, each, jobExecutionEvent);
                    } finally {
                        latch.countDown();
                    }
                }
            });
        }
        try {
            // Wait for all shard items to finish
            latch.await();
        } catch (final InterruptedException ex) {
            Thread.currentThread().interrupt();
        }
    }

The per-item process method then calls the subclass's process method, which runs the job according to its type:

    private void process(final ShardingContexts shardingContexts, final int item, final JobExecutionEvent startEvent) {
        if (shardingContexts.isAllowSendJobEvent()) {
            jobFacade.postJobExecutionEvent(startEvent);
        }
        log.trace("Job '{}' executing, item is: '{}'.", jobName, item);
        JobExecutionEvent completeEvent;
        try {
            // Execute this shard item
            process(new ShardingContext(shardingContexts, item));
            completeEvent = startEvent.executionSuccess();
            log.trace("Job '{}' executed, item is: '{}'.", jobName, item);
            if (shardingContexts.isAllowSendJobEvent()) {
                jobFacade.postJobExecutionEvent(completeEvent);
            }
            // CHECKSTYLE:OFF
        } catch (final Throwable cause) {
            // CHECKSTYLE:ON
            completeEvent = startEvent.executionFailure(cause);
            jobFacade.postJobExecutionEvent(completeEvent);
            itemErrorMessages.put(item, ExceptionUtil.transform(cause));
            jobExceptionHandler.handleException(jobName, cause);
        }
    }
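The fan-out across shard items and the per-item error capture shown above can be tried without Elastic-Job. The following is a minimal sketch under stated assumptions: `ShardFanOutSketch`, `runAll`, and `runOne` are hypothetical names, the thread pool is created per call purely to keep the example self-contained, and only `RuntimeException` is caught where the original catches `Throwable`.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.IntConsumer;

final class ShardFanOutSketch {

    // Runs 'work' once per shard item and returns the error messages keyed by item.
    static Map<Integer, String> runAll(final Set<Integer> items, final IntConsumer work) {
        final Map<Integer, String> itemErrorMessages = new ConcurrentHashMap<>();
        if (items.isEmpty()) {
            return itemErrorMessages;
        }
        if (1 == items.size()) {
            // Single shard item: run on the calling thread, no pool needed.
            runOne(items.iterator().next(), work, itemErrorMessages);
            return itemErrorMessages;
        }
        // Assumption: a pool per call keeps the sketch self-contained.
        final ExecutorService executorService = Executors.newFixedThreadPool(items.size());
        final CountDownLatch latch = new CountDownLatch(items.size());
        try {
            for (final int each : items) {
                executorService.submit(() -> {
                    try {
                        runOne(each, work, itemErrorMessages);
                    } finally {
                        // Always count down, so a failing item cannot block the caller.
                        latch.countDown();
                    }
                });
            }
            // Wait until every shard item has finished.
            latch.await();
        } catch (final InterruptedException ex) {
            Thread.currentThread().interrupt();
        } finally {
            executorService.shutdown();
        }
        return itemErrorMessages;
    }

    // Runs one item and records its failure instead of letting it propagate.
    private static void runOne(final int item, final IntConsumer work, final Map<Integer, String> errors) {
        try {
            work.accept(item);
        } catch (final RuntimeException ex) {
            errors.put(item, String.valueOf(ex.getMessage()));
        }
    }

    public static void main(final String[] args) {
        final Set<Integer> items = new TreeSet<>(Arrays.asList(0, 1, 2));
        final Map<Integer, String> errors = runAll(items, item -> {
            if (1 == item) {
                throw new IllegalStateException("item 1 failed");
            }
        });
        System.out.println(errors); // {1=item 1 failed}
    }
}
```

Counting down in a `finally` block matters here: if a failing item skipped the countdown, `latch.await()` would never return.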

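Finally, depending on the job type, the matching AbstractElasticJobExecutor implementation is invoked. For a simple job, that is SimpleJobExecutor: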

    /**
     * Simple job executor.
     *
     * @author zhangliang
     */
    public final class SimpleJobExecutor extends AbstractElasticJobExecutor {

        private final SimpleJob simpleJob;

        public SimpleJobExecutor(final SimpleJob simpleJob, final JobFacade jobFacade) {
            super(jobFacade);
            this.simpleJob = simpleJob;
        }

        @Override
        protected void process(final ShardingContext shardingContext) {
            simpleJob.execute(shardingContext);
        }
    }
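To show how the pieces fit together from the job author's side, here is a minimal sketch of the same template-method arrangement with stand-in types. All names in it (`ShardContextStub`, `SimpleJobStub`, `ExecutorStub`, `SimpleExecutorStub`, `idsHandledBy`) are hypothetical, and the rule that a shard handles the ids whose remainder equals its item index is an assumption of this example, not something Elastic-Job prescribes.

```java
import java.util.ArrayList;
import java.util.List;

final class SimpleJobSketch {

    // Hypothetical stand-in for ShardingContext: only the item index and the total shard count.
    static final class ShardContextStub {
        final int shardingItem;
        final int shardingTotalCount;

        ShardContextStub(final int shardingItem, final int shardingTotalCount) {
            this.shardingItem = shardingItem;
            this.shardingTotalCount = shardingTotalCount;
        }
    }

    // Stand-in for the SimpleJob interface: one callback per shard item.
    interface SimpleJobStub {
        void execute(ShardContextStub context);
    }

    // Template method: the base class owns the loop over shard items, subclasses supply process.
    abstract static class ExecutorStub {
        final void execute(final int[] localItems, final int totalCount) {
            for (final int item : localItems) {
                process(new ShardContextStub(item, totalCount));
            }
        }

        protected abstract void process(ShardContextStub context);
    }

    // Same shape as SimpleJobExecutor: process just delegates to the user's job.
    static final class SimpleExecutorStub extends ExecutorStub {
        private final SimpleJobStub job;

        SimpleExecutorStub(final SimpleJobStub job) {
            this.job = job;
        }

        @Override
        protected void process(final ShardContextStub context) {
            job.execute(context);
        }
    }

    // Example job (assumed rule): a shard handles the ids with id % totalCount == its item index.
    static List<Integer> idsHandledBy(final int[] localItems, final int totalCount, final int maxId) {
        final List<Integer> handled = new ArrayList<>();
        final SimpleJobStub job = context -> {
            for (int id = 0; id < maxId; id++) {
                if (id % context.shardingTotalCount == context.shardingItem) {
                    handled.add(id);
                }
            }
        };
        new SimpleExecutorStub(job).execute(localItems, totalCount);
        return handled;
    }

    public static void main(final String[] args) {
        // This instance owns shard items 0 and 2 out of 3, over ids 0..6.
        System.out.println(idsHandledBy(new int[] {0, 2}, 3, 7)); // [0, 3, 6, 2, 5]
    }
}
```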