Learning and Understanding Homography

Content adapted from:

What is a Homography?

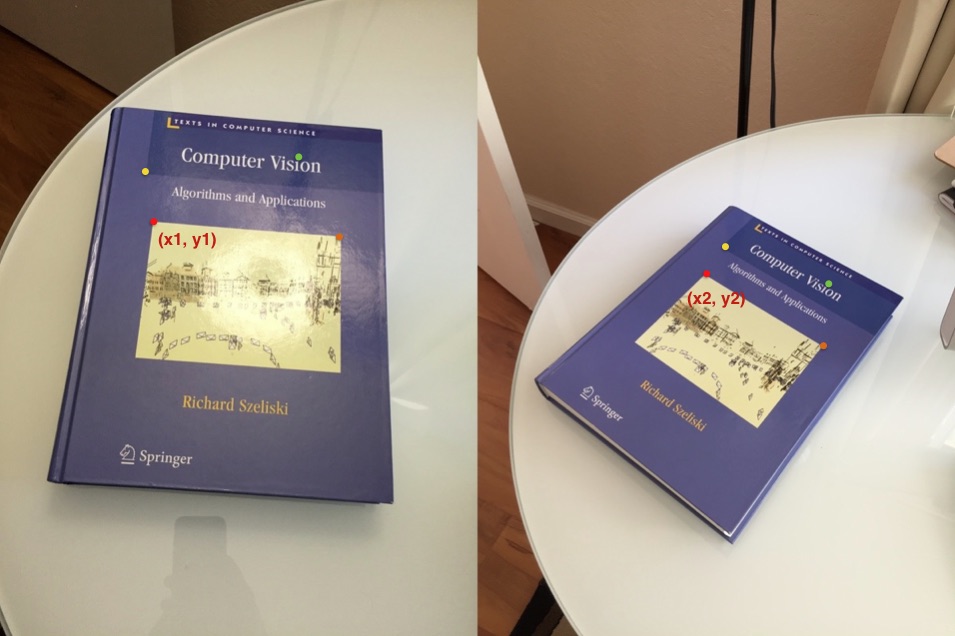

Consider two images of a plane (the top of the book) shown in Figure 1. The red dot represents the same physical point in the two images. In computer vision jargon, we call these corresponding points. Figure 1 shows four corresponding points in four different colors: red, green, yellow and orange. A homography is a transformation (a 3×3 matrix) that maps the points in one image to the corresponding points in the other image.

Figure 1: Two images of a 3D plane (top of the book) are related by a homography

Now, since a homography is a 3×3 matrix, we can write it as

$$
H = \left[ \begin{array}{ccc} h_{00} & h_{01} & h_{02} \\ h_{10} & h_{11} & h_{12} \\ h_{20} & h_{21} & h_{22} \end{array} \right]
$$

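Let us consider the first set of corresponding points: $(x_1, y_1)$ in the first image and $(x_2, y_2)$ in the second image. The homography $H$ maps them as follows, where the equality holds in homogeneous coordinates, i.e. up to a non-zero scale factor: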

$$
\left[ \begin{array}{c} x_1 \\ y_1 \\ 1 \end{array} \right] = H \left[ \begin{array}{c} x_2 \\ y_2 \\ 1 \end{array} \right] = \left[ \begin{array}{ccc} h_{00} & h_{01} & h_{02} \\ h_{10} & h_{11} & h_{12} \\ h_{20} & h_{21} & h_{22} \end{array} \right] \left[ \begin{array}{c} x_2 \\ y_2 \\ 1 \end{array} \right]
$$
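Because the equation above holds only up to scale, pixel coordinates are obtained by dividing the first two components of the product by the third. A minimal plain-Python sketch of that step follows; the function name `apply_homography` and the sample matrix are illustrative assumptions, not part of the original post or of OpenCV:

```python
# Sketch: apply a 3x3 homography H (nested lists) to a 2-D point.
# The mapping holds in homogeneous coordinates (up to scale), so the
# result is divided by its third component to get pixel coordinates.

def apply_homography(H, x, y):
    """Map (x, y) through H and return the de-homogenized point."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity (w == 0)")
    return u / w, v / w


# Hypothetical homography, and the same matrix scaled by 2.
H = [[1.0, 0.2, 5.0],
     [0.1, 1.0, -3.0],
     [0.001, 0.002, 1.0]]
H2 = [[2 * h for h in row] for row in H]

print(apply_homography(H, 100, 50))
print(apply_homography(H2, 100, 50))  # same point as the line above
```

Multiplying every entry of `H` by the same non-zero constant leaves the mapped point unchanged, which is why a homography has only 8 degrees of freedom even though it has 9 entries.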

Image Alignment Using Homography

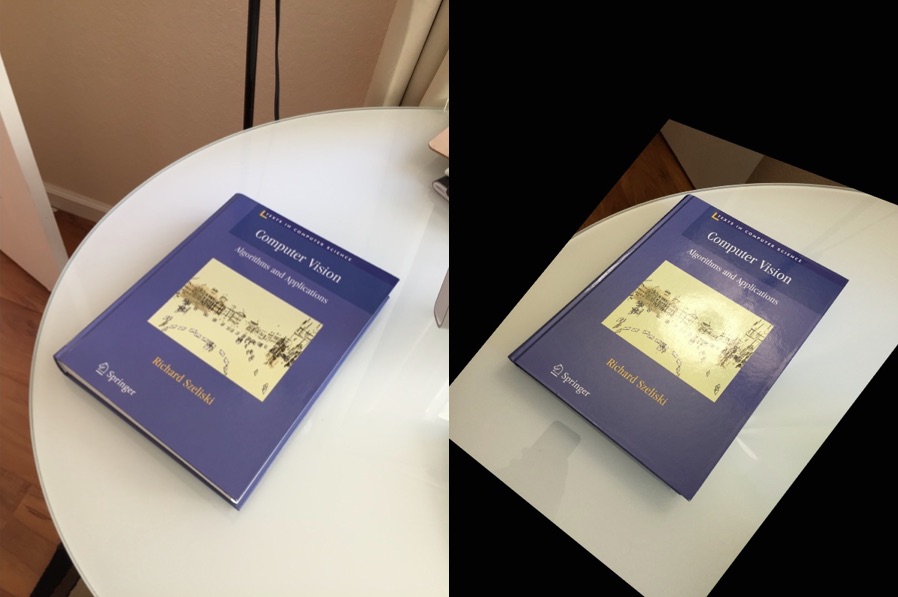

The above equation holds for ALL sets of corresponding points, as long as they lie on the same plane in the real world. In other words, you can apply the homography to the first image and the book in the first image will get aligned with the book in the second image! See Figure 2.

Figure 2: One image of a 3D plane can be aligned with another image of the same plane using a homography

But what about points that are not on the plane? Well, they will NOT be aligned by a homography, as you can see in Figure 2. But wait, what if there are two planes in the image? Then you have two homographies, one for each plane.
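The claim that off-plane points are not aligned can be checked with a small synthetic setup. This sketch assumes normalized image coordinates (intrinsic matrix equal to the identity), a second camera that is only translated by `t` relative to the first, and a plane $Z = d$ in the first camera's coordinates; for that plane the induced homography is $H = I + t\,n^T/d$ with $n = (0, 0, 1)^T$. All names and numbers here are hypothetical:

```python
# Sketch: a plane's homography aligns on-plane points but not off-plane ones.
# Assumptions (illustrative only): normalized coordinates (K = identity),
# camera 2 = camera 1 translated by t with no rotation, plane Z = d.

def project(X, t=(0.0, 0.0, 0.0)):
    """Pinhole projection of 3-D point X into a camera translated by t."""
    x, y, z = X[0] + t[0], X[1] + t[1], X[2] + t[2]
    return x / z, y / z


def plane_homography(t, d):
    """H = I + t n^T / d for the plane Z = d, i.e. n = (0, 0, 1)."""
    return [[1.0, 0.0, t[0] / d],
            [0.0, 1.0, t[1] / d],
            [0.0, 0.0, 1.0 + t[2] / d]]


def apply_h(H, p):
    """Map a 2-D point through H and divide out the scale."""
    u = H[0][0] * p[0] + H[0][1] * p[1] + H[0][2]
    v = H[1][0] * p[0] + H[1][1] * p[1] + H[1][2]
    w = H[2][0] * p[0] + H[2][1] * p[1] + H[2][2]
    return u / w, v / w


t, d = (1.0, 0.0, 0.0), 5.0
H = plane_homography(t, d)

on_plane = (1.0, 2.0, 5.0)    # Z == d: lies on the plane
off_plane = (1.0, 2.0, 10.0)  # Z != d: behind the plane

for X in (on_plane, off_plane):
    predicted = apply_h(H, project(X))  # where H says it should appear
    actual = project(X, t)              # where camera 2 actually sees it
    print(X, predicted, actual)
```

The on-plane point lands exactly where the second camera sees it, while the point behind the plane is off by 0.1 in normalized x: that residual is the parallax a single homography cannot model.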

Panorama: An Application of Homography

In the previous section, we learned that if a homography between two images is known, we can warp one image onto the other. However, there was one big caveat: the images had to contain a plane (the top of a book), and only the planar part was aligned properly. It turns out that if you take a picture of any scene (not just a plane) and then take a second picture by rotating the camera, the two images are related by a homography! In other words, you can mount your camera on a tripod and take a picture, then pan it about the vertical axis and take another picture. As long as the camera only rotates (ideally about its optical center) and does not translate, the two images you just took of a completely arbitrary 3D scene are related by a homography. The two images will share some common regions that can be aligned and stitched, and bingo, you have a panorama of two images. Is it really that easy? Nope! (Sorry to disappoint.) A lot more goes into creating a good panorama, but the basic principle is to align using a homography and stitch intelligently so that you do not see the seams. Creating panoramas will definitely be part of a future post.
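Why does a pure rotation give a homography for any scene? For a camera with intrinsic matrix $K$ that rotates by $R$ about its optical center, a pixel $x_1$ in the first view and its match $x_2$ in the second satisfy $x_2 \sim K R K^{-1} x_1$, independently of the point's depth. The sketch below checks this numerically in plain Python; the intrinsics, the 10 degree pan and the scene points are assumed values chosen for illustration:

```python
import math

def matmul(A, B):
    """3x3 matrix product A @ B using nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def matvec(A, v):
    """3x3 matrix times a 3-vector."""
    return [sum(A[i][k] * v[k] for k in range(3)) for i in range(3)]

def to_pixel(p):
    """Divide out the homogeneous coordinate."""
    return p[0] / p[2], p[1] / p[2]

# Assumed intrinsics: focal length and principal point, in pixels.
f, cx, cy = 800.0, 320.0, 240.0
K = [[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]]
K_inv = [[1 / f, 0.0, -cx / f], [0.0, 1 / f, -cy / f], [0.0, 0.0, 1.0]]

# Assumed pan of 10 degrees about the camera's vertical (y) axis.
# R maps camera-1 coordinates to camera-2 coordinates; no translation.
theta = math.radians(10.0)
R = [[math.cos(theta), 0.0, math.sin(theta)],
     [0.0, 1.0, 0.0],
     [-math.sin(theta), 0.0, math.cos(theta)]]

H = matmul(K, matmul(R, K_inv))  # homography induced by a pure rotation

# Scene points at very different depths (not on any common plane).
points = [[0.5, -0.2, 2.0], [3.0, 1.0, 40.0], [-1.0, 0.3, 7.5]]
results = []
for X in points:
    p1 = to_pixel(matvec(K, X))                        # pixel in view 1
    p2 = to_pixel(matvec(K, matvec(R, X)))             # pixel in view 2
    mapped = to_pixel(matvec(H, [p1[0], p1[1], 1.0]))  # H applied to view 1
    results.append((p2, mapped))
    print(p2, mapped)
```

Each printed pair should agree to floating-point precision even though the points lie at depths from 2 to 40 units. Adding a translation to the second camera would, in general, break this, which is why panoramas are shot by rotating the camera in place.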

How to calculate a Homography?

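To calculate a homography between two images, you need to know at least 4 point correspondences between the two images, with no three of the points collinear. The reason is that a homography has 8 degrees of freedom (9 entries, but defined only up to scale), and each correspondence contributes two equations, one for x and one for y. If you have more than 4 corresponding points, it is even better: OpenCV will estimate a homography that best fits all of them, and with a robust method such as RANSAC it can also reject mismatched points. Usually, these point correspondences are found automatically by matching features like SIFT or SURF between the images, but in this post we are simply going to click the points by hand.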
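To make the 4-point requirement concrete, here is a minimal sketch of the direct linear transform (DLT) for exactly four correspondences: each pair contributes two linear equations in the unknown entries of $H$, and fixing $h_{22} = 1$ (an assumption that fails only in the rare case where the true $h_{22}$ is 0) leaves an $8 \times 8$ system. This is an illustration in plain Python with hypothetical function names, not OpenCV's implementation, which also handles more than four points and, optionally, robust estimation:

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def homography_from_4_points(src, dst):
    """Exact H (with h22 fixed to 1) mapping 4 src points onto 4 dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u * (h20 x + h21 y + 1) = h00 x + h01 y + h02, rearranged.
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        # From v * (h20 x + h21 y + 1) = h10 x + h11 y + h12, rearranged.
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


# Book corners from the C++ example below (source -> destination).
pts_src = [(141, 131), (480, 159), (493, 630), (64, 601)]
pts_dst = [(318, 256), (534, 372), (316, 670), (73, 473)]
H = homography_from_4_points(pts_src, pts_dst)
for row in H:
    print(row)
```

Mapping each source corner through the returned `H` (and dividing by the third component) reproduces its destination corner, up to rounding error.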

Let’s look at the usage first.

C++

```cpp
// pts_src and pts_dst are vectors of points in source
// and destination images. They are of type vector<Point2f>.
// We need at least 4 corresponding points.
Mat h = findHomography(pts_src, pts_dst);

// The calculated homography can be used to warp
// the source image to destination. im_src and im_dst are
// of type Mat. Size is the size (width,height) of im_dst.
warpPerspective(im_src, im_dst, h, size);
```

Python

```python
# pts_src and pts_dst are numpy arrays of points
# in source and destination images. We need at least
# 4 corresponding points.
h, status = cv2.findHomography(pts_src, pts_dst)

# The calculated homography can be used to warp
# the source image to destination. Size is the
# size (width,height) of im_dst.
im_dst = cv2.warpPerspective(im_src, h, size)
```
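`warpPerspective` does not push source pixels forward; by default it inverts the matrix and, for every output pixel, looks up the corresponding source location (the `WARP_INVERSE_MAP` flag tells it the matrix is already inverted). The sketch below mimics that idea on a tiny image stored as nested lists. It uses nearest-neighbour sampling for brevity, whereas OpenCV's default interpolation is bilinear (`INTER_LINEAR`); the function names are hypothetical:

```python
def invert_3x3(M):
    """Inverse of a 3x3 matrix via the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = M
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0:
        raise ValueError("homography is singular")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[v / det for v in row] for row in adj]


def warp_nearest(src, H, out_w, out_h, fill=0):
    """Warp 2-D list `src` by H (source -> output) using inverse mapping."""
    H_inv = invert_3x3(H)
    src_h, src_w = len(src), len(src[0])
    out = [[fill] * out_w for _ in range(out_h)]
    for yd in range(out_h):
        for xd in range(out_w):
            # Map the output pixel back into the source image.
            w = H_inv[2][0] * xd + H_inv[2][1] * yd + H_inv[2][2]
            if w == 0:
                continue
            xs = (H_inv[0][0] * xd + H_inv[0][1] * yd + H_inv[0][2]) / w
            ys = (H_inv[1][0] * xd + H_inv[1][1] * yd + H_inv[1][2]) / w
            xi, yi = round(xs), round(ys)  # nearest source pixel
            if 0 <= xi < src_w and 0 <= yi < src_h:
                out[yd][xd] = src[yi][xi]
    return out


# Usage: a pure translation one pixel to the right.
image = [[1, 2, 3],
         [4, 5, 6]]
shift_right = [[1, 0, 1], [0, 1, 0], [0, 0, 1]]
print(warp_nearest(image, shift_right, 3, 2))  # -> [[0, 1, 2], [0, 4, 5]]
```

Output pixels whose preimage falls outside the source keep the `fill` value, much like the black border that appears around a warped image.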

Let us look at a more complete example in both C++ and Python.

OpenCV C++ Homography Example

The images in Figure 2 can be generated using the following C++ code. The code below shows how to take four corresponding points in two images and warp one image onto the other.

```cpp
#include "opencv2/opencv.hpp"
#include <iostream>

using namespace cv;
using namespace std;

int main(int argc, char** argv)
{
    // Read source image.
    Mat im_src = imread("book2.jpg");
    // Four corners of the book in source image
    vector<Point2f> pts_src;
    pts_src.push_back(Point2f(141, 131));
    pts_src.push_back(Point2f(480, 159));
    pts_src.push_back(Point2f(493, 630));
    pts_src.push_back(Point2f(64, 601));

    // Read destination image.
    Mat im_dst = imread("book1.jpg");
    // Four corners of the book in destination image.
    vector<Point2f> pts_dst;
    pts_dst.push_back(Point2f(318, 256));
    pts_dst.push_back(Point2f(534, 372));
    pts_dst.push_back(Point2f(316, 670));
    pts_dst.push_back(Point2f(73, 473));

    // Stop early if either image could not be read.
    if (im_src.empty() || im_dst.empty())
    {
        cerr << "Could not read book1.jpg or book2.jpg" << endl;
        return 1;
    }

    // Calculate Homography
    Mat h = findHomography(pts_src, pts_dst);

    // Output image
    Mat im_out;
    // Warp source image to destination based on homography
    warpPerspective(im_src, im_out, h, im_dst.size());

    // Display images and wait for a key press.
    imshow("Source Image", im_src);
    imshow("Destination Image", im_dst);
    imshow("Warped Source Image", im_out);
    waitKey(0);

    return 0;
}
```