Caffe Source Code: The ReLU Layer

阿新 • Published: 2019-01-11

This post walks through /src/caffe/layers/relu_layer.cpp in the Caffe framework, the file that implements the ReLU activation function.

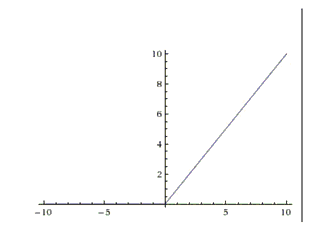

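ReLU has become a very popular activation function in recent years. It has some advantages over sigmoid and tanh; for a comparison of the three, see https://zhuanlan.zhihu.com/p/21462488?refer=intelligentunit . Its formula is f(x) = max(0, x). In other words, the activation is simply a threshold at 0, as the figure above shows.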
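As a minimal sketch of the formula f(x) = max(0, x), independent of Caffe: the helper name `relu` and the use of `std::vector<float>` are assumptions made for illustration, not anything from the Caffe source.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical helper for illustration only; not part of Caffe.
// Applies f(x) = max(0, x) to every element and returns a new vector.
std::vector<float> relu(const std::vector<float>& x) {
  std::vector<float> y(x.size());
  std::transform(x.begin(), x.end(), y.begin(),
                 [](float v) { return std::max(0.0f, v); });
  return y;
}
```

Negative inputs are clamped to 0 and non-negative inputs pass through unchanged, which is the threshold behaviour described above.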

Below are the comments I wrote while reading the ReLU layer code:

relu_layer.hpp:

#ifndef CAFFE_RELU_LAYER_HPP_

relu_layer.cpp:

#include <algorithm>
#include <vector>

#include "caffe/layers/relu_layer.hpp"

namespace caffe {

/* Forward pass of the ReLU layer */
template <typename Dtype>
void ReLULayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,
    const vector<Blob<Dtype>*>& top) {
  const Dtype* bottom_data = bottom[0]->cpu_data();  // read-only pointer to the input data
  Dtype* top_data = top[0]->mutable_cpu_data();  // writable pointer to the output data
  // Total number of elements in the input blob
  const int count = bottom[0]->count();
  // negative_slope is the Leaky ReLU parameter; it defaults to 0, which gives the plain ReLU.
  // Leaky ReLU is an attempt to address the "dying ReLU" problem.
  // With plain ReLU the output is 0 whenever x < 0; Leaky ReLU instead uses a small nonzero slope for negative inputs, e.g. 0.01.
  // Leaky ReLU formula: f(x) = max(x, 0) + alpha * min(x, 0), where alpha is negative_slope in the code below.
  Dtype negative_slope = this->layer_param_.relu_param().negative_slope();
  for (int i = 0; i < count; ++i) {
    top_data[i] = std::max(bottom_data[i], Dtype(0))
        + negative_slope * std::min(bottom_data[i], Dtype(0));
  }
}

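/* Backward pass of the ReLU layer */
template <typename Dtype>
void ReLULayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,
    const vector<bool>& propagate_down,
    const vector<Blob<Dtype>*>& bottom) {
  // propagate_down says whether the gradient with respect to bottom has to be computed; it matters a lot in Caffe's backpropagation.
  if (propagate_down[0]) {
    // Pointer to the data the previous layer produced in the forward pass (this layer's input)
    const Dtype* bottom_data = bottom[0]->cpu_data();
    // Pointer to the gradient passed back from the next layer (this layer's input during the backward pass)
    const Dtype* top_diff = top[0]->cpu_diff();
    // Pointer to the gradient handed back to the previous layer (this layer's output during the backward pass)
    Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();
    // Total number of elements to process
    const int count = bottom[0]->count();
    // See the explanation of this parameter in Forward_cpu above
    Dtype negative_slope = this->layer_param_.relu_param().negative_slope();
    // (bottom_data[i] > 0) implements the derivative of ReLU: it is a boolean test that evaluates to 1 when bottom_data[i] > 0 and to 0 otherwise.
    // ((bottom_data[i] > 0) + negative_slope * (bottom_data[i] <= 0)) implements the derivative of Leaky ReLU.
    // By the chain rule, the gradient passed to the previous layer equals the gradient received from the next layer multiplied by the derivative of this layer's function.
    for (int i = 0; i < count; ++i) {
      bottom_diff[i] = top_diff[i] * ((bottom_data[i] > 0)
          + negative_slope * (bottom_data[i] <= 0));
    }
  }
}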

#ifdef CPU_ONLY
STUB_GPU(ReLULayer);
#endif

INSTANTIATE_CLASS(ReLULayer);

}  // namespace caffe
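To try the forward and backward loops outside of Caffe, here is a rough standalone sketch. The function names `leaky_relu_forward` and `leaky_relu_backward` and the use of `std::vector<float>` in place of `Blob<Dtype>` are assumptions made for illustration; only the arithmetic is taken from the listing above.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical standalone helpers for illustration; they mirror the loops in
// Forward_cpu / Backward_cpu but are not part of Caffe.

// Forward: y[i] = max(x[i], 0) + negative_slope * min(x[i], 0).
std::vector<float> leaky_relu_forward(const std::vector<float>& bottom_data,
                                      float negative_slope) {
  std::vector<float> top_data(bottom_data.size());
  for (std::size_t i = 0; i < bottom_data.size(); ++i) {
    top_data[i] = std::max(bottom_data[i], 0.0f) +
                  negative_slope * std::min(bottom_data[i], 0.0f);
  }
  return top_data;
}

// Backward: bottom_diff[i] = top_diff[i] * f'(x[i]), where f'(x) is 1 for
// x > 0 and negative_slope for x <= 0 (the same split the listing uses).
std::vector<float> leaky_relu_backward(const std::vector<float>& bottom_data,
                                       const std::vector<float>& top_diff,
                                       float negative_slope) {
  assert(bottom_data.size() == top_diff.size());
  std::vector<float> bottom_diff(bottom_data.size());
  for (std::size_t i = 0; i < bottom_data.size(); ++i) {
    const float local_grad = (bottom_data[i] > 0.0f) ? 1.0f : negative_slope;
    bottom_diff[i] = top_diff[i] * local_grad;
  }
  return bottom_diff;
}
```

With `negative_slope` set to 0 the pair reduces to plain ReLU: negative inputs produce zero output and receive zero gradient. Note that an input of exactly 0 falls on the `x <= 0` side, so its gradient is scaled by `negative_slope`, just as in the listing.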