Building Deep Learning Networks Like Stacking Blocks: A Complete Code Walkthrough of Xception

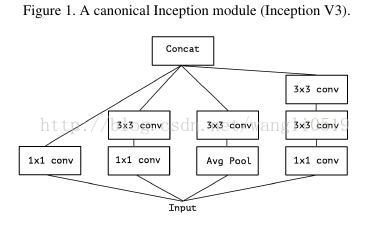

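Before looking at Xception, we first need to understand Inception. The Inception architecture was introduced by Szegedy et al. in 2014 as GoogLeNet (Inception V1) and was later refined into Inception V2, Inception V3, and most recently Inception-ResNet. Inception itself was inspired by the earlier Network-in-Network architecture. Since its introduction, Inception has been one of the best-performing model families on ImageNet as well as on Google's internal datasets, JFT in particular. The core building block of Inception-style models is the Inception module, which exists in several versions.

The figure above shows the canonical Inception module as found in Inception V3. An Inception model is a stack of such modules, in contrast to earlier VGG-style networks, which stack plain convolution layers.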

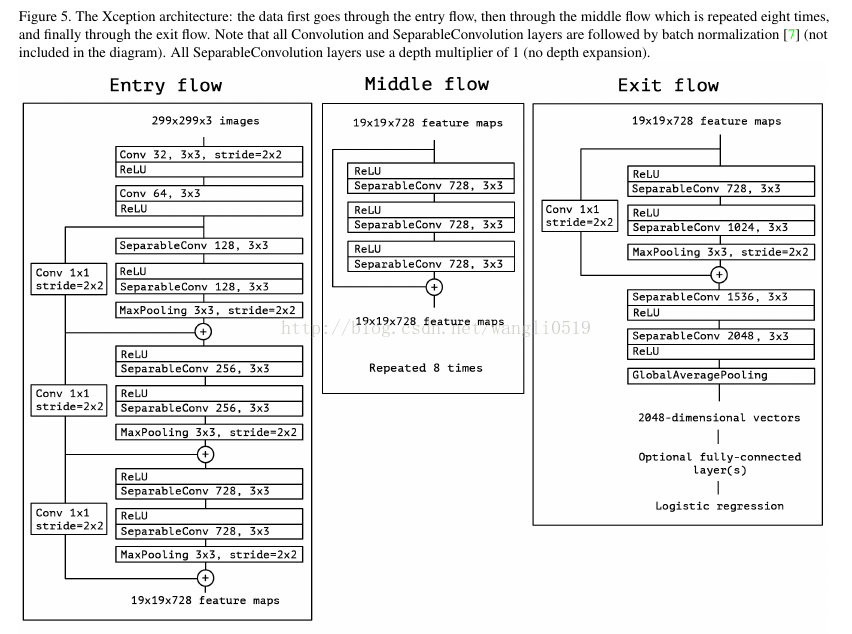

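Xception rests on the hypothesis that the mapping of cross-channel correlations and the mapping of spatial correlations in the feature maps of a convolutional neural network can be entirely decoupled. Because this is a more extreme version of the hypothesis behind the Inception architecture, the model is called Xception, short for "Extreme Inception".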
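One way to see what the decoupling buys is to count weights. The sketch below compares a regular convolution with a depthwise separable convolution, the layer type used throughout the code later in this article. The helper names `conv_params` and `separable_conv_params` are hypothetical, biases are ignored, and a depth multiplier of 1 is assumed.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights in a regular convolution (bias omitted)."""
    # Every output channel looks at every input channel through a
    # kernel x kernel window, so space and channels are mixed jointly.
    return kernel * kernel * in_ch * out_ch


def separable_conv_params(kernel, in_ch, out_ch):
    """Weights in a depthwise separable convolution (bias omitted).

    Assumes a depth multiplier of 1 (one spatial filter per input channel).
    """
    depthwise = kernel * kernel * in_ch  # spatial correlations only
    pointwise = in_ch * out_ch           # 1x1 conv: cross-channel only
    return depthwise + pointwise


# A 3x3 layer with 728 input and 728 output channels, the shape that
# the middle blocks of the network use.
regular = conv_params(3, 728, 728)
separable = separable_conv_params(3, 728, 728)
print('regular:', regular)
print('separable:', separable)
```

For this layer shape the separable form needs roughly one ninth of the weights, because the 3×3 spatial filtering is no longer repeated for every pair of input and output channels.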

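On ImageNet, Xception reaches a top-1 validation accuracy of 0.790 and a top-5 validation accuracy of 0.945. Note that the input image size is 299×299, unlike the 224×224 used by VGG16 and ResNet, and that the input preprocessing function is different as well. Also note that, at the time of writing, the model supports only TensorFlow as the backend.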
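The preprocessing difference is easiest to see on single pixel values. The sketch below applies, to plain floats, the same three arithmetic steps as the `preprocess_input` function defined later in this article; `scale_pixel` is a hypothetical helper name used only for illustration.

```python
def scale_pixel(value):
    """Map a pixel intensity from [0, 255] to [-1, 1]."""
    value = value / 255.0  # now in [0, 1]
    value = value - 0.5    # now in [-0.5, 0.5]
    value = value * 2.0    # now in [-1, 1]
    return value


for v in (0, 127.5, 255):
    print(v, '->', scale_pixel(v))
```

Black maps to -1, mid-gray to 0, and white to 1, so the network sees inputs centered on zero.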

For a detailed introduction, see the article on depthwise separable convolutions.

First, we import the dependencies:

    from __future__ import print_function
    from __future__ import absolute_import

    import warnings
    import numpy as np

    from keras.preprocessing import image
    from keras.models import Model
    from keras import layers
    from keras.layers import Dense
    from keras.layers import Input
    from keras.layers import BatchNormalization
    from keras.layers import Activation
    from keras.layers import Conv2D
    from keras.layers import SeparableConv2D
    from keras.layers import MaxPooling2D
    from keras.layers import GlobalAveragePooling2D
    from keras.layers import GlobalMaxPooling2D
    from keras.engine.topology import get_source_inputs
    from keras.utils.data_utils import get_file
    from keras import backend as K
    from keras.applications.imagenet_utils import decode_predictions
    from keras.applications.imagenet_utils import _obtain_input_shape

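Next, we specify where the pretrained weights come from. The weights can be downloaded from the web, or they can be weights you trained and saved yourself earlier; for how to save a trained model and its weights, see the Keras FAQ.

Download links for pretrained Xception weights are given here. As noted above, the model currently supports only the TensorFlow backend, so only TensorFlow weights are provided.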

    TF_WEIGHTS_PATH = 'https://github.com/fchollet/deep-learning-models/releases/download/v0.4/xception_weights_tf_dim_ordering_tf_kernels.h5'
    TF_WEIGHTS_PATH_NO_TOP = 'https://github.com/fchollet/deep-learning-models/releases/download/v0.4/xception_weights_tf_dim_ordering_tf_kernels_notop.h5'

If you have not read the linked article on depthwise separable convolutions, here is a brief review of the model structure.
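As a compact reference, the block layout can be written down as plain data. The filter counts and block names below are read off the `Xception` function later in this article; the grouping into entry, middle, and exit flows follows the naming used in the Xception paper and is not spelled out in the code. `XCEPTION_BLOCKS` and `count_separable_convs` are hypothetical names used only for this sketch.

```python
# (flow, block name, separable-conv filter counts per block, repeats)
XCEPTION_BLOCKS = [
    ('entry', 'block2', (128, 128), 1),
    ('entry', 'block3', (256, 256), 1),
    ('entry', 'block4', (728, 728), 1),
    ('middle', 'block5-12', (728, 728, 728), 8),
    ('exit', 'block13', (728, 1024), 1),
    ('exit', 'block14', (1536, 2048), 1),
]

# block1 is the only block built from regular convolutions (32 and 64 filters).
REGULAR_CONVS = 2


def count_separable_convs(blocks):
    """Total number of SeparableConv2D layers described by `blocks`."""
    return sum(len(filters) * repeats for _, _, filters, repeats in blocks)


sep = count_separable_convs(XCEPTION_BLOCKS)
print('separable convolutions:', sep)
print('with block1 convolutions:', sep + REGULAR_CONVS)
```

The count leaves out the 1×1 convolutions on the residual shortcuts, which only reshape the shortcut so that it can be added to the block output.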

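We now express this architecture in code as a single function, `Xception`. A few notes first: loading the pretrained weights is optional; the data format must follow the TensorFlow ordering (height, width, channels), which means setting `image_data_format` to `channels_last` in the Keras configuration file `keras.json`; the default input size is 299×299; the number of channels is fixed at 3, and the width and height must each be at least 71.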
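Before calling the function, it can help to confirm what `keras.json` actually says. The sketch below parses the text of such a file with the standard `json` module; `data_format_from_config` is a hypothetical helper, and the fallback to `channels_last` when the key is missing is an assumption made for this sketch.

```python
import json


def data_format_from_config(config_text):
    """Return the image_data_format stored in a keras.json document."""
    config = json.loads(config_text)
    # Assumption for this sketch: treat a missing key as 'channels_last'.
    return config.get('image_data_format', 'channels_last')


# A sample configuration; a real one lives at ~/.keras/keras.json.
sample = '{"backend": "tensorflow", "image_data_format": "channels_last"}'
print(data_format_from_config(sample))
```

To check a real installation, read `~/.keras/keras.json` and pass its contents to the helper; any value other than `channels_last` triggers the warning branch in the function.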

    def Xception(include_top=True, weights='imagenet',
                 input_tensor=None, input_shape=None,
                 pooling=None,
                 classes=1000):
        # Validate the arguments before building any layers.
        if weights not in {'imagenet', None}:
            raise ValueError('The `weights` argument should be either '
                             '`None` (random initialization) or `imagenet` '
                             '(pre-training on ImageNet).')

        if weights == 'imagenet' and include_top and classes != 1000:
            raise ValueError('If using `weights` as imagenet with `include_top`'
                             ' as true, `classes` should be 1000')

        if K.backend() != 'tensorflow':
            raise RuntimeError('The Xception model is only available with '
                               'the TensorFlow backend.')

        # Switch to channels_last while building; the previous setting
        # is restored just before the function returns.
        if K.image_data_format() != 'channels_last':
            warnings.warn('The Xception model is only available for the '
                          'input data format "channels_last" '
                          '(width, height, channels). '
                          'However your settings specify the default '
                          'data format "channels_first" (channels, width, height). '
                          'You should set `image_data_format="channels_last"` in your Keras '
                          'config located at ~/.keras/keras.json. '
                          'The model being returned right now will expect inputs '
                          'to follow the "channels_last" data format.')
            K.set_image_data_format('channels_last')
            old_data_format = 'channels_first'
        else:
            old_data_format = None

        # Resolve the input shape (default 299x299, minimum 71x71).
        input_shape = _obtain_input_shape(input_shape,
                                          default_size=299,
                                          min_size=71,
                                          data_format=K.image_data_format(),
                                          include_top=include_top)

        # Create the input layer, or wrap the tensor supplied by the caller.
        if input_tensor is None:
            img_input = Input(shape=input_shape)
        else:
            if not K.is_keras_tensor(input_tensor):
                img_input = Input(tensor=input_tensor, shape=input_shape)
            else:
                img_input = input_tensor

        # Block 1: two regular convolutions.
        x = Conv2D(32, (3, 3), strides=(2, 2), use_bias=False, name='block1_conv1')(img_input)
        x = BatchNormalization(name='block1_conv1_bn')(x)
        x = Activation('relu', name='block1_conv1_act')(x)
        x = Conv2D(64, (3, 3), use_bias=False, name='block1_conv2')(x)
        x = BatchNormalization(name='block1_conv2_bn')(x)
        x = Activation('relu', name='block1_conv2_act')(x)

        # Block 2: the strided 1x1 convolution makes the shortcut match
        # the shape of the pooled block output.
        residual = Conv2D(128, (1, 1), strides=(2, 2),
                          padding='same', use_bias=False)(x)
        residual = BatchNormalization()(residual)

        x = SeparableConv2D(128, (3, 3), padding='same', use_bias=False, name='block2_sepconv1')(x)
        x = BatchNormalization(name='block2_sepconv1_bn')(x)
        x = Activation('relu', name='block2_sepconv2_act')(x)
        x = SeparableConv2D(128, (3, 3), padding='same', use_bias=False, name='block2_sepconv2')(x)
        x = BatchNormalization(name='block2_sepconv2_bn')(x)

        x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block2_pool')(x)
        x = layers.add([x, residual])

        # Block 3.
        residual = Conv2D(256, (1, 1), strides=(2, 2),
                          padding='same', use_bias=False)(x)
        residual = BatchNormalization()(residual)

        x = Activation('relu', name='block3_sepconv1_act')(x)
        x = SeparableConv2D(256, (3, 3), padding='same', use_bias=False, name='block3_sepconv1')(x)
        x = BatchNormalization(name='block3_sepconv1_bn')(x)
        x = Activation('relu', name='block3_sepconv2_act')(x)
        x = SeparableConv2D(256, (3, 3), padding='same', use_bias=False, name='block3_sepconv2')(x)
        x = BatchNormalization(name='block3_sepconv2_bn')(x)

        x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block3_pool')(x)
        x = layers.add([x, residual])

        # Block 4.
        residual = Conv2D(728, (1, 1), strides=(2, 2),
                          padding='same', use_bias=False)(x)
        residual = BatchNormalization()(residual)

        x = Activation('relu', name='block4_sepconv1_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block4_sepconv1')(x)
        x = BatchNormalization(name='block4_sepconv1_bn')(x)
        x = Activation('relu', name='block4_sepconv2_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block4_sepconv2')(x)
        x = BatchNormalization(name='block4_sepconv2_bn')(x)

        x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block4_pool')(x)
        x = layers.add([x, residual])

        # Blocks 5 to 12: eight identical blocks with an identity shortcut.
        for i in range(8):
            residual = x
            prefix = 'block' + str(i + 5)

            x = Activation('relu', name=prefix + '_sepconv1_act')(x)
            x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv1')(x)
            x = BatchNormalization(name=prefix + '_sepconv1_bn')(x)
            x = Activation('relu', name=prefix + '_sepconv2_act')(x)
            x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv2')(x)
            x = BatchNormalization(name=prefix + '_sepconv2_bn')(x)
            x = Activation('relu', name=prefix + '_sepconv3_act')(x)
            x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name=prefix + '_sepconv3')(x)
            x = BatchNormalization(name=prefix + '_sepconv3_bn')(x)

            x = layers.add([x, residual])

        # Block 13: the last block with a strided shortcut.
        residual = Conv2D(1024, (1, 1), strides=(2, 2),
                          padding='same', use_bias=False)(x)
        residual = BatchNormalization()(residual)

        x = Activation('relu', name='block13_sepconv1_act')(x)
        x = SeparableConv2D(728, (3, 3), padding='same', use_bias=False, name='block13_sepconv1')(x)
        x = BatchNormalization(name='block13_sepconv1_bn')(x)
        x = Activation('relu', name='block13_sepconv2_act')(x)
        x = SeparableConv2D(1024, (3, 3), padding='same', use_bias=False, name='block13_sepconv2')(x)
        x = BatchNormalization(name='block13_sepconv2_bn')(x)

        x = MaxPooling2D((3, 3), strides=(2, 2), padding='same', name='block13_pool')(x)
        x = layers.add([x, residual])

        # Block 14: two separable convolutions without a shortcut.
        x = SeparableConv2D(1536, (3, 3), padding='same', use_bias=False, name='block14_sepconv1')(x)
        x = BatchNormalization(name='block14_sepconv1_bn')(x)
        x = Activation('relu', name='block14_sepconv1_act')(x)

        x = SeparableConv2D(2048, (3, 3), padding='same', use_bias=False, name='block14_sepconv2')(x)
        x = BatchNormalization(name='block14_sepconv2_bn')(x)
        x = Activation('relu', name='block14_sepconv2_act')(x)

        # Classification head, or optional global pooling for feature extraction.
        if include_top:
            x = GlobalAveragePooling2D(name='avg_pool')(x)
            x = Dense(classes, activation='softmax', name='predictions')(x)
        else:
            if pooling == 'avg':
                x = GlobalAveragePooling2D()(x)
            elif pooling == 'max':
                x = GlobalMaxPooling2D()(x)

        # Make sure the model starts from the source inputs of `input_tensor`.
        if input_tensor is not None:
            inputs = get_source_inputs(input_tensor)
        else:
            inputs = img_input

        model = Model(inputs, x, name='xception')

        # Download (and cache) the pretrained weights, then load them.
        if weights == 'imagenet':
            if include_top:
                weights_path = get_file('xception_weights_tf_dim_ordering_tf_kernels.h5',
                                        TF_WEIGHTS_PATH,
                                        cache_subdir='models')
            else:
                weights_path = get_file('xception_weights_tf_dim_ordering_tf_kernels_notop.h5',
                                        TF_WEIGHTS_PATH_NO_TOP,
                                        cache_subdir='models')
            model.load_weights(weights_path)

        # Restore the data format that was active before the call.
        if old_data_format:
            K.set_image_data_format(old_data_format)
        return model
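
To see how the strided layers in the function above shrink the feature map, one spatial dimension can be traced by hand. The sketch below assumes the usual output-size formulas for `padding='valid'` and `padding='same'` (TensorFlow conventions); the helper names are hypothetical and not part of Keras.

```python
import math


def valid_conv(size, kernel, stride=1):
    """Output size of a convolution with padding='valid'."""
    return (size - kernel) // stride + 1


def same_stride2(size):
    """Output size of a stride-2 layer with padding='same'."""
    return math.ceil(size / 2)


def xception_output_size(size):
    """Trace one spatial dimension through the layers of the function above."""
    size = valid_conv(size, 3, stride=2)  # block1_conv1
    size = valid_conv(size, 3)            # block1_conv2
    for _ in range(3):                    # block2_pool, block3_pool, block4_pool
        size = same_stride2(size)
    # Blocks 5 to 12 use stride 1 with padding='same': size unchanged.
    size = same_stride2(size)             # block13_pool
    return size


print(xception_output_size(299))
print(xception_output_size(71))
```

Under these formulas the default 299×299 input reaches the last block as a 10×10 map with 2048 channels, and the minimum accepted size of 71 still leaves a 3×3 map.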

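Next, we define the input preprocessing function, which scales pixel values from [0, 255] to [-1, 1]:

    def preprocess_input(x):
        # Operates in place, so x must be a float array.
        x /= 255.
        x -= 0.5
        x *= 2.
        return x

Finally, here is the script's main entry point: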

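    if __name__ == '__main__':
        model = Xception(include_top=True, weights='imagenet')

        # Load a test image at the network's default input size.
        img_path = 'elephant.jpg'
        img = image.load_img(img_path, target_size=(299, 299))
        x = image.img_to_array(img)
        x = np.expand_dims(x, axis=0)  # add the batch dimension
        x = preprocess_input(x)
        print('Input image shape:', x.shape)

        preds = model.predict(x)
        print(np.argmax(preds))
        print('Predicted:', decode_predictions(preds, 1))

In short, the Xception architecture is a linear stack of depthwise separable convolution layers with residual connections. It absorbs the strengths of several earlier models, is easy to define and modify for a model of this complexity, and achieves very good results in practice.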