Logistic Regression: A Python Implementation

阿新 • Published: 2019-02-19

Logistic Regression

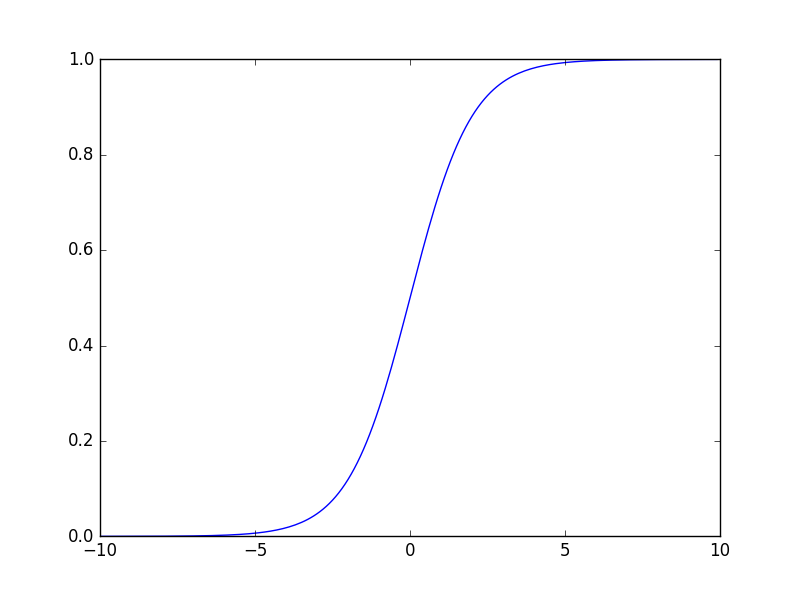

The logistic function

Its graph looks like this:

How to plot it

>>> import numpy as np
>>> x = np.arange(-10, 10, 0.1)
>>> y = 1 / (1 + np.exp(-x))
>>> import matplotlib.pyplot as plt
>>> plt.plot(x, y)
[<matplotlib.lines.Line2D object at 0x00000000054FFE10>]
>>> plt.show()

The curve rises steeply near 0, and that is what lets it turn a regression problem into a classification problem.
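To make the link to classification concrete, here is a minimal sketch. It assumes a cutoff of 0.5 on the logistic output, which is equivalent to checking whether the input is positive. The helper name `predict_label` and the sample scores are illustrative and not part of the original code.

```python
import numpy as np

def sigmoid(z):
    # Logistic function, same formula as in the plot above
    return 1.0 / (1.0 + np.exp(-z))

def predict_label(z, threshold=0.5):
    # Hypothetical helper: map real-valued scores to 0/1 labels
    return (sigmoid(z) > threshold).astype(int)

z = np.array([-4.0, -0.5, 0.5, 4.0])
labels = predict_label(z)
print(labels)  # [0 0 1 1]
```

Because the logistic function equals 0.5 exactly at 0, a classifier only needs to test the sign of the linear score.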

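Data generation in ml/dt.py

import numpy as np

def createD3():
    # Two 2-D Gaussian clusters of 10 points each: class 0 around 3, class 1 around 18
    np.random.seed(101)
    ndim = 2
    n2 = 10
    a = 3 + 5 * np.random.randn(n2, ndim)
    b = 18 + 4 * np.random.randn(n2, ndim)
    X = np.concatenate((a, b))
    ay = np.zeros(n2)
    by = np.ones(n2)
    ylabel = np.concatenate((ay, by))
    # The return value is cut off in the source; a dict with these two keys
    # is assumed because the callers read ds['X'] and ds['ylabel'].
    return {'X': X, 'ylabel': ylabel}

Plotting in ml/fig.py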

# -*- coding: UTF-8 -*-
'''
Created on 2016-4-24
@author: Administrator
'''
import numpy as np
import operator
import matplotlib.pyplot as plt

# Plotting
def plotData(ds, type='o'):
    X = ds['X']
    y = ds['ylabel']
    n = X.shape[0]
    cn = len(np.unique(y))
    cs = ['r'
    # (the rest of this function is missing from the source)

Classifier code

# -*- coding: UTF-8 -*-
'''
Logistic regression
Created on 2016-4-26
@author: taiji1985
'''
import numpy as np
import operator
import matplotlib.pyplot as plt

def sigmoid(X):
    return 1.0 / (1 + np.exp(-X))

# Build the augmented matrix by appending a final column of ones
def augment(X):
    n, ndim = X.shape
    a = np.mat(np.ones((n, 1)))
    return np.concatenate((X, a), axis=1)

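def classify(X, w):
    X = np.mat(X)
    w = np.mat(w)
    # Make sure w is a column vector
    if w.shape[0] < w.shape[1]:
        w = w.T
    # Augment
    X = augment(X)
    d = X * w
    # Predict class 1 where the linear score is positive, i.e. sigmoid(d) > 0.5
    r = np.zeros((X.shape[0], 1))
    r[np.asarray(d) > 0] = 1
    return r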

# Learn by gradient descent
# alpha: learning rate
def learn(dataset, alpha=0.001):
    X = dataset['X']
    ylabel = dataset['ylabel']
    n, ndim = X.shape
    cls_label = np.unique(ylabel)
    cn = len(cls_label)
    X = np.mat(X)
    ylabel = np.mat(ylabel).T
    # Build the augmented matrix
    X = augment(X)
    ndim += 1
    max_c = 500  # iteration cap; defined here but not used by the loop below
    w = np.ones((ndim, 1))
    i = 0
    ep = 0.0001
    cha = 1
    # Stop once the absolute sum of the update is no larger than ep
    while cha > ep:
        # Compute y = wx + b
        ypred = X * w
        # Apply the logistic function
        ypred = sigmoid(ypred)
        # Compute the error
        error = ylabel - ypred
        cha = alpha * X.T * error
        w = w + cha
        cha = np.abs(np.sum(cha))
        print(i, w.T)
        i = i + 1
    return w
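The update `w = w + alpha * X.T * error` can be read as one step of gradient ascent on the log-likelihood (equivalently, gradient descent on its negative). A short derivation, assuming labels $y_i \in \{0, 1\}$, augmented inputs $x_i$ with a trailing 1, and $\sigma$ denoting the logistic function; the symbols are introduced here for illustration and do not appear in the original code:

```latex
\begin{align}
\ell(w) &= \sum_{i=1}^{n} \Big[ y_i \log \sigma(w^\top x_i)
          + (1 - y_i) \log\big(1 - \sigma(w^\top x_i)\big) \Big] \\
\nabla_w \ell(w) &= \sum_{i=1}^{n} \big( y_i - \sigma(w^\top x_i) \big)\, x_i
          = X^\top \big( y - \sigma(Xw) \big) \\
w &\leftarrow w + \alpha\, X^\top \big( y - \sigma(Xw) \big)
\end{align}
```

Here $y - \sigma(Xw)$ corresponds to `error` in the code and $\alpha$ to `alpha`.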

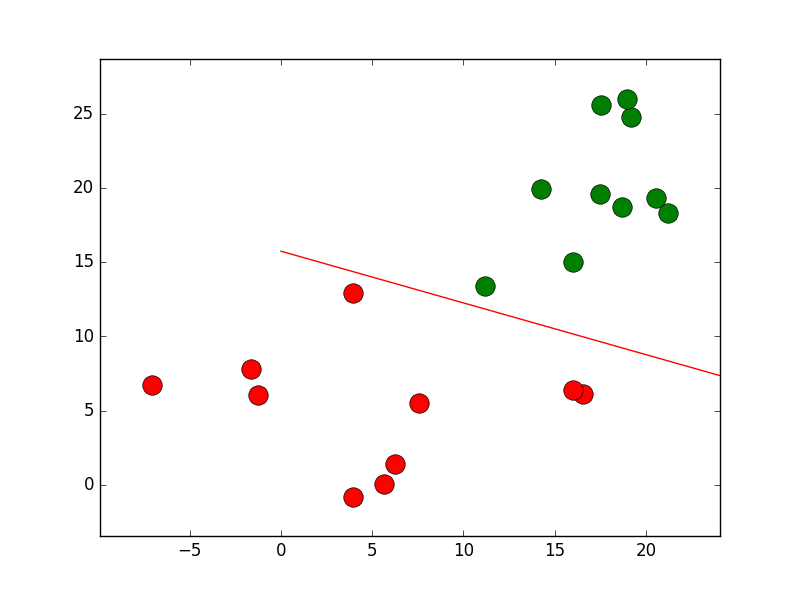
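As a sanity check on the update direction used in `learn`, the sketch below compares `X.T @ (y - sigmoid(X @ w))` with a finite-difference gradient of the log-likelihood. It uses plain NumPy arrays rather than `np.mat`. The function names `log_likelihood`, `analytic_grad`, and `numeric_grad` and the random toy data are assumptions made for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_likelihood(w, X, y):
    # Bernoulli log-likelihood of labels y under the logistic model
    p = sigmoid(X @ w)
    return np.sum(y * np.log(p) + (1 - y) * np.log(1 - p))

def analytic_grad(w, X, y):
    # Same direction as X.T * error in learn (before scaling by alpha)
    return X.T @ (y - sigmoid(X @ w))

def numeric_grad(w, X, y, h=1e-6):
    # Central finite differences, one coordinate at a time
    g = np.zeros_like(w)
    for k in range(w.size):
        step = np.zeros_like(w)
        step[k] = h
        g[k] = (log_likelihood(w + step, X, y)
                - log_likelihood(w - step, X, y)) / (2 * h)
    return g

rng = np.random.default_rng(0)
# Toy data: 8 points in 2-D, augmented with a trailing column of ones
X = np.hstack([rng.normal(size=(8, 2)), np.ones((8, 1))])
y = (rng.random(8) > 0.5).astype(float)
w = np.array([0.3, -0.2, 0.1])

max_diff = np.max(np.abs(analytic_grad(w, X, y) - numeric_grad(w, X, y)))
print(max_diff)
```

A very small `max_diff` indicates that the two gradients agree on this toy data.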

Test

'''
Created on 2016-4-26
@author: Administrator
'''
from ml import lsd
from ml import dt
from ml import fig
import numpy as np
import matplotlib.pyplot as plt

ds = dt.createD3()
w = lsd.learn(ds, 0.01)

# Draw the decision boundary w[0]*x + w[1]*y + w[2] = 0 through its axis intercepts
w0, w1, w2 = np.asarray(w).ravel()
lx = [0, -w2 / w0]
ly = [-w2 / w1, 0]
plt.figure()
fig.plotData(ds, 'o')
plt.plot(lx, ly)
plt.show()

ypred = lsd.classify(ds['X'], w)
ylabel = np.mat(ds['ylabel']).T
print(ypred)
print(ylabel)
# Number of misclassified points, total number of points, and error rate
dist = np.sum(ypred != ylabel)
print(dist, len(ylabel), 1.0 * dist / len(ylabel))
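The `lx` and `ly` values in the test script are the axis intercepts of the decision boundary `w[0]*x + w[1]*y + w[2] = 0`. The sketch below isolates that step and checks that both points give a linear score of zero. The helper name `boundary_intercepts` and the sample weights are hypothetical, and the sketch assumes `w[0]` and `w[1]` are both nonzero.

```python
import numpy as np

def boundary_intercepts(w):
    # Hypothetical helper: two points on the line w0*x + w1*y + w2 = 0
    w0, w1, w2 = np.asarray(w, dtype=float).ravel()
    lx = [0.0, -w2 / w0]   # x-coordinates: y-axis intercept, then x-axis intercept
    ly = [-w2 / w1, 0.0]   # matching y-coordinates
    return lx, ly

# Sample weights as a column vector, the shape that learn returns
w = np.array([[1.0], [2.0], [-4.0]])
lx, ly = boundary_intercepts(w)

# Both points should lie on the boundary, so their linear score is 0
scores = [w[0, 0] * x + w[1, 0] * y + w[2, 0] for x, y in zip(lx, ly)]
print(lx, ly, scores)
```

Points on one side of this line get a positive score and are labeled 1 by `classify`; points on the other side are labeled 0.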