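Study Notes: Convolutional Neural Networks with PyTorch

This post is my own set of study notes. The reference material is the book 《深度學習入門之——PyTorch》 (Getting Started with Deep Learning: PyTorch).

PyTorch Chinese site: https://www.pytorchtutorial.com/

On transposed convolution (deconvolution): https://github.com/vdumoulin/conv_arithmetic/blob/master/README.md

About the `dilation` argument of the convolution and transposed-convolution functions: "dilation (int or tuple, optional): spacing between kernel elements". In effect, it makes the kernel sparse.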
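To make the "sparse kernel" idea concrete, here is a small sketch in plain Python that computes how wide a dilated kernel spans and what output size follows. The helper names are my own (not PyTorch functions); the formula is the one given in the `nn.Conv2d` documentation.

```python
def effective_kernel_size(kernel_size, dilation):
    """Span covered by a kernel whose elements sit `dilation` apart."""
    return dilation * (kernel_size - 1) + 1


def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one dimension (floor division, as nn.Conv2d does)."""
    span = effective_kernel_size(kernel_size, dilation)
    return (size + 2 * padding - span) // stride + 1


# A 3x3 kernel on a 28-pixel-wide input, with increasing dilation.
for d in (1, 2, 3):
    print(d, effective_kernel_size(3, d), conv_output_size(28, 3, dilation=d))
```

With dilation 2, the nine weights of a 3x3 kernel are spread over a 5x5 window, so the kernel sees a wider area without gaining any parameters.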

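A fully connected network has too many parameters. For example, with a 28*28 input image, a single neuron in the first hidden layer already has 28*28=784 weights. With more hidden layers or a larger input image, the parameter count becomes enormous.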
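A quick back-of-the-envelope count shows how fast this grows. The hidden width of 1000 and the 224*224*3 image size below are values I picked for illustration only.

```python
def dense_layer_params(n_inputs, n_neurons):
    """Weights plus one bias per neuron in a fully connected layer."""
    return n_inputs * n_neurons + n_neurons


mnist_inputs = 28 * 28   # 784 weights per neuron
hidden = 1000            # assumed hidden-layer width

small = dense_layer_params(mnist_inputs, hidden)
large = dense_layer_params(224 * 224 * 3, hidden)  # assumed larger RGB image

print(mnist_inputs)  # 784
print(small)         # 785000
print(large)         # 150529000
```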

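A convolutional neural network arranges its neurons in a 3D volume (width, height, depth). Convolutional layers and fully connected layers have parameters, while activation layers and pooling layers do not. The parameters are updated with gradient descent (or a variant such as Adam).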
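One way to check which layer types carry parameters is to count them directly. This is a minimal sketch; `count_params` is my own helper and the layer sizes are arbitrary.

```python
import torch.nn as nn


def count_params(module):
    """Total number of learnable scalars in a module."""
    return sum(p.numel() for p in module.parameters())


layers = {
    'conv': nn.Conv2d(3, 32, kernel_size=3),  # 32*(3*3*3) weights + 32 biases
    'fc': nn.Linear(2048, 512),               # 2048*512 weights + 512 biases
    'relu': nn.ReLU(),
    'pool': nn.MaxPool2d(2, 2),
}
counts = {name: count_params(layer) for name, layer in layers.items()}
print(counts)
```

The activation and pooling entries come out as zero, which matches the statement above.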

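The filter parameters in a convolutional layer are learned.

The spatial extent of the input that a neuron connects to is called the neuron's receptive field. The size of the receptive field equals the filter size. The depth of the receptive field must match the depth of the input, and the depth of the output equals the number of filters.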
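These depth rules can be read off the weight tensor of an `nn.Conv2d` layer. The sizes below (3 input channels, 8 filters of size 5, a 32*32 input) are assumptions for illustration.

```python
import torch
import torch.nn as nn

conv = nn.Conv2d(in_channels=3, out_channels=8, kernel_size=5)
x = torch.randn(1, 3, 32, 32)   # batch of one 3-channel 32x32 image
y = conv(x)

# Weight layout: (number of filters, input depth, filter height, filter width)
print(conv.weight.shape)  # torch.Size([8, 3, 5, 5])
# Output depth equals the number of filters.
print(y.shape)            # torch.Size([1, 8, 28, 28])
```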

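CNN: parameter sharing and sparse (local) connectivity

When configuring the network, mind the stride constraint: for input width W, filter size F, padding P, and stride S, the output width is (W - F + 2P)/S + 1, so the stride should be chosen so that this is an integer.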
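The stride constraint can be checked with a few lines of plain Python. Here W is the input width, F the filter size, P the padding, and S the stride; the function name is my own. Note that PyTorch itself does not reject a stride that fails this check, it rounds the output size down.

```python
def conv_output_width(w, f, p, s):
    """Return the output width, or None if the stride does not fit evenly."""
    numerator = w - f + 2 * p
    if numerator % s != 0:
        return None
    return numerator // s + 1


print(conv_output_width(10, 3, 0, 1))  # 8
print(conv_output_width(10, 3, 0, 2))  # None, since (10 - 3) / 2 is not an integer
print(conv_output_width(10, 3, 1, 3))  # 4
```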

Parameter sharing effectively reduces the number of parameters.

Now let's build a simple convolutional neural network.
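To get a feel for the saving, the sketch below compares a convolutional layer (one filter reused at every position) with a hypothetical locally connected layer that has a separate filter at every output position. The layer sizes are assumptions chosen to match the first layer of a 32*32 RGB network.

```python
in_depth, n_filters, k = 3, 32, 3
out_h = out_w = 32  # output size with stride 1 and padding 1 on a 32x32 input

weights_per_filter = k * k * in_depth + 1  # +1 for the bias

# With sharing: each filter's weights are reused at every spatial position.
shared = n_filters * weights_per_filter

# Without sharing: every output position of every filter has its own weights.
unshared = n_filters * out_h * out_w * weights_per_filter

print(shared)              # 896
print(unshared)            # 917504
print(unshared // shared)  # 1024
```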

import torch
import torch.nn as nn


# define the model
class SimpleCNN(nn.Module):
    """A simple CNN: three conv/ReLU/pool blocks followed by three fully connected layers."""

    def __init__(self):
        super(SimpleCNN, self).__init__()
        layer1 = nn.Sequential()  # container class; basic modules can be added to it
        layer1.add_module('conv1', nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, stride=1, padding=1))
        layer1.add_module('relu1', nn.ReLU(True))
        layer1.add_module('pool1', nn.MaxPool2d(2, 2))
        self.layer1 = layer1

        layer2 = nn.Sequential()
        layer2.add_module('conv2', nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=1, padding=1))
        layer2.add_module('relu2', nn.ReLU(True))
        layer2.add_module('pool2', nn.MaxPool2d(2, 2))
        self.layer2 = layer2

        layer3 = nn.Sequential()
        layer3.add_module('conv3', nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1))
        layer3.add_module('relu3', nn.ReLU(True))
        layer3.add_module('pool3', nn.MaxPool2d(2, 2))
        self.layer3 = layer3

        layer4 = nn.Sequential()
        layer4.add_module('fc1', nn.Linear(2048, 512))  # 2048 = 128*4*4, which corresponds to a 3*32*32 input
        layer4.add_module('fc_relu1', nn.ReLU(True))
        layer4.add_module('fc2', nn.Linear(512, 64))
        layer4.add_module('fc_relu2', nn.ReLU(True))
        layer4.add_module('fc3', nn.Linear(64, 10))
        self.layer4 = layer4

    def forward(self, x):
        conv1 = self.layer1(x)
        conv2 = self.layer2(conv1)
        conv3 = self.layer3(conv2)
        fc_input = conv3.view(conv3.size(0), -1)  # flatten each sample into a 1D vector
        fc_out = self.layer4(fc_input)
        return fc_out


model = SimpleCNN()
print(model)

Output after running:

for param in model.named_parameters():  # yields (name, parameter) pairs, one per weight or bias tensor
    print(param[0])

The result is shown in the figure below.
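The 2048 inputs of `fc1` only work out for one input size. The sketch below traces the spatial size through the three conv/pool blocks in plain Python, assuming a 32*32 input (my assumption; the notes do not state the input size). The helper names are mine.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output size of max pooling along one dimension."""
    return (size - kernel) // stride + 1


size = 32  # assumed input height and width
for channels in (32, 64, 128):
    size = conv_out(size, kernel=3, stride=1, padding=1)  # 3x3 conv with padding 1 keeps the size
    size = pool_out(size)                                 # 2x2 pooling halves it
    print(channels, size, size)

flat_features = 128 * size * size
print(flat_features)  # 2048
```

With any other input size the flattened tensor would not match `nn.Linear(2048, 512)` and the forward pass would fail with a shape error.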
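For reference, here is a small stand-alone sketch of what `named_parameters()` yields. The container mirrors `layer1` of the model above; the input and output channel counts are just copied from it.

```python
import torch.nn as nn

block = nn.Sequential()
block.add_module('conv1', nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1))
block.add_module('relu1', nn.ReLU(True))
block.add_module('pool1', nn.MaxPool2d(2, 2))

# Each entry is a (name, parameter) pair; the name is built from the module names.
names = []
for name, param in block.named_parameters():
    names.append(name)
    print(name, tuple(param.shape))
```

Only the convolution contributes entries (`conv1.weight` and `conv1.bias`); the ReLU and pooling modules have nothing to list.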

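Adding a 1*1 convolutional layer can reduce the depth (number of channels) of its input, which cuts the number of parameters and therefore the complexity of the network.

PyTorch's torchvision.models contains many predefined networks, and most of them come with pretrained parameters. For details, see: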
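A rough parameter count illustrates the saving. The channel numbers (256 in, 64 in the middle, 256 out) are assumptions of mine for illustration, not values from the book.

```python
def conv_params(in_ch, out_ch, k):
    """Weights plus biases of a k x k convolution."""
    return out_ch * (in_ch * k * k + 1)


# A 3x3 convolution applied directly to a 256-channel input.
direct = conv_params(256, 256, 3)

# A 1x1 convolution first reduces 256 channels to 64, then the 3x3 runs on 64.
reduced = conv_params(256, 64, 1) + conv_params(64, 256, 3)

print(direct)   # 590080
print(reduced)  # 164160
```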

https://www.pytorchtutorial.com/docs/torchvision/torchvision-models/

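The demo below classifies the handwritten digits in the MNIST dataset. MNIST is a dataset of handwritten digits covering the 10 digits 0~9, stored as 28*28 grayscale images. It is commonly split into 55000 training images, 5000 validation images, and 10000 test images (torchvision's datasets.MNIST provides the 60000 training images as a single set, without the validation split).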

import torch
from torch import optim
import torch.nn as nn
from torch.autograd import Variable  # deprecated since PyTorch 0.4; returns a plain tensor
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

torch.manual_seed(1)  # reproducible

# Hyperparameters
batch_size = 50
learning_rate = 1e-3
EPOCH = 1

# Data preprocessing: Compose chains all preprocessing steps together
# ToTensor(): converts the image to a float tensor scaled to [0, 1]
# Normalize(mean, std): subtracts the mean and divides by the standard deviation
data_tf = transforms.Compose([transforms.ToTensor(), transforms.Normalize([0.5], [0.5])])

# download MNIST
train_dataset = datasets.MNIST(root='./MNIST_data', train=True, transform=data_tf, download=True)
test_data = datasets.MNIST(root='./MNIST_data', train=False, transform=data_tf)
train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)  # shuffle the data

##########################################################
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=16, kernel_size=3, stride=1, padding=0),  # output: 16*26*26
            nn.BatchNorm2d(16),
            nn.ReLU(),  # inplace=True would overwrite the input tensor
        )
        self.layer2 = nn.Sequential(
            nn.Conv2d(in_channels=16, out_channels=32, kernel_size=3, stride=1, padding=0),  # 32*24*24
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),  # 32*12*12
        )
        self.layer3 = nn.Sequential(
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, stride=1, padding=0),  # 64*10*10
            nn.BatchNorm2d(64),
            nn.ReLU(),
        )
        self.layer4 = nn.Sequential(
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=0),  # 128*8*8
            nn.BatchNorm2d(128),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),  # 128*4*4
        )
        self.fc = nn.Sequential(
            nn.Linear(128 * 4 * 4, 1024),
            nn.ReLU(),
            nn.Linear(1024, 128),
            nn.ReLU(),
            nn.Linear(128, 10),
        )

    def forward(self, x):
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = x.view(x.size(0), -1)  # flatten each sample into a 1D vector
        output = self.fc(x)
        return output
##########################################################

# train
model = CNN()
print(model)
if torch.cuda.is_available():
    model = model.cuda()

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=learning_rate)

for epoch in range(EPOCH):
    for step, (img, label) in enumerate(train_loader):
        # Tensors can be fed to the model directly; wrapping them in Variable
        # (and its old volatile flag) is no longer needed since PyTorch 0.4.
        if torch.cuda.is_available():
            img = img.cuda()
            label = label.cuda()
        output = model(img)
        loss = criterion(output, label)
        # reset gradients
        optimizer.zero_grad()
        # backward pass
        loss.backward()
        # update parameters
        optimizer.step()

# test
model.eval()  # evaluation mode
# Dropout is turned off at test time, and BatchNorm uses the statistics retained from training,
# so the model should be switched to evaluation mode before testing.

The code above had some problems when I ran it, so a new version is given below.

import torch
import torch.nn as nn
import torchvision  # popular datasets, model architectures, and common image transforms
import torchvision.transforms as transforms

# Device configuration
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

# Hyperparameters
num_epochs = 6
num_classes = 10  # digits 0~9
batch_size = 100
learning_rate = 0.001

# MNIST dataset
train_dataset = torchvision.datasets.MNIST(root='./MNIST_data', train=True, transform=transforms.ToTensor(), download=True)
test_dataset = torchvision.datasets.MNIST(root='./MNIST_data', train=False, transform=transforms.ToTensor())

# Data loaders: group the samples into batches of batch_size and return them as tensors
# that can be fed to the model directly.
train_loader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
test_loader = torch.utils.data.DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)

##########################################################
# define the CNN
class ConvNet(nn.Module):
    def __init__(self, num_classes=10):
        super(ConvNet, self).__init__()  # input: 1*28*28
        self.layer1 = nn.Sequential(
            nn.Conv2d(in_channels=1, out_channels=16, kernel_size=5, stride=1, padding=2),  # 16*28*28
            nn.BatchNorm2d(16),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)  # 16*14*14
        )
        self.layer2 = nn.Sequential(
            nn.Conv2d(in_channels=16, out_channels=32, kernel_size=5, stride=1, padding=2),  # 32*14*14
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)  # 32*7*7
        )
        self.fc = nn.Linear(7 * 7 * 32, num_classes)

    def forward(self, x):
        out = self.layer1(x)
        out = self.layer2(out)
        out = out.reshape(out.size(0), -1)  # flatten each sample into a 1D vector
        out = self.fc(out)
        return out


model = ConvNet(num_classes).to(device)  # move the model to the GPU if available, otherwise keep it on the CPU

# loss and optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

# train the model
total_step = len(train_loader)  # number of batches per epoch; each iteration processes batch_size samples
for epoch in range(num_epochs):
    for i, (images, labels) in enumerate(train_loader):
        images = images.to(device)
        labels = labels.to(device)

        # forward pass
        outputs = model(images)
        loss = criterion(outputs, labels)

        # backward and optimize
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if (i + 1) % 100 == 0:
            print('Epoch[{}/{}],Step[{}/{}],Loss:{:.4f}'
                  .format(epoch + 1, num_epochs, i + 1, total_step, loss.item()))

##########################################################
# test the model
model.eval()  # eval mode (batchnorm uses moving mean/variance instead of mini-batch mean/variance)
with torch.no_grad():  # disable gradient tracking
    correct = 0
    total = 0
    for images, labels in test_loader:
        images = images.to(device)
        labels = labels.to(device)
        outputs = model(images)
        _, predicted = torch.max(outputs, 1)  # max over dim 1 returns (values, indices); the indices are the predicted classes
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

    print('Test Accuracy of the model on the 10000 test images: {} %'.format(100 * correct / total))

# Save the model checkpoint
# torch.save(model.state_dict(), 'model.ckpt')

(Screenshot of the run results)

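About DataLoader (https://blog.csdn.net/u014380165/article/details/79058479)

This interface takes the output of a custom data-reading interface, or of one of PyTorch's built-in data-reading interfaces, and packs it into Tensors according to the batch size. In older PyTorch versions the batches then only needed to be wrapped in a Variable to serve as model input; since PyTorch 0.4 the tensors can be passed to the model directly.

About ReLU(inplace=True)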
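A small sketch of the batching behaviour, using made-up data in place of MNIST (10 blank 28*28 images with labels 0 to 9, which is my own stand-in):

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

images = torch.zeros(10, 1, 28, 28)  # stand-in for 10 grayscale images
labels = torch.arange(10)            # stand-in labels 0..9
loader = DataLoader(TensorDataset(images, labels), batch_size=4, shuffle=False)

# Each iteration yields one batch of images and the matching batch of labels.
shapes = [(tuple(x.shape), tuple(y.shape)) for x, y in loader]
for s in shapes:
    print(s)
```

Ten samples with a batch size of 4 give batches of 4, 4, and 2; the last batch is simply smaller unless `drop_last=True` is set.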

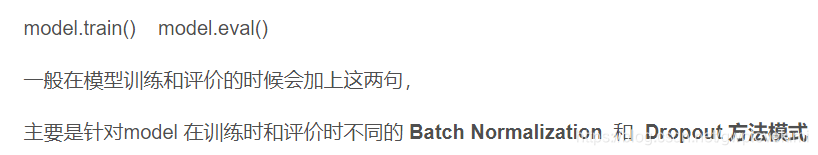
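A minimal sketch of the difference, as I understand it: with `inplace=False` the input tensor is left alone and a new tensor is returned, while with `inplace=True` the input's own memory is overwritten with the result.

```python
import torch
import torch.nn as nn

x = torch.tensor([-1.0, 2.0, -3.0])

y = nn.ReLU(inplace=False)(x)
x_after_copy = x.tolist()      # x is unchanged: [-1.0, 2.0, -3.0]

z = nn.ReLU(inplace=True)(x)
x_after_inplace = x.tolist()   # x has been overwritten: [0.0, 2.0, 0.0]

print(y.tolist(), x_after_copy, x_after_inplace)
print(z.data_ptr() == x.data_ptr())  # the result shares x's memory
```

The in-place version saves memory, at the cost of destroying the original values of the input.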

About setting the mode (train/eval) of the instantiated model when training and testing in PyTorch:

https://www.cnblogs.com/king-lps/p/8570021.html
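The effect of the two modes is easiest to see on a single Dropout layer. This is a sketch of my own, with p=0.5 and an all-ones input chosen for illustration.

```python
import torch
import torch.nn as nn

drop = nn.Dropout(p=0.5)
x = torch.ones(1000)

drop.train()            # training mode: elements are randomly zeroed
train_out = drop(x)     # survivors are scaled by 1 / (1 - p) = 2

drop.eval()             # evaluation mode: dropout does nothing
eval_out = drop(x)

print(sorted(set(train_out.tolist())))  # values drawn from {0.0, 2.0}
print(torch.equal(eval_out, x))         # True
```

Calling `model.train()` or `model.eval()` on a whole network sets this flag on every submodule, which is why it matters for networks containing Dropout or BatchNorm.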

About optimizer.step()

About torch.no_grad()

About torch.max
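A minimal sketch of what one call to `optimizer.step()` does, assuming plain SGD (the demo above uses Adam, whose update rule is more involved). The tensor values and learning rate are made up.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)

loss = (w ** 2).sum()   # gradient of the loss with respect to w is 2 * w = [2, 4]
optimizer.zero_grad()   # clear any old gradients
loss.backward()         # fill w.grad
optimizer.step()        # w <- w - lr * w.grad = [0.8, 1.6]

print(w.grad)
print(w)
```

So `backward()` only computes the gradients; the parameters themselves change only when `step()` is called.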
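A short sketch of the effect: inside the `torch.no_grad()` block, results are not tracked for differentiation, which saves memory and time during testing. The tensor values are made up.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)

y_tracked = w * 2            # recorded in the autograd graph

with torch.no_grad():
    y_untracked = w * 2      # computed without recording anything

print(y_tracked.requires_grad)    # True
print(y_untracked.requires_grad)  # False
```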
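A small sketch of how `torch.max(outputs, 1)` is used to turn class scores into predictions. The scores and labels below are made up.

```python
import torch

# Scores for 2 samples over 3 classes.
outputs = torch.tensor([[0.1, 2.0, 0.3],
                        [1.5, 0.2, 0.4]])

# With a dim argument, torch.max returns (max values, indices of the max).
values, predicted = torch.max(outputs, 1)
print(values)     # tensor([2.0000, 1.5000])
print(predicted)  # tensor([1, 0])

labels = torch.tensor([1, 2])
correct = (predicted == labels).sum().item()
print(correct)    # 1
```

In the test loop only the indices are needed, which is why the first return value is discarded with `_`.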