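Machine Learning and Deep Learning Series: Part 2, Deep Learning (12): Convolutional Neural Networks 3, Classic Models (LeNet-5, AlexNet, VGGNet, GoogLeNet, ResNet)

Convolutional Neural Networks 3: Classic Models

The classic convolutional network models are among the best tools for learning CNNs. They teach the principles and architectures, and their hyperparameters and trained parameters are also some of the best source material for transfer learning.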

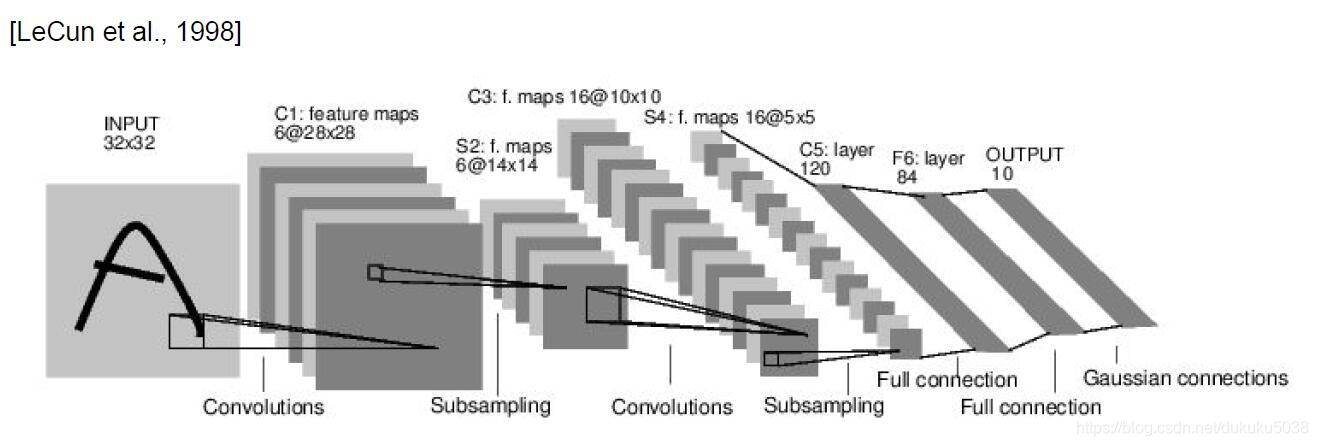

1. LeNet-5 [LeCun et al., 1998]

We start with the model proposed in 1998 by Yann LeCun, the father of CNNs.

Parameters: Conv filters were 5x5, applied at stride 1

Subsampling (Pooling) layers were 2x2, applied at stride 2

Architecture: [CONV-POOL-CONV-POOL-CONV-FC]
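The filter sizes and strides above determine how the feature map shrinks from layer to layer. Here is a minimal sketch of that arithmetic using the standard output-size formula (W - F + 2P) / S + 1. The 32x32 input size and the helper names (`conv_output_size`, `trace_sizes`) are assumptions made for illustration; they are not stated in the text above.

```python
def conv_output_size(input_size, filter_size, stride, padding=0):
    """Spatial output size of a conv or pooling layer: (W - F + 2P) / S + 1."""
    span = input_size - filter_size + 2 * padding
    if span % stride != 0:
        raise ValueError("filter does not tile the input evenly")
    return span // stride + 1


# (name, filter size, stride) for each spatial layer of CONV-POOL-CONV-POOL-CONV.
LENET5_LAYERS = [
    ("CONV1", 5, 1),
    ("POOL1", 2, 2),
    ("CONV2", 5, 1),
    ("POOL2", 2, 2),
    ("CONV3", 5, 1),
]


def trace_sizes(input_size, layers):
    """Return the spatial size after each layer, in order."""
    sizes = []
    size = input_size
    for name, filter_size, stride in layers:
        size = conv_output_size(size, filter_size, stride)
        sizes.append((name, size))
    return sizes


# Assumption: a 32x32 input image.
lenet_sizes = trace_sizes(32, LENET5_LAYERS)
for name, size in lenet_sizes:
    print(f"{name}: {size}x{size}")
```

Under that assumption the last convolution sees a 5x5 map and reduces it to 1x1, which is why the fully connected layer can follow directly.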

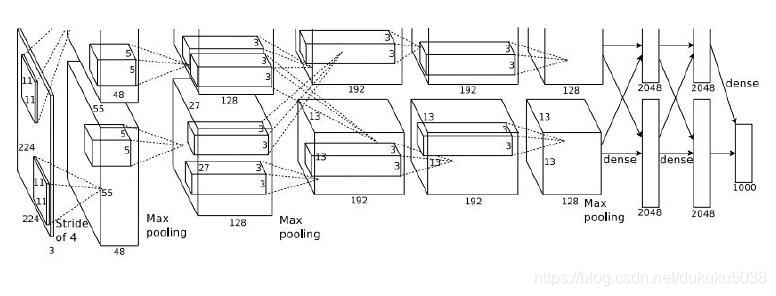

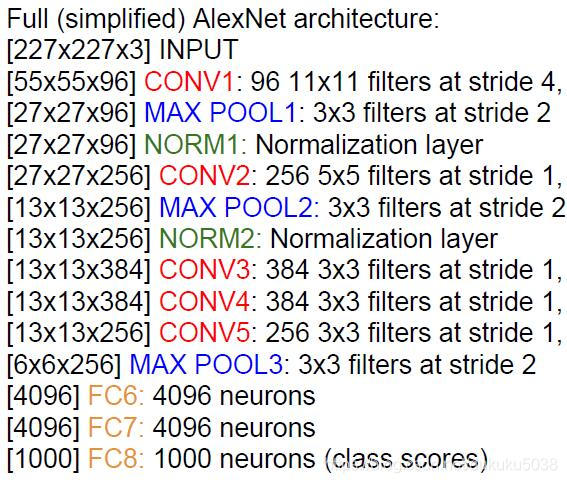

2. AlexNet [Krizhevsky et al., 2012]

This model won the 2012 ImageNet competition. It is fair to say that it raised the curtain on deep learning.

Model parameters:

(1) First layer:

Input: 227x227x3 images

(CONV1): 96 11x11 filters applied at stride 4,

Output volume [55x55x96]

Total parameters in this layer: (11 × 11 × 3) × 96 ≈ 35K

(2) Second layer (Pooling):

3x3 filters applied at stride 2

Output volume: 27x27x96

This layer has no parameters.
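The numbers for these first two layers can be checked with a few lines of arithmetic. This is a small sketch, assuming no zero-padding in either layer; the helper name `output_size` is chosen here for illustration.

```python
def output_size(input_size, filter_size, stride, padding=0):
    """Spatial output size: (W - F + 2P) / S + 1."""
    return (input_size - filter_size + 2 * padding) // stride + 1


# CONV1: 96 filters of 11x11x3 at stride 4 on a 227x227x3 input.
conv1_out = output_size(227, 11, 4)
conv1_weights = 11 * 11 * 3 * 96      # one 11x11x3 kernel per output channel
conv1_biases = 96                     # one bias per filter (not counted in the 35K above)

# POOL1: 3x3 window at stride 2; pooling has nothing to learn.
pool1_out = output_size(conv1_out, 3, 2)
pool1_params = 0

print(f"CONV1 output: {conv1_out}x{conv1_out}x96, weights: {conv1_weights}")
print(f"POOL1 output: {pool1_out}x{pool1_out}x96, params: {pool1_params}")
```

Pooling only changes the spatial size, so the depth stays at 96 while the map shrinks from 55x55 to 27x27.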

…

(3) Full architecture:

(4) Model characteristics and hyperparameter settings:

- first use of ReLU

- used Norm layers (not common anymore)

- heavy data augmentation

- dropout 0.5

- batch size 128

- SGD Momentum 0.9

- Learning rate 1e-2, reduced by 10 manually when val accuracy plateaus

- L2 weight decay 5e-4

- 7 CNN ensemble: 18.2% -> 15.4%
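The learning-rate rule in the list above (start at 1e-2, divide by 10 when validation accuracy stops improving) was applied by hand. The sketch below automates the same idea as an illustration only: the class name `PlateauLRSchedule`, the `patience` threshold, and the accuracy values are all hypothetical and are not taken from the AlexNet paper.

```python
class PlateauLRSchedule:
    """Divide the learning rate by 10 once validation accuracy stalls."""

    def __init__(self, lr=1e-2, factor=0.1, patience=2):
        self.lr = lr
        self.factor = factor
        self.patience = patience      # assumption: epochs to wait before reducing
        self.best = float("-inf")
        self.stale_epochs = 0

    def step(self, val_accuracy):
        """Record one epoch's validation accuracy and return the current rate."""
        if val_accuracy > self.best:
            self.best = val_accuracy
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
            if self.stale_epochs >= self.patience:
                self.lr *= self.factor
                self.stale_epochs = 0
        return self.lr


schedule = PlateauLRSchedule()
# Hypothetical validation accuracies, one per epoch.
history = [0.40, 0.50, 0.55, 0.55, 0.55, 0.56]
lrs = [schedule.step(acc) for acc in history]
print(lrs)
```

With these made-up accuracies the rate drops once, after two epochs without improvement.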

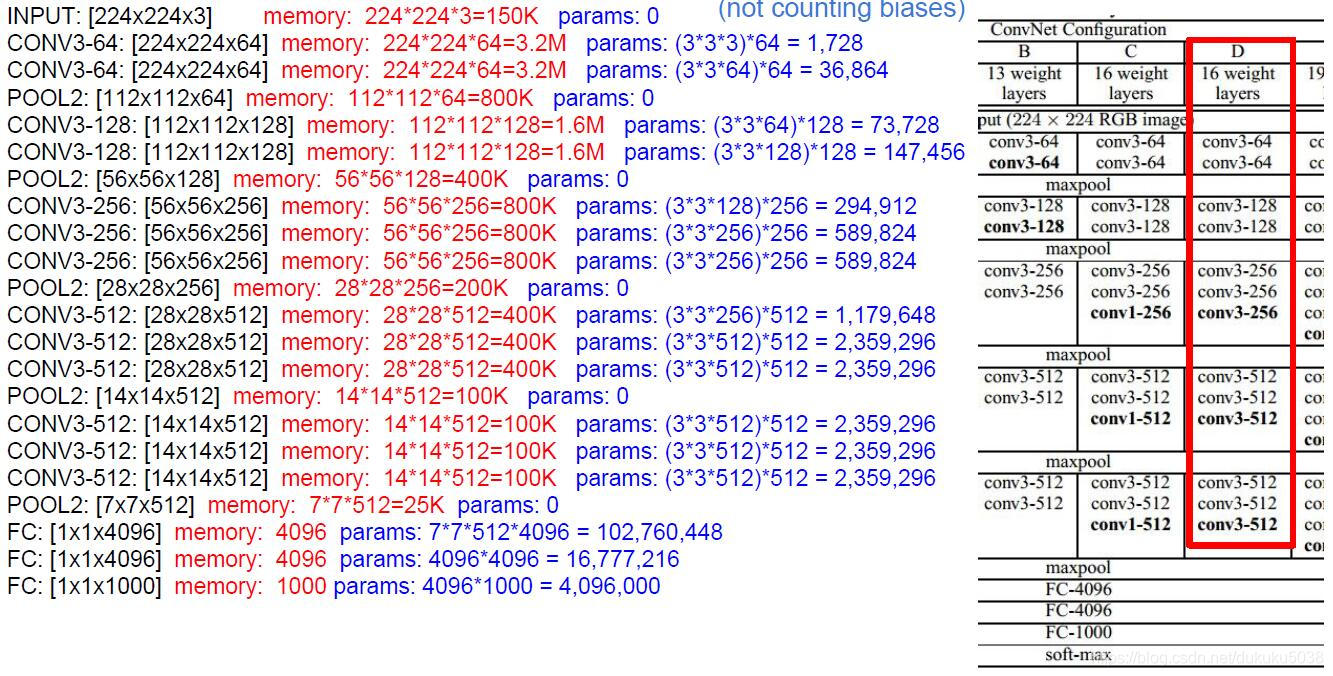

3. VGGNet [Simonyan and Zisserman, 2014]

Model characteristics:

Only 3x3 CONV at stride 1, pad 1, and 2x2 MAX POOL at stride 2;

Reduced top-5 error from 11.2% (ILSVRC 2013) to 7.3%;

TOTAL memory: 24M × 4 bytes ≈ 93MB per image (forward pass only; roughly 2x with the backward pass)

TOTAL params: 138M parameters
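The 138M figure can be reproduced by summing the weights layer by layer. This sketch assumes the 16-layer VGG configuration (13 conv layers plus 3 FC layers), a 224x224x3 input, and 1000 output classes; biases are left out. The names `VGG16_LAYOUT` and `count_vgg16_weights` are made up for this example.

```python
# Output channels of each 3x3 conv layer; "M" marks a 2x2 max pool at stride 2.
# Assumption: the 16-layer VGG configuration.
VGG16_LAYOUT = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                512, 512, 512, "M", 512, 512, 512, "M"]
FC_SIZES = [4096, 4096, 1000]


def count_vgg16_weights(input_size=224, in_channels=3):
    """Count conv and FC weights (biases excluded)."""
    conv_total = 0
    size, channels = input_size, in_channels
    for item in VGG16_LAYOUT:
        if item == "M":
            size //= 2                              # pooling halves the spatial size
        else:
            conv_total += 3 * 3 * channels * item   # 3x3 kernel per in/out channel pair
            channels = item                         # pad 1, stride 1 keeps the size
    fc_total = 0
    features = size * size * channels               # flattened 7x7x512 volume
    for out_features in FC_SIZES:
        fc_total += features * out_features
        features = out_features
    return conv_total, fc_total


conv_weights, fc_weights = count_vgg16_weights()
total_weights = conv_weights + fc_weights
print(f"conv: {conv_weights:,}  fc: {fc_weights:,}  total: {total_weights:,}")
```

Most of the weights sit in the fully connected layers, in particular the first one that follows the final 7x7x512 volume.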

Detailed model configuration and parameters:

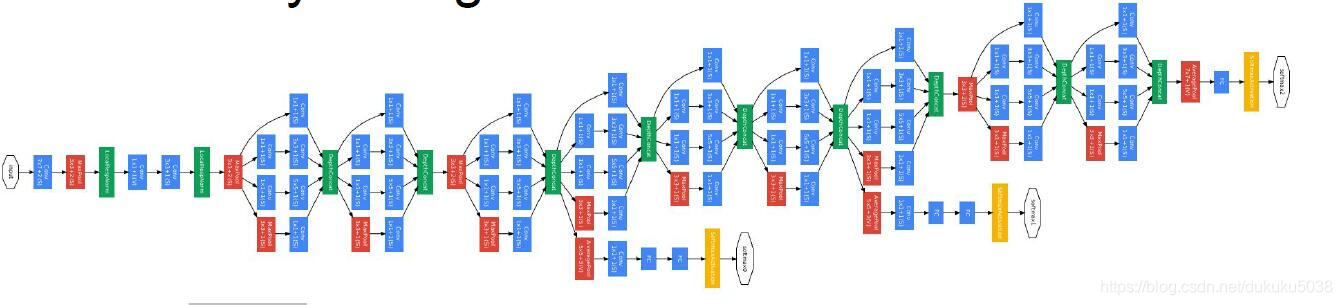

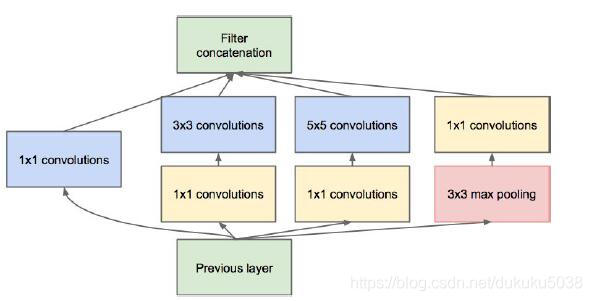

4. GoogLeNet [Szegedy et al., 2014]

ILSVRC 2014 winner (6.7% top-5 error)

Model characteristics:

- Inception module

- No large FC layers (global average pooling feeds a single linear classifier)

- Only 5 million params

- Compared to AlexNet:

  - 12x fewer params

  - 2x more compute

  - 6.67% top-5 error (vs. 16.4%)
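Much of the parameter saving comes from dropping the large fully connected layers. The sketch below compares two ways of attaching a classifier to the final feature map. It assumes a 7x7x1024 final feature map and 1000 classes, which are not stated in the text above, and the function names are hypothetical.

```python
def flattened_fc_weights(height, width, channels, num_classes):
    """Weights of an FC layer applied to the flattened feature map."""
    return height * width * channels * num_classes


def pooled_classifier_weights(channels, num_classes):
    """Weights of a linear classifier applied after global average pooling.

    Averaging each channel over its spatial positions leaves one value per
    channel, so the classifier only sees `channels` inputs.
    """
    return channels * num_classes


# Assumption: final feature map of 7x7x1024 and 1000 output classes.
flattened = flattened_fc_weights(7, 7, 1024, 1000)
pooled = pooled_classifier_weights(1024, 1000)
print(f"flatten + FC: {flattened:,} weights")
print(f"average pool + linear: {pooled:,} weights")
print(f"reduction: {flattened // pooled}x")
```

Under these assumptions the pooled classifier needs 49 times fewer weights, since the 7x7 spatial grid collapses to a single value per channel.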

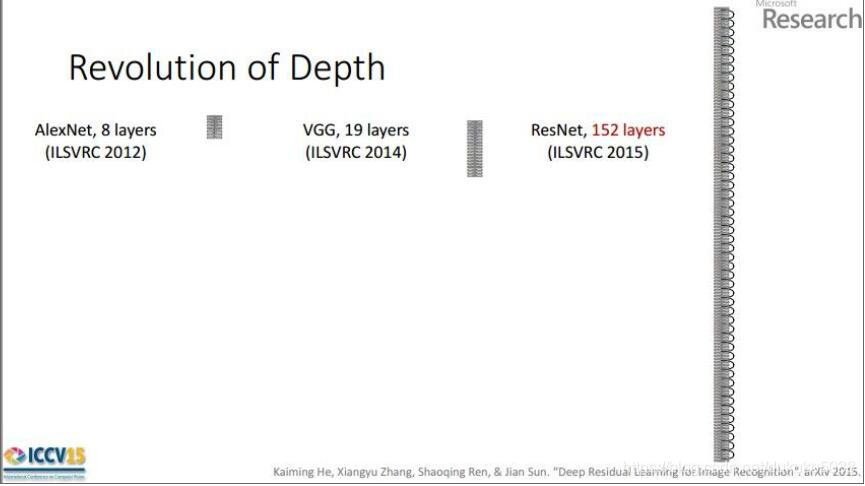

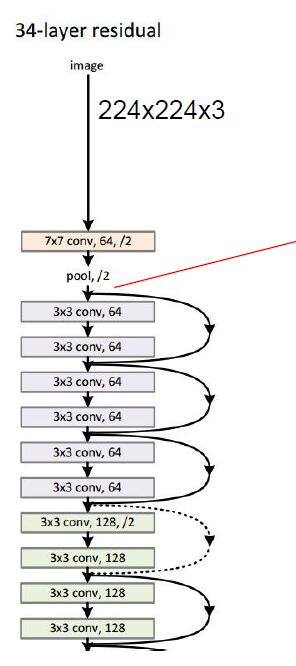

5. ResNet [He et al., 2015]

ILSVRC 2015 winner (3.6% top 5 error)

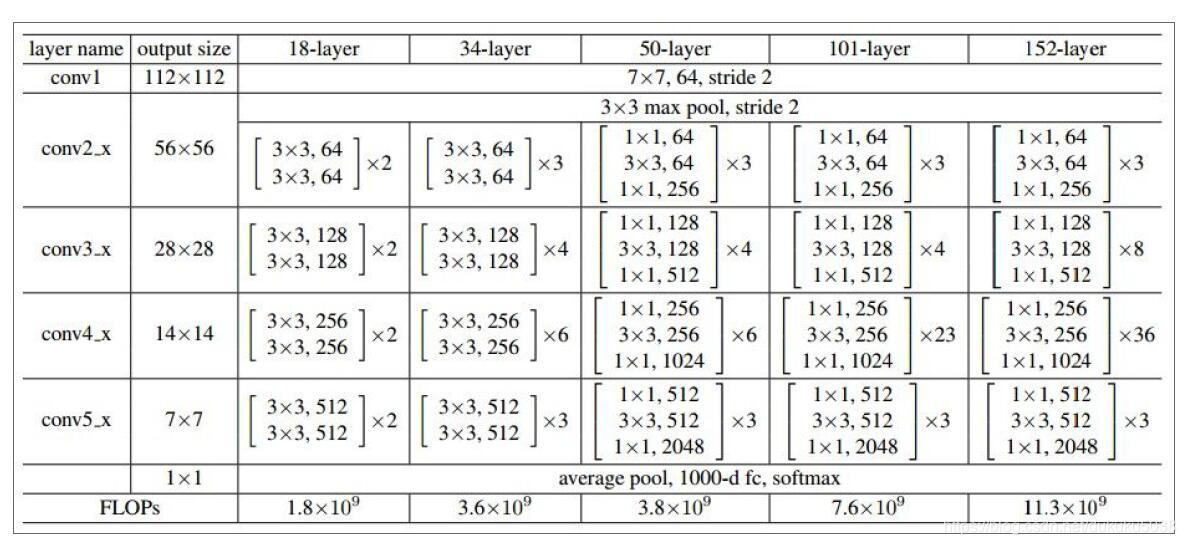

The deepest model I have seen so far: 152 layers!

- 2-3 weeks of training on an 8-GPU machine

- at runtime: faster than a VGGNet! (even though it has 8x more layers)

(1) Architecture

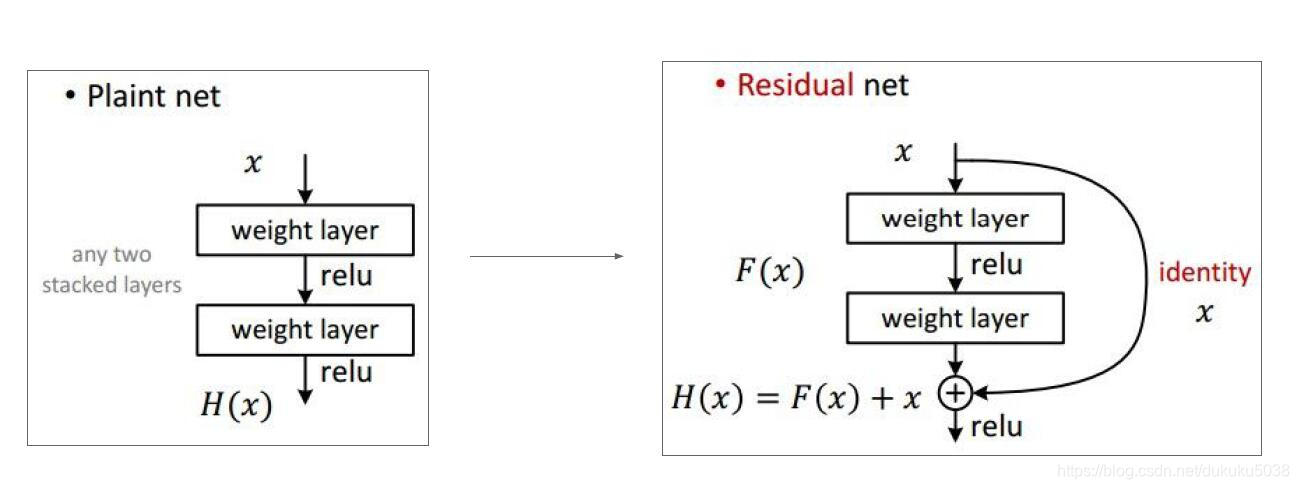

(2) The residual concept
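A residual block learns a correction F(x) and adds it to its input, so the output is F(x) + x rather than F(x) alone. Below is a deliberately simplified sketch of that idea using small dense layers in plain Python. The real network uses convolutions with batch normalization; the helper names and the toy weights are assumptions made only for illustration.

```python
def relu(vector):
    """Element-wise max(0, v)."""
    return [max(0.0, v) for v in vector]


def linear(weights, vector):
    """Matrix-vector product; `weights` is a list of rows."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(F(x) + x) with F(x) = w2 @ relu(w1 @ x)."""
    fx = linear(w2, relu(linear(w1, x)))
    # The skip connection adds the unchanged input back onto F(x).
    return relu([f + xi for f, xi in zip(fx, x)])


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

# With all-zero weights F(x) is zero, so the block passes x through unchanged.
identity_out = residual_block(x, zeros, zeros)
print(identity_out)
```

The zero-weight case shows why depth becomes easier to train: a block that has learned nothing still behaves as the identity instead of destroying its input.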

(3) Hyperparameter settings

- Batch Normalization after every CONV layer

- Xavier/2 initialization from He et al.

- SGD + Momentum (0.9)

- Learning rate: 0.1, divided by 10 when validation error plateaus

- Mini-batch size 256

- Weight decay of 1e-4

- No dropout used
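The "Xavier/2" initialization in the list above draws weights from a zero-mean Gaussian with variance 2 / fan_in, the extra factor of 2 compensating for ReLU zeroing roughly half of the activations. Here is a minimal sketch with the standard library; the function name `he_normal`, the layer sizes, and the seed are assumptions for the example.

```python
import math
import random


def he_normal(fan_in, fan_out, rng):
    """Sample a fan_out x fan_in weight matrix with std = sqrt(2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


rng = random.Random(0)                      # fixed seed so the run is repeatable
weights = he_normal(fan_in=512, fan_out=256, rng=rng)

# Check that the sampled weights have roughly the intended variance.
flat = [w for row in weights for w in row]
empirical_var = sum(w * w for w in flat) / len(flat)
target_var = 2.0 / 512
print(f"target variance: {target_var:.6f}, empirical: {empirical_var:.6f}")
```

For a convolutional layer, fan_in would be the kernel height times kernel width times the number of input channels.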

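(4) Layer details

Many of the figures and formulas in this column come from Professor Hung-yi Lee of National Taiwan University and from Stanford's CS229, CS231n, and CS224n courses. My thanks and respect go to these classic courses.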