Coursera Machine Learning Foundations, Homework 2: Python Implementation

阿新 • Published: 2018-11-12

## Machine Learning Foundations Homework 2

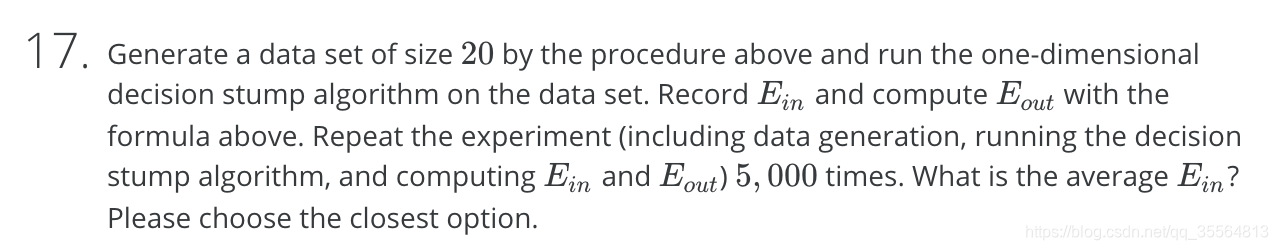

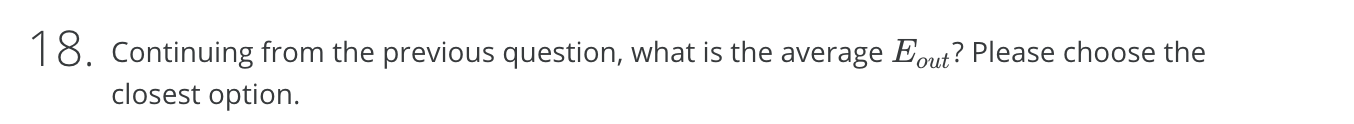

The code below combines Questions 17 and 18:

```python
import random

import numpy as np


class DecisionStump(object):
    def __init__(self, dimension, data_count, noise):
        self.dimension = dimension
        self.data_count = data_count
        self.noise = noise

    def generate_dataset(self):
        # Each row is (x, y): x ~ Uniform[-1, 1], y = sign(x) flipped with probability `noise`.
        dataset = np.zeros((self.data_count, self.dimension + 1))
        for i in range(self.data_count):
            x = random.uniform(-1, 1)
            y = np.sign(x) * np.sign(random.uniform(0, 1) - self.noise)
            dataset[i] = [x, y]  # fill row i only
        return dataset

    def get_theta(self, dataset):
        # Candidate thresholds: midpoints between adjacent sorted x values, plus 1
        # (the right end of the interval), which puts every point on the same side.
        l = np.sort(dataset[:, 0])
        theta = np.zeros((self.data_count, 1))
        for i in range(self.data_count - 1):
            theta[i] = (l[i] + l[i + 1]) / 2
        theta[-1] = 1
        return theta

    def question1718(self):
        sum_e_in = 0
        sum_e_out = 0
        for i in range(5000):
            dataset = self.generate_dataset()
            theta = self.get_theta(dataset)
            e_in = np.zeros((2, self.data_count))  # row 0: s = +1, row 1: s = -1
            for j in range(self.data_count):
                a = dataset[:, 1] * np.sign(dataset[:, 0] - theta[j])
                # a holds only -1 and +1, so the fraction of -1 entries
                # (the misclassified points) can be computed directly
                e_in[0][j] = (self.data_count - np.sum(a)) / (2 * self.data_count)
                e_in[1][j] = (self.data_count - np.sum(-a)) / (2 * self.data_count)
            min0, min1 = np.min(e_in[0]), np.min(e_in[1])
            if min0 < min1:
                s = 1
                theta_best = theta[np.argmin(e_in[0]), 0]
                sum_e_in += min0
            else:
                s = -1
                theta_best = theta[np.argmin(e_in[1]), 0]
                sum_e_in += min1
            # Closed-form E_out; the coefficient 0.3 equals 0.5 - noise for noise = 0.2
            e_out = 0.5 + 0.3 * s * (np.abs(theta_best) - 1)
            sum_e_out += e_out
        print(sum_e_in / 5000, sum_e_out / 5000)


if __name__ == '__main__':
    decision = DecisionStump(1, 20, 0.2)
    decision.question1718()
```
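The expression `0.5 + 0.3*s*(abs(theta) - 1)` used for `e_out` in the code above is a closed form for the 20% noise setting. As a sanity check, the sketch below compares it with a Monte Carlo estimate. It assumes the same data model as `generate_dataset` (x uniform on [-1, 1], label `sign(x)` flipped with probability `noise`). The function names `e_out_closed_form` and `e_out_monte_carlo` are hypothetical and not part of the original code.

```python
import numpy as np


def e_out_closed_form(s, theta, noise=0.2):
    """E_out of h(x) = s * sign(x - theta) for x ~ Uniform[-1, 1] (hypothetical helper)."""
    # mu: probability that h disagrees with the noiseless target sign(x).
    mu = abs(theta) / 2 if s == 1 else 1 - abs(theta) / 2
    # An error occurs when h disagrees and the label is not flipped,
    # or when h agrees and the label is flipped.
    return noise + (1 - 2 * noise) * mu


def e_out_monte_carlo(s, theta, noise=0.2, n=200_000, seed=0):
    """Estimate the same quantity by sampling (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1, 1, n)
    flip = rng.uniform(0, 1, n) < noise          # flip each label with probability `noise`
    y = np.sign(x) * np.where(flip, -1.0, 1.0)
    pred = s * np.sign(x - theta)
    return np.mean(pred != y)
```

With `noise = 0.2`, `noise + (1 - 2*noise) * mu` reduces to `0.5 + 0.3*s*(abs(theta) - 1)`, so the two functions should agree up to sampling error for any `s` in {+1, -1} and `theta` in [-1, 1].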

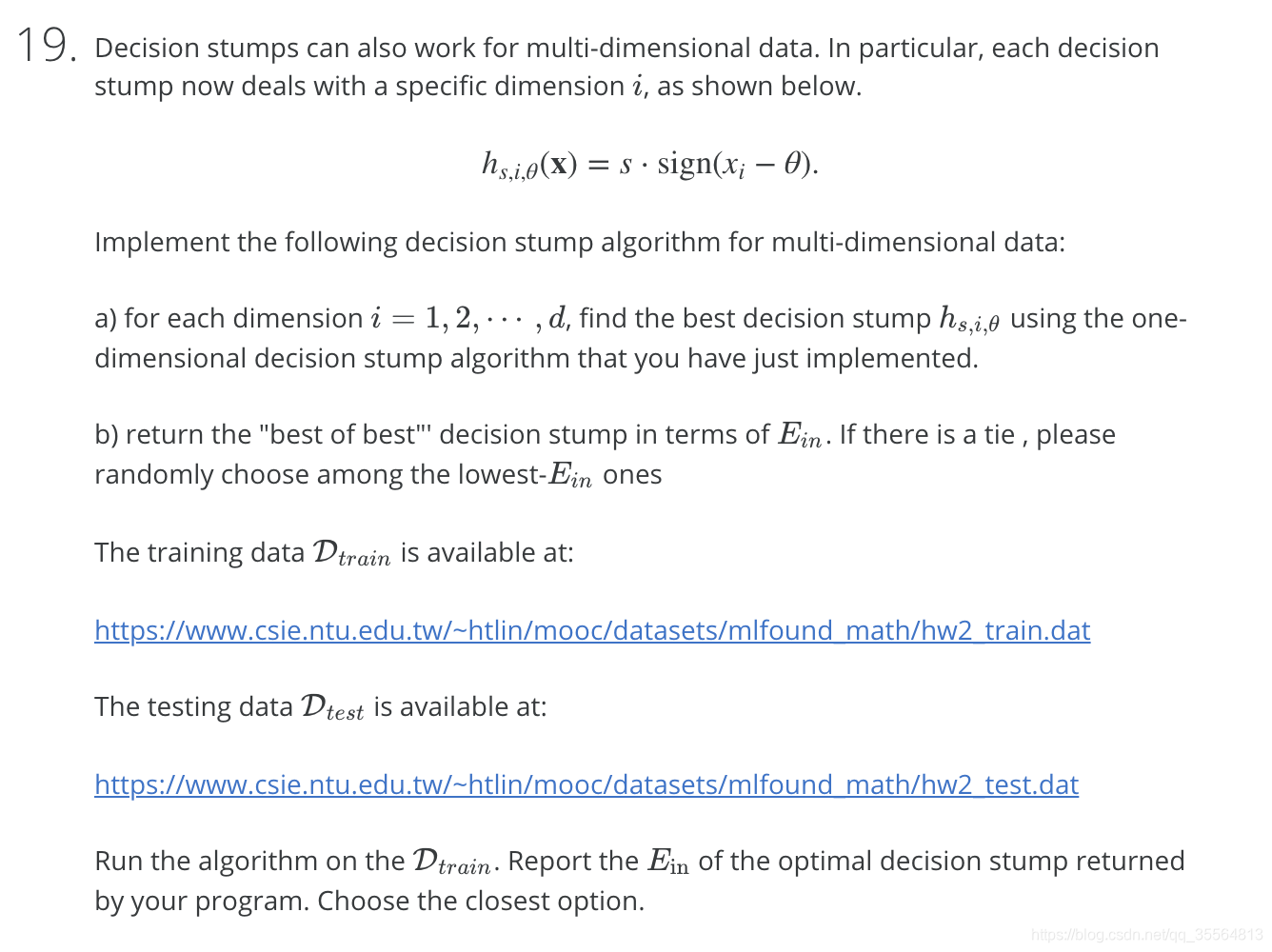

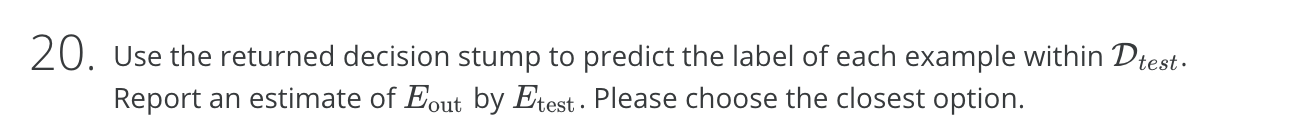

The code below combines Questions 19 and 20:

```python
import numpy as np


class DecisionStump(object):
    def get_train_dataset(self, path):
        # Each line holds whitespace-separated features followed by the label.
        data_set = np.loadtxt(path)
        data_count, dimension = data_set.shape[0], data_set.shape[1] - 1
        return data_set, dimension, data_count

    def get_theta(self, dataset):
        # Candidate thresholds for one feature: midpoints between adjacent sorted
        # values, plus one threshold above the maximum (all points on one side).
        data_count = len(dataset)
        l = np.sort(dataset)
        theta = np.zeros((data_count, 1))
        for i in range(data_count - 1):
            theta[i] = (l[i] + l[i + 1]) / 2
        theta[-1] = l[-1] + 1
        return theta

    def question19(self):
        dataset, dimension, data_count = self.get_train_dataset('hw2_train.dat.txt')
        s1 = []
        theta_best1 = []
        E_in = []
        for i in range(dimension):
            theta = self.get_theta(dataset[:, i])
            e_in = np.zeros((2, data_count))  # row 0: s = +1, row 1: s = -1
            for j in range(data_count):
                a = dataset[:, -1] * np.sign(dataset[:, i] - theta[j])
                # a holds only -1 and +1, so the fraction of -1 entries
                # (the misclassified points) can be computed directly
                e_in[0][j] = (data_count - np.sum(a)) / (2 * data_count)
                e_in[1][j] = (data_count - np.sum(-a)) / (2 * data_count)
            min0, min1 = np.min(e_in[0, :]), np.min(e_in[1, :])
            if min0 >= min1:
                s1.append(-1)
                theta_best1.append(theta[np.argmin(e_in[1]), 0])
            else:
                s1.append(1)
                theta_best1.append(theta[np.argmin(e_in[0]), 0])
            E_in.append(np.min(e_in))
        best_dim = np.argmin(E_in)  # feature whose best stump has the lowest E_in
        minS = s1[best_dim]
        minTheta = theta_best1[best_dim]
        print(np.min(E_in))
        return minS, minTheta, best_dim

    def question20(self):
        s, theta, best_dim = self.question19()
        dataset, dimension, data_count = self.get_train_dataset('hw2_test.dat.txt')
        # Evaluate the chosen stump on its own feature only; taking the minimum
        # test error over all features would amount to selecting on the test set.
        a = dataset[:, -1] * np.sign(dataset[:, best_dim] - theta) * s
        e_out = (data_count - np.sum(a)) / (2 * data_count)
        print(e_out)


if __name__ == '__main__':
    decision = DecisionStump()
    decision.question20()
```
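The search in `question19` loops over every threshold in Python. As a rough sketch, the same search can be written with NumPy broadcasting, and it can also return the index of the winning feature so that the test error is measured on that feature alone. `best_stump_1d` and `best_stump_multi` are hypothetical helper names that do not appear in the original code, and placing the extra threshold one unit below the smallest value is an assumption of this sketch.

```python
import numpy as np


def best_stump_1d(x, y):
    """Return (s, theta, e_in) for the best h(x) = s * sign(x - theta) on one feature."""
    xs = np.sort(x)
    # Candidates: one threshold below every point, then midpoints between neighbours.
    thetas = np.concatenate(([xs[0] - 1.0], (xs[:-1] + xs[1:]) / 2))
    # pred[k, n] = sign(x[n] - thetas[k]), i.e. the prediction for s = +1.
    pred = np.sign(x[None, :] - thetas[:, None])
    err_pos = np.mean(pred != y[None, :], axis=1)    # 0/1 error for s = +1
    err_neg = np.mean(-pred != y[None, :], axis=1)   # 0/1 error for s = -1
    k_pos, k_neg = np.argmin(err_pos), np.argmin(err_neg)
    if err_pos[k_pos] <= err_neg[k_neg]:
        return 1, thetas[k_pos], err_pos[k_pos]
    return -1, thetas[k_neg], err_neg[k_neg]


def best_stump_multi(X, y):
    """Run the 1-D search on every column and keep the feature with the lowest E_in."""
    results = [best_stump_1d(X[:, i], y) for i in range(X.shape[1])]
    best_dim = int(np.argmin([r[2] for r in results]))
    s, theta, e_in = results[best_dim]
    return best_dim, s, theta, e_in


def stump_error(X, y, best_dim, s, theta):
    """0/1 error of a fixed stump, evaluated only on the feature it was trained on."""
    return np.mean(s * np.sign(X[:, best_dim] - theta) != y)
```

With the training and test arrays loaded, one would call `best_stump_multi` on the training features and labels, then pass the returned `best_dim`, `s`, and `theta` to `stump_error` together with the test data to estimate E_out.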