Transfer Learning with Inception-v3 in TensorFlow

阿新 • Published: 2018-12-13

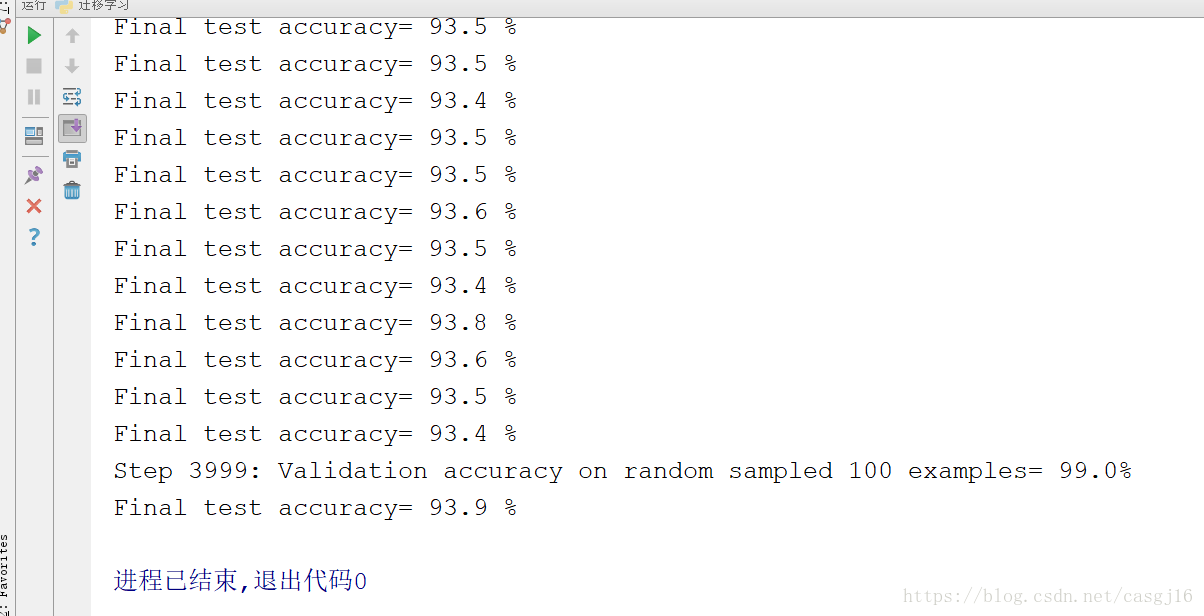

1. Implementing transfer learning with TensorFlow.

Flower photo dataset: http://download.tensorflow.org/example_images/flower_photos.tgz

Inception-v3 model: https://storage.googleapis.com/download.tensorflow.org/models/inception_dec_2015.zip

2. Code

    import glob
    import os.path
    import random

    import numpy as np
    import tensorflow as tf
    from tensorflow.python.platform import gfile

    # Written against the TensorFlow 1.x graph API (tf.placeholder, tf.Session).

    # Number of nodes in the Inception-v3 bottleneck layer
    BOTTLENECK_TENSOR_SIZE = 2048
    BOTTLENECK_TENSOR_NAME = 'pool_3/_reshape:0'
    JPEG_DATA_TENSOR_NAME = 'DecodeJpeg/contents:0'

    MODEL_DIR = 'C:/Users/casgj/PycharmProjects/CNN/inception_dec_2015'
    MODEL_FILE = 'tensorflow_inception_graph.pb'
    CACHE_DIR = 'C:/Users/casgj/PycharmProjects/CNN/bottleneck'
    INPUT_DATA = 'C:/Users/casgj/PycharmProjects/CNN/flower_photos'

    # Percentage of the data used for validation
    VALIDATION_PERCENTAGE = 10
    # Percentage of the data used for testing
    TEST_PERCENTAGE = 10

    # Training settings for the new layer
    LEARNING_RATE = 0.01
    STEPS = 4000
    BATCH = 100


    # Read the image list from the data folder and split it into
    # training, validation and testing sets, one entry per class.
    def create_image_lists(testing_percentage, validation_percentage):
        result = {}
        sub_dirs = [x[0] for x in os.walk(INPUT_DATA)]
        is_root_dir = True
        for sub_dir in sub_dirs:
            # The first entry returned by os.walk is the root folder itself.
            if is_root_dir:
                is_root_dir = False
                continue

            extensions = ['jpg', 'jpeg', 'JPG', 'JPEG']
            file_list = []
            dir_name = os.path.basename(sub_dir)
            for extension in extensions:
                file_glob = os.path.join(INPUT_DATA, dir_name, '*.' + extension)
                file_list.extend(glob.glob(file_glob))
            if not file_list:
                continue

            label_name = dir_name.lower()
            training_images = []
            testing_images = []
            validation_images = []
            for file_name in file_list:
                base_name = os.path.basename(file_name)
                # Assign each image at random: draw an integer in [0, 100)
                chance = np.random.randint(100)
                if chance < validation_percentage:
                    validation_images.append(base_name)
                elif chance < (testing_percentage + validation_percentage):
                    testing_images.append(base_name)
                else:
                    training_images.append(base_name)

            result[label_name] = {
                'dir': dir_name,
                'training': training_images,
                'testing': testing_images,
                'validation': validation_images,
            }
        return result


    # Path of image number `index` in the given category of a class.
    # The index wraps around the length of the category list.
    def get_image_path(image_lists, image_dir, label_name, index, category):
        label_lists = image_lists[label_name]
        category_list = label_lists[category]
        mod_index = index % len(category_list)
        base_name = category_list[mod_index]
        sub_dir = label_lists['dir']
        full_path = os.path.join(image_dir, sub_dir, base_name)
        return full_path


    # Path of the cached feature-vector file for an image
    def get_bottleneck_path(image_lists, label_name, index, category):
        return get_image_path(image_lists, CACHE_DIR,
                              label_name, index, category) + '.txt'


    # Run the pretrained network on one image and return its feature vector
    def run_bottleneck_on_image(sess, image_data, image_data_tensor,
                                bottleneck_tensor):
        bottleneck_values = sess.run(bottleneck_tensor,
                                     {image_data_tensor: image_data})
        bottleneck_values = np.squeeze(bottleneck_values)
        return bottleneck_values


    # Get the feature vector for one image, computing and caching it if needed
    def get_or_create_bottleneck(sess, image_lists, label_name, index,
                                 category, jpeg_data_tensor, bottleneck_tensor):
        # Locate the cache file for this image's feature vector
        label_lists = image_lists[label_name]
        sub_dir = label_lists['dir']
        sub_dir_path = os.path.join(CACHE_DIR, sub_dir)
        if not os.path.exists(sub_dir_path):
            os.makedirs(sub_dir_path)
        bottleneck_path = get_bottleneck_path(
            image_lists, label_name, index, category)

        if not os.path.exists(bottleneck_path):
            # No cache file yet: compute the vector with Inception-v3
            image_path = get_image_path(
                image_lists, INPUT_DATA, label_name, index, category)
            with gfile.FastGFile(image_path, 'rb') as image_file:
                image_data = image_file.read()
            bottleneck_values = run_bottleneck_on_image(
                sess, image_data, jpeg_data_tensor, bottleneck_tensor)
            # Save the computed feature vector as comma-separated text
            bottleneck_string = ','.join(str(x) for x in bottleneck_values)
            with open(bottleneck_path, 'w') as bottleneck_file:
                bottleneck_file.write(bottleneck_string)
        else:
            # Read the image's feature vector straight from the cache file
            with open(bottleneck_path, 'r') as bottleneck_file:
                bottleneck_string = bottleneck_file.read()
            bottleneck_values = [float(x) for x in bottleneck_string.split(',')]
        return bottleneck_values


    # Return a random batch of `how_many` feature vectors and one-hot labels
    # drawn from the given category ('training', 'validation' or 'testing')
    def get_random_cache_bottlenecks(sess, n_classes, image_lists, how_many,
                                     category, jpeg_data_tensor,
                                     bottleneck_tensor):
        bottlenecks = []
        ground_truths = []
        for _ in range(how_many):
            # Pick a random class
            label_index = random.randrange(n_classes)
            label_name = list(image_lists.keys())[label_index]
            # Pick a random image number (get_image_path wraps it)
            image_index = random.randrange(65536)
            bottleneck = get_or_create_bottleneck(
                sess, image_lists, label_name, image_index, category,
                jpeg_data_tensor, bottleneck_tensor)
            # One-hot label for the chosen class
            ground_truth = np.zeros(n_classes, dtype=np.float32)
            ground_truth[label_index] = 1.0
            bottlenecks.append(bottleneck)
            ground_truths.append(ground_truth)
        return bottlenecks, ground_truths


    # Return the feature vectors and labels for the entire testing set
    def get_test_bottlenecks(sess, image_lists, n_classes,
                             jpeg_data_tensor, bottleneck_tensor):
        bottlenecks = []
        ground_truths = []
        label_name_list = list(image_lists.keys())
        for label_index, label_name in enumerate(label_name_list):
            category = 'testing'
            for index, unused_base_name in enumerate(
                    image_lists[label_name][category]):
                bottleneck = get_or_create_bottleneck(
                    sess, image_lists, label_name, index, category,
                    jpeg_data_tensor, bottleneck_tensor)
                # One-hot label for this class
                ground_truth = np.zeros(n_classes, dtype=np.float32)
                ground_truth[label_index] = 1.0
                bottlenecks.append(bottleneck)
                ground_truths.append(ground_truth)
        return bottlenecks, ground_truths


    def sss():
        image_lists = create_image_lists(TEST_PERCENTAGE, VALIDATION_PERCENTAGE)
        n_classes = len(image_lists.keys())  # 5 for the flower_photos dataset

        # Load the pretrained Inception-v3 model
        with gfile.FastGFile(os.path.join(MODEL_DIR, MODEL_FILE), 'rb') as f:
            graph_def = tf.GraphDef()
            graph_def.ParseFromString(f.read())
        bottleneck_tensor, jpeg_data_tensor = tf.import_graph_def(
            graph_def,
            return_elements=[BOTTLENECK_TENSOR_NAME, JPEG_DATA_TENSOR_NAME])

        # Input of the new network: the bottleneck feature vectors
        bottleneck_input = tf.placeholder(
            tf.float32, [None, BOTTLENECK_TENSOR_SIZE],
            name='BottleneckInputPlaceholder')
        # Input for the ground-truth labels
        ground_truth_input = tf.placeholder(
            tf.float32, [None, n_classes], name='GroundTruthInput')

        # A single fully connected layer on top of the bottleneck features
        with tf.name_scope('final_training_ops'):
            weights = tf.Variable(tf.truncated_normal(
                [BOTTLENECK_TENSOR_SIZE, n_classes], stddev=0.001))
            biases = tf.Variable(tf.zeros([n_classes]))
            logits = tf.matmul(bottleneck_input, weights) + biases
            final_tensor = tf.nn.softmax(logits)

        # Cross-entropy loss. TensorFlow 1.x requires keyword arguments here.
        cross_entropy = tf.nn.softmax_cross_entropy_with_logits(
            logits=logits, labels=ground_truth_input)
        cross_entropy_mean = tf.reduce_mean(cross_entropy)
        train_step = tf.train.GradientDescentOptimizer(
            LEARNING_RATE).minimize(cross_entropy_mean)

        # Accuracy
        with tf.name_scope('evaluation'):
            correct_prediction = tf.equal(
                tf.argmax(final_tensor, 1), tf.argmax(ground_truth_input, 1))
            evaluation_step = tf.reduce_mean(
                tf.cast(correct_prediction, tf.float32))

        with tf.Session() as sess:
            # tf.initialize_all_variables() is deprecated in favor of this call
            init = tf.global_variables_initializer()
            sess.run(init)

            # Training loop
            for i in range(STEPS):
                # Fetch a random batch of BATCH training examples
                train_bottlenecks, train_ground_truth = \
                    get_random_cache_bottlenecks(
                        sess, n_classes, image_lists, BATCH, 'training',
                        jpeg_data_tensor, bottleneck_tensor)
                sess.run(train_step,
                         feed_dict={bottleneck_input: train_bottlenecks,
                                    ground_truth_input: train_ground_truth})

                if i % 100 == 0 or i + 1 == STEPS:
                    # Accuracy on a random validation batch
                    validation_bottlenecks, validation_ground_truth = \
                        get_random_cache_bottlenecks(
                            sess, n_classes, image_lists, BATCH, 'validation',
                            jpeg_data_tensor, bottleneck_tensor)
                    validation_accuracy = sess.run(
                        evaluation_step,
                        feed_dict={
                            bottleneck_input: validation_bottlenecks,
                            ground_truth_input: validation_ground_truth})
                    print('Step %d: Validation accuracy on random sampled '
                          '%d examples = %.1f%%'
                          % (i, BATCH, validation_accuracy * 100))

            # Accuracy on the full testing set
            test_bottlenecks, test_ground_truth = get_test_bottlenecks(
                sess, image_lists, n_classes,
                jpeg_data_tensor, bottleneck_tensor)
            test_accuracy = sess.run(evaluation_step, feed_dict={
                bottleneck_input: test_bottlenecks,
                ground_truth_input: test_ground_truth})
            print('Final test accuracy = %.1f%%' % (test_accuracy * 100))


    sss()

3. Results:
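
To make the printed accuracy figures easier to interpret, here is a minimal sketch in plain Python (no TensorFlow) of what `cross_entropy_mean` and `evaluation_step` measure in the script. The helper names `softmax`, `argmax`, `mean_cross_entropy` and `accuracy`, as well as the toy logits and labels, are assumptions made up for illustration; they are not part of the original code and are not actual results from the model.

```python
import math


def softmax(logits):
    """Turn one row of logits into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values):
    """Index of the largest entry in a list."""
    return max(range(len(values)), key=lambda i: values[i])


def mean_cross_entropy(batch_logits, batch_labels):
    """Average of -log(probability assigned to the true class)."""
    losses = []
    for logits, label in zip(batch_logits, batch_labels):
        probs = softmax(logits)
        losses.append(-math.log(probs[argmax(label)]))
    return sum(losses) / len(losses)


def accuracy(batch_logits, batch_labels):
    """Fraction of rows whose predicted class matches the one-hot label."""
    hits = sum(argmax(logits) == argmax(label)
               for logits, label in zip(batch_logits, batch_labels))
    return hits / len(batch_logits)


# Hypothetical toy batch: 4 examples, 3 classes (not real model output).
toy_logits = [[2.0, 0.5, 0.1],
              [0.2, 1.5, 0.3],
              [0.1, 0.2, 3.0],
              [1.0, 2.0, 0.5]]
toy_labels = [[1, 0, 0],
              [0, 1, 0],
              [0, 0, 1],
              [1, 0, 0]]

toy_loss = mean_cross_entropy(toy_logits, toy_labels)
toy_accuracy = accuracy(toy_logits, toy_labels)
print('Toy accuracy = %.1f%%' % (toy_accuracy * 100))  # prints 75.0%
```

In the toy batch the last example is misclassified, so three of four predictions match. The script applies the same comparison to a random batch of `BATCH` validation examples every 100 steps and to the whole testing set at the end.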