Three models for Kaggle’s “Flowers Recognition” Dataset

After resizing, I split the samples into two parts, one for training and one for validation. I didn’t set aside a test set because 1) the experiment was not for a competition, so I didn’t need a precise accuracy estimate; and 2) the dataset was already very small for training.

The images in train_images were added folder by folder, so the dataset needs to be shuffled. Otherwise, the model would see nothing but “daisy” in the first 800 images, which wouldn’t optimise its parameters well. Note that the same seed needs to be set and applied to both train_images and train_labels so that each image still matches the right label.
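Here is a minimal sketch of the shuffle-then-split step using NumPy. Instead of re-seeding before each shuffle, it draws one seeded permutation and applies it to both arrays, which keeps every image paired with its label in the same way. The function names, the seed and the 20% validation fraction are my own placeholders, not values from the original code.

```python
import numpy as np


def shuffle_in_unison(images, labels, seed=42):
    """Shuffle images and labels with the same permutation so pairs stay aligned."""
    images = np.asarray(images)
    labels = np.asarray(labels)
    if len(images) != len(labels):
        raise ValueError("images and labels must have the same length")
    # One permutation from a seeded generator is applied to both arrays.
    perm = np.random.default_rng(seed).permutation(len(images))
    return images[perm], labels[perm]


def split_train_val(images, labels, val_fraction=0.2):
    """Hold out the last `val_fraction` of the (already shuffled) samples."""
    n_val = int(len(images) * val_fraction)  # 0.2 is an assumed fraction
    n_train = len(images) - n_val
    return (images[:n_train], labels[:n_train]), (images[n_train:], labels[n_train:])


# Toy data: 10 "images" whose pixel values all equal their label.
labels = np.arange(10)
images = np.stack([np.full((4, 4, 3), i, dtype=np.float32) for i in labels])

shuffled_images, shuffled_labels = shuffle_in_unison(images, labels, seed=0)
(train_x, train_y), (val_x, val_y) = split_train_val(shuffled_images, shuffled_labels)
```

Shuffling before splitting matters here: if the split came first, the validation part would contain only the classes from the last sub-folders.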

Build The Model

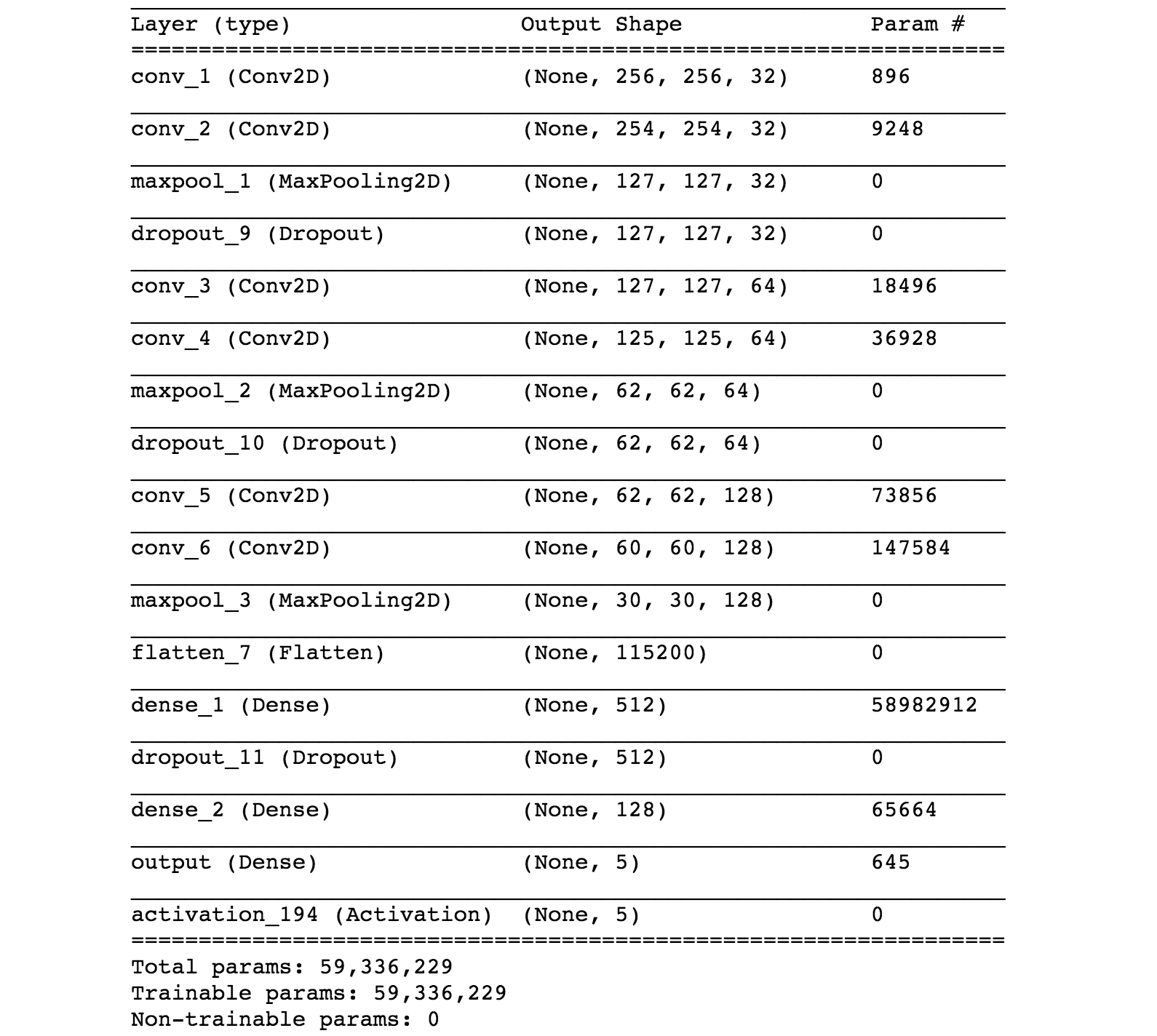

The first model was built from scratch. It had three convolutional layers and two fully connected (FC) layers. The convolutional layers had 32, 64 and 128 filters, a common setting for image classification tasks. The activation was ‘ReLU’ and the pooling size was 2x2. The two dense layers had 512 and 128 units, also with ‘ReLU’ activation, and a ‘softmax’ function was used at the last dense layer.
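For readers who prefer code to a description, the architecture above could look roughly like this in Keras. The filter counts, dense sizes, activations and pooling size come from the text; the 150x150 input size, the 3x3 kernels, the pooling after every convolution, and the optimiser and loss are all my assumptions.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers


def build_scratch_cnn(input_shape=(150, 150, 3), num_classes=5):
    """Conv layers with 32, 64, 128 filters, then Dense 512, 128 and a softmax output."""
    model = keras.Sequential([
        keras.Input(shape=input_shape),                # input size is an assumption
        layers.Conv2D(32, (3, 3), activation="relu"),  # 3x3 kernels are an assumption
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(128, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Flatten(),
        layers.Dense(512, activation="relu"),
        layers.Dense(128, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),
    ])
    # Optimiser and loss are assumptions; integer class labels are expected.
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


scratch_model = build_scratch_cnn()
# Smoke test on two blank images: one probability per class for each image.
probs = scratch_model.predict(np.zeros((2, 150, 150, 3), dtype="float32"), verbose=0)
```

Each row of `probs` sums to 1 because of the softmax output, so the predicted class is simply the index of the largest value.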

The second model was a customised version of the pre-trained VGG19. Here I froze the first 5 layers (making their parameters untrainable) and added two dense layers of size 1028 with ‘ReLU’ activation. The last layer and its parameters were the same as in the first model.

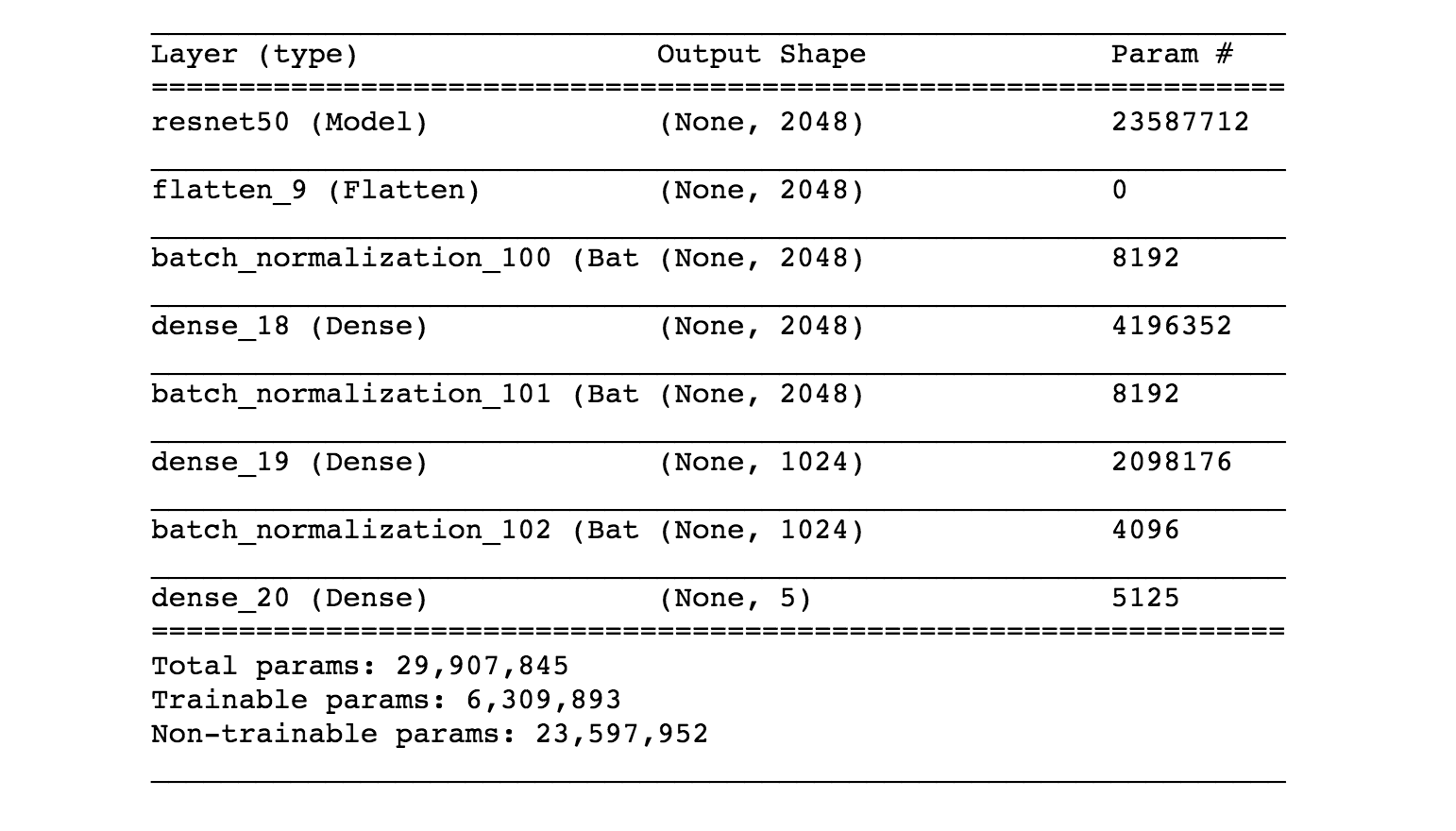

The third model was a customised version of the pre-trained ResNet-50. All settings were the same as for the second model, except that only the first layer was frozen, so more of the network could adapt to the new dataset.
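Both transfer-learning setups described above can be sketched with one helper. This is only my reading of the description: the helper name, the `Flatten` layer between the backbone and the dense layers, and the input size are assumptions, and "first N layers" is taken to mean the first N entries of the backbone's `layers` list (which includes the input layer).

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers


def build_transfer_model(backbone, n_frozen, input_shape=(150, 150, 3),
                         num_classes=5, weights="imagenet"):
    """Attach two Dense(1028) layers and a softmax output to a pre-trained backbone."""
    base = backbone(include_top=False, weights=weights, input_shape=input_shape)
    # Freeze only the first `n_frozen` layers; everything after them stays trainable.
    for layer in base.layers[:n_frozen]:
        layer.trainable = False
    x = layers.Flatten()(base.output)  # Flatten is an assumption
    x = layers.Dense(1028, activation="relu")(x)
    x = layers.Dense(1028, activation="relu")(x)
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs=base.input, outputs=outputs)


# weights=None skips the ImageNet download and a small input keeps the demo light;
# use weights="imagenet" to get the pre-trained features discussed in the post.
vgg_model = build_transfer_model(keras.applications.VGG19, n_frozen=5,
                                 input_shape=(64, 64, 3), weights=None)
resnet_model = build_transfer_model(keras.applications.ResNet50, n_frozen=1,
                                    input_shape=(64, 64, 3), weights=None)

vgg_probs = vgg_model.predict(np.zeros((1, 64, 64, 3), dtype="float32"), verbose=0)
```

The only difference between the two models is the backbone and the value of `n_frozen`, which makes it easy to rerun the comparison with other freezing depths.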

Input Data

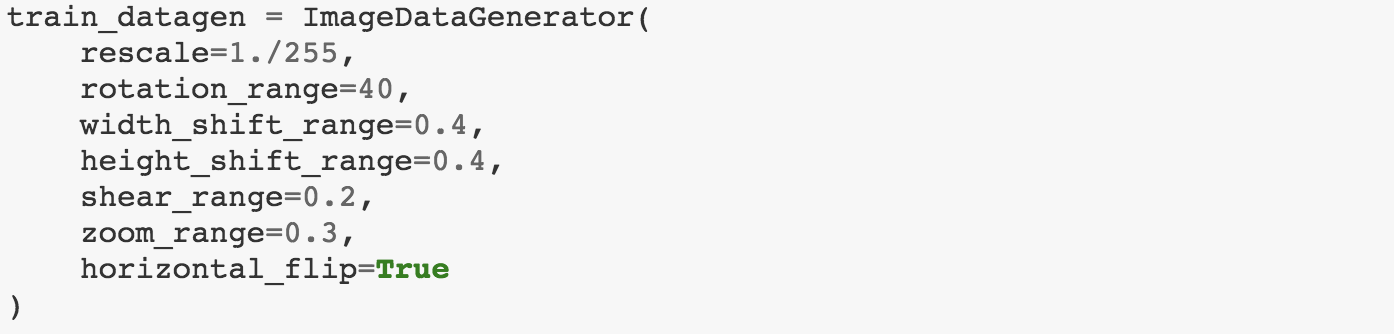

Since the dataset was small, data augmentation seemed a useful way to improve accuracy. Besides normalising pixel values by dividing by 255, I applied rotation, shift, shear, zoom and horizontal flip to the input data with ImageDataGenerator in Keras.
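As a rough sketch, the augmentation above maps onto ImageDataGenerator arguments like this. The transformation types come from the text, but every range value below is a placeholder I picked, and the separate rescale-only generator for validation is a common convention that I am assuming rather than quoting. Note that recent TensorFlow releases mark ImageDataGenerator as deprecated in favour of preprocessing layers, and its random transforms rely on SciPy being installed.

```python
import numpy as np
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Range values are placeholders, not the ones used in the post.
train_datagen = ImageDataGenerator(
    rescale=1.0 / 255,       # normalise pixel values to [0, 1]
    rotation_range=20,       # random rotation, in degrees
    width_shift_range=0.2,   # horizontal shift, as a fraction of the width
    height_shift_range=0.2,  # vertical shift, as a fraction of the height
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
)
# Assumption: validation images are only rescaled, never augmented.
val_datagen = ImageDataGenerator(rescale=1.0 / 255)

# Stand-in data so the sketch runs without the real dataset.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(8, 64, 64, 3)).astype("float32")
fake_labels = rng.integers(0, 5, size=8)

# Draw one augmented batch of four images and their labels.
batch_x, batch_y = next(train_datagen.flow(fake_images, fake_labels, batch_size=4, seed=0))
```

Because the transformations are sampled anew for every batch, the model rarely sees exactly the same image twice, which is what helps on a small dataset.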

Train and Evaluate the Model

The batch size was 32, a common choice that suits the GPU’s parallel computing.

I chose a different number of epochs for each of the three models.

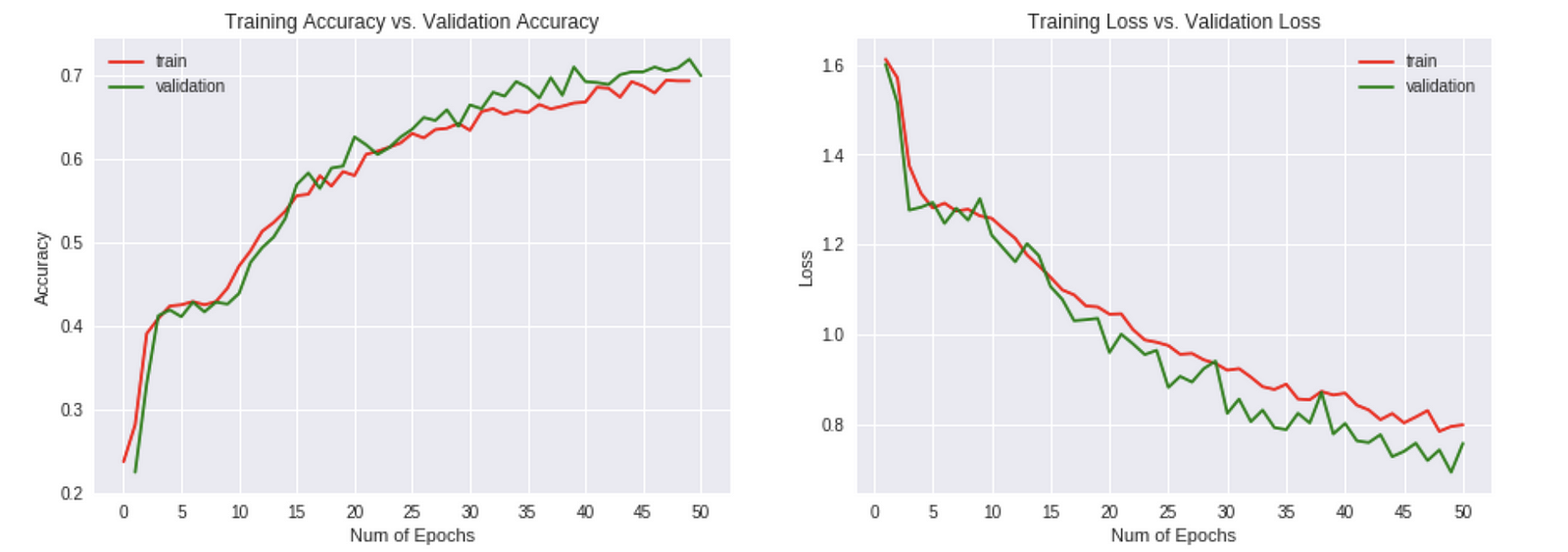

- I used 50 for the first model because it was built from scratch and needed longer to learn.

- I used 10 for the pre-trained VGG19 model because the training and validation accuracies both stayed low (only 0.24, barely above the 0.2 expected from random guessing among five classes). Even when I froze only the first layer, the result was similar. ResNet-50 is a deeper, more complex architecture than VGG19, and without the original fully connected head its convolutional base also has slightly more parameters. Maybe the information preserved in the pre-trained VGG19 is not rich enough for this dataset, or is not suited to it. I might try adding more convolutional layers to VGG19 in the future.

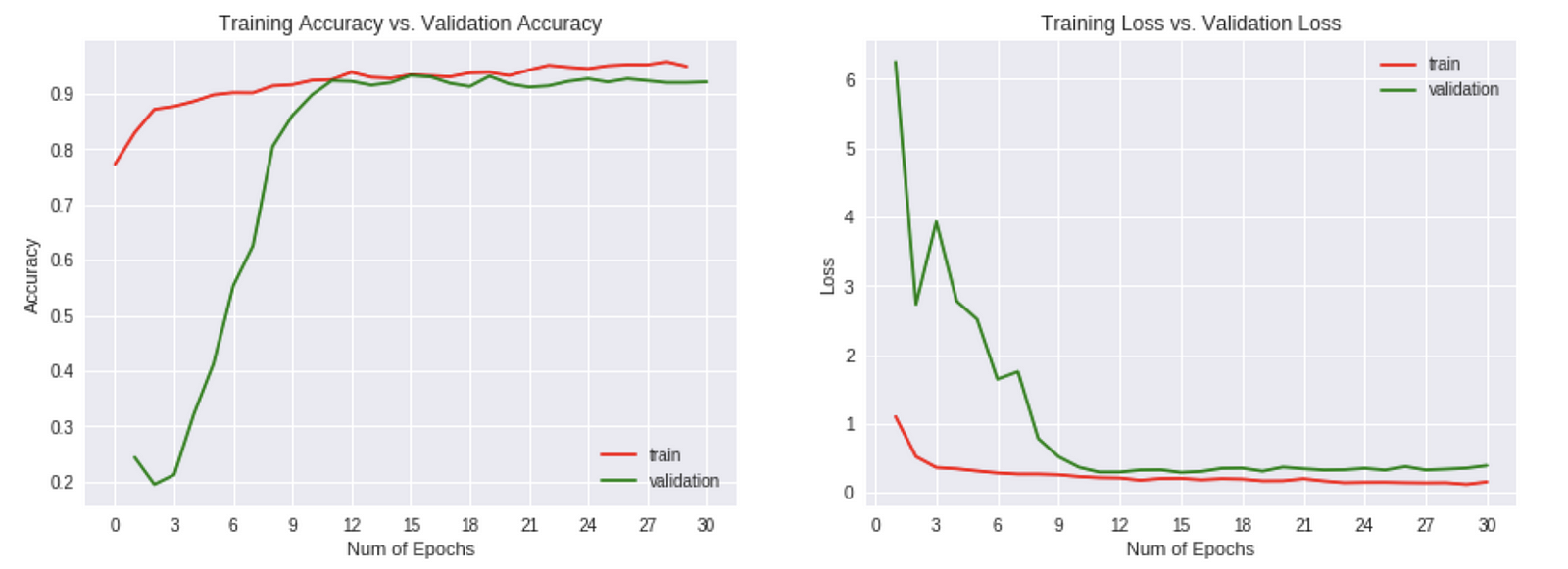

- For the third model (pre-trained ResNet-50) I used 30. I didn’t expect it to fit so well in only around 12 epochs. Since VGG19 and ResNet-50 were both pre-trained on the same dataset, ImageNet, the features they capture should be similar, so it was a little surprising to get such different results.

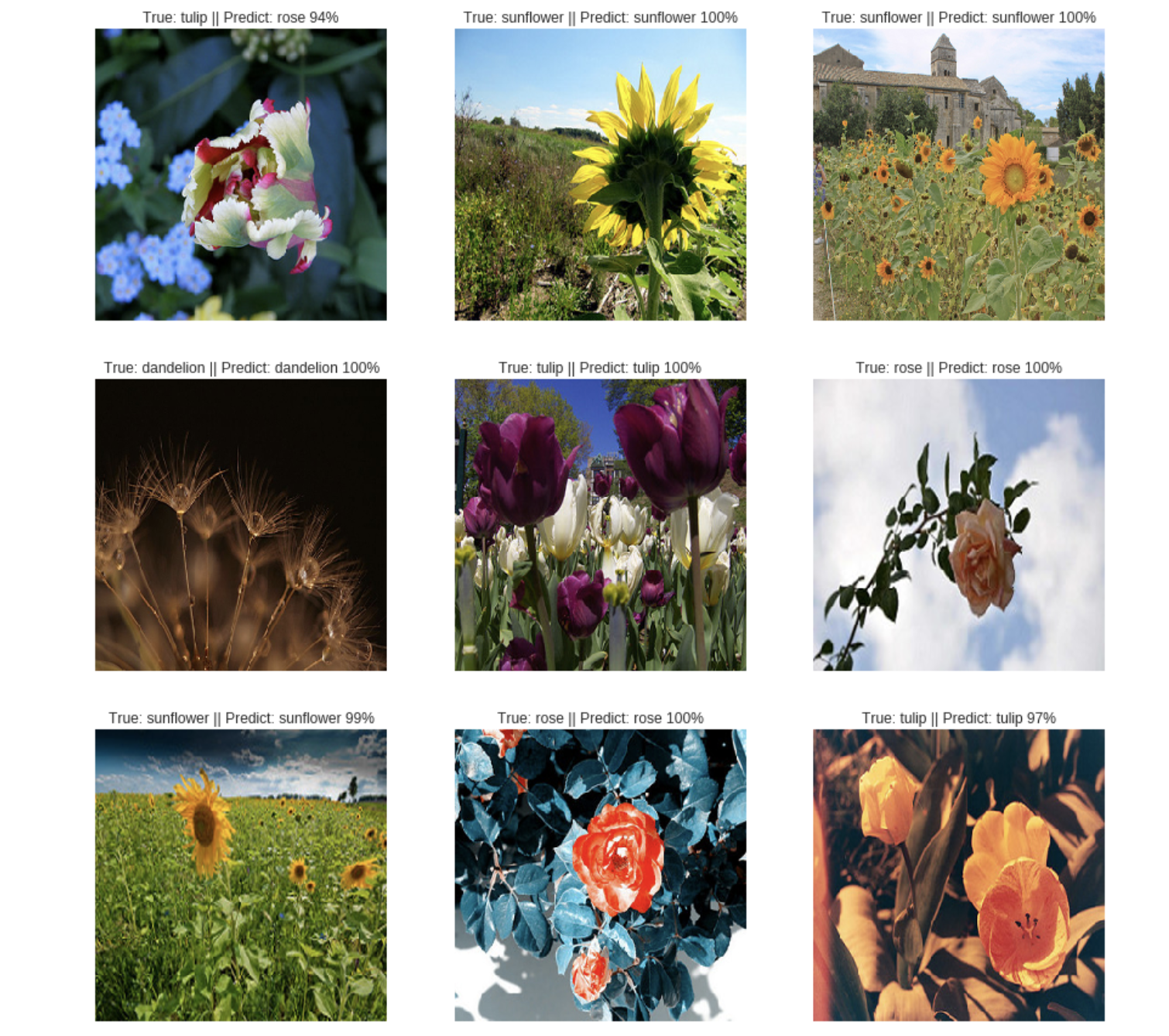

As we can see, the accuracy of the customised ResNet-50 model is very good. However, I suspect these five flower classes are already included in the ImageNet dataset, which would make my experiment a bit of a cheat.

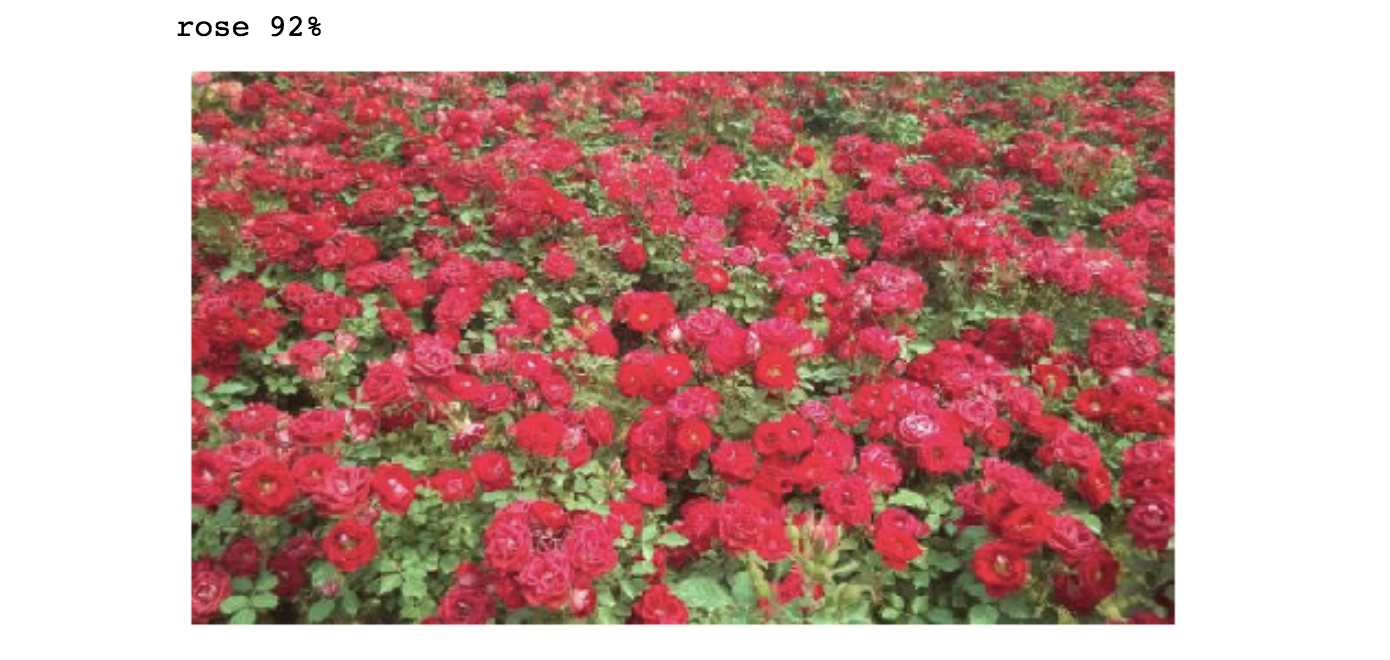

Finally, I downloaded some random flower pictures from the internet and tested my models on them. The customised ResNet-50 model worked perfectly, and the model built from scratch also made reasonable predictions.
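Turning a model output into a flower name for a new picture could look like the sketch below. The helper names are hypothetical, and the class order is an assumption: I take it to be the alphabetical order of the dataset's sub-folders, which has to match the label encoding used during training. The downloaded image would also need resizing to the training input size first.

```python
import numpy as np

# Assumed class order: alphabetical sub-folder names of the Kaggle dataset.
CLASS_NAMES = ["daisy", "dandelion", "rose", "sunflower", "tulip"]


def preprocess(image):
    """Scale a uint8 image array of shape (H, W, 3) to [0, 1] and add a batch axis."""
    scaled = np.asarray(image, dtype=np.float32) / 255.0
    return scaled[np.newaxis, ...]


def decode_prediction(probabilities, class_names=CLASS_NAMES):
    """Return (label, confidence) for one softmax output vector."""
    probabilities = np.asarray(probabilities).ravel()
    best = int(np.argmax(probabilities))
    return class_names[best], float(probabilities[best])


# With a trained Keras model this would be: probs = model.predict(preprocess(img))[0]
example_probs = [0.05, 0.10, 0.70, 0.10, 0.05]  # made-up values for illustration
label, confidence = decode_prediction(example_probs)
batch = preprocess(np.zeros((8, 8, 3), dtype=np.uint8))
```

Looking at the confidence as well as the label is useful for pictures of flowers outside the five classes, where the model is still forced to pick one of them.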

So, that’s it for now. I might update my models in the future, and I hope to find out why the second and third results are so different.

Again, the code and details can be viewed on my GitHub.

Thanks for reading!