High Quality Video Encoding at Scale

At Netflix we receive high quality sources for our movies and TV shows and encode them to the best video streams possible for a given member’s viewing device and bandwidth capabilities. With the continued growth of our service it has been essential to build a video encoding pipeline that is highly robust, efficient and scalable. Our production system is designed to easily scale to support the demands of the business (i.e., more titles, more video encodes, shorter time to deploy), while guaranteeing a high quality of experience for our members.

Pipeline in the Cloud

The video encoding pipeline runs on EC2 Linux cloud instances. The elasticity of the cloud enables us to seamlessly scale up when more titles need to be processed, and scale down to free up resources. Our video processing applications don’t require any special hardware and can run on a number of EC2 instance types. Long processing jobs are divided into smaller tasks and parallelized to reduce end-to-end delay and local storage requirements. It also allows us to exploit our

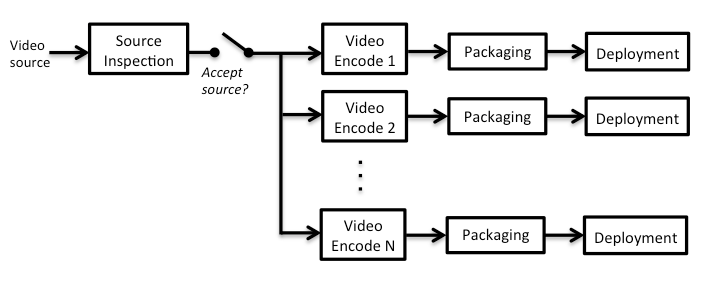

The accompanying figure shows a high-level overview of our system. We ingest high quality video sources and generate video encodes of various codec profiles, at multiple quality representations per profile. The encodes are packaged and then deployed to a content delivery network for streaming. During a streaming session, the client requests the encodes it can play and adaptively switches among quality levels based on network conditions.

Video Source Inspection

To ensure that we have high quality output streams, we need pristine video sources. Netflix ingests source videos from our originals production houses or content partners. In some undesirable cases, the delivered source video contains distortion or artifacts which would result in bad quality video encodes: garbage in means garbage out. These artifacts may have been introduced by multiple processing and transcoding steps before delivery, data corruption during transmission or storage, or human errors during content production. Rather than fixing the source video issues after ingest (for example, applying error concealment to corrupted frames or re-editing sources which contain extra content), Netflix rejects the problematic source video and requests redelivery. Rejecting problematic sources ensures that:

- The best source video available is ingested into the system. In many cases, error mitigation techniques only partially fix the problem.

- Complex algorithms (which could have been avoided by better processes upstream) do not unnecessarily burden the Netflix ingest pipeline.

- Source issues are detected early where a specific and actionable error can be raised.

- Content partners are motivated to triage their production pipeline and address the root causes of the problems. This will lead to improved video source deliveries in the future.

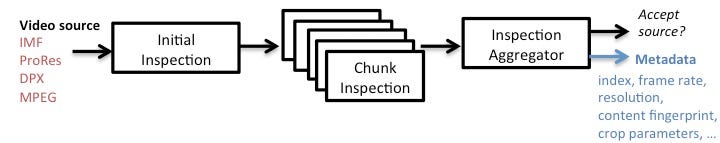

Our preferred source type is Interoperable Master Format (IMF). In addition, we support ProRes, DPX, and MPEG (typically older sources). During source inspection, we 1) verify that the source conforms to the relevant specification(s), 2) detect content that could lead to a bad viewing experience and 3) generate metadata required by the encoding pipeline. If the inspection deems the source unacceptable, the system automatically informs our content partner about the issues and requests a redelivery of the source.

A modern 4K source file can be quite large. Larger, in fact, than a typical drive on an EC2 instance. In order to efficiently support these large source files, we must run the inspection on the file in smaller chunks. This chunked model lends itself to parallelization. As the more detailed diagram shows, an initial inspection step is performed to index the source file, i.e., determine the byte offsets for frame-accurate seeking, and generate basic metadata such as resolution and frame count. The file segments are then processed in parallel on different instances. For each chunk, bitstream-level and pixel-level analysis is applied to detect errors and generate metadata such as temporal and spatial fingerprints. After all the chunks are inspected, the results are assembled by the inspection aggregator to determine whether the source should be allowed into the encoding pipeline. With our highly optimized inspection workflow, we can inspect a 4K source in less than 15 minutes. Note that a longer source simply yields more chunks, which are inspected in parallel, so the total inspection time still stays under 15 minutes.
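The split, inspect, and aggregate flow described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the names `split_into_chunks`, `inspect_chunk`, and `inspect_source` are hypothetical, a "frame" is any Python value with `None` standing in for a corrupt frame, and a thread pool stands in for the separate cloud instances.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class ChunkReport:
    start_frame: int
    end_frame: int  # exclusive
    errors: list


def split_into_chunks(frame_count, chunk_size):
    """Return (start, end) frame ranges that cover the source exactly once."""
    return [(start, min(start + chunk_size, frame_count))
            for start in range(0, frame_count, chunk_size)]


def inspect_chunk(frames, start, end):
    """Hypothetical per-chunk check: a frame stored as None counts as corrupt."""
    errors = [f"corrupt frame {i}" for i in range(start, end) if frames[i] is None]
    return ChunkReport(start, end, errors)


def inspect_source(frames, chunk_size=4, workers=4):
    """Inspect chunks in parallel, then aggregate into one accept/reject decision."""
    ranges = split_into_chunks(len(frames), chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the input ranges.
        reports = list(pool.map(lambda r: inspect_chunk(frames, *r), ranges))
    all_errors = [error for report in reports for error in report.errors]
    return len(all_errors) == 0, all_errors
```

Calling `inspect_source` on a ten-frame list whose seventh entry is `None` rejects the source and names the offending frame, which mirrors the idea of raising a specific and actionable error early.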

Parallel Video Encoding

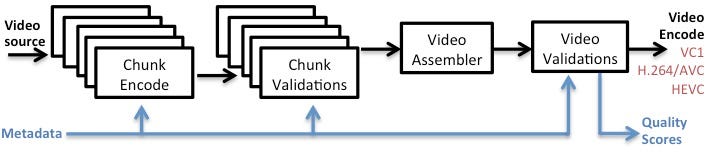

At Netflix we stream to a heterogeneous set of viewing devices. This requires a number of codec profiles: VC1, H.264/AVC Baseline, H.264/AVC Main and HEVC. We also support varying bandwidth scenarios for our members, all the way from sub-0.5 Mbps cellular to 100+ Mbps high-speed Internet. To deliver the best experience, we generate multiple quality representations at different bitrates (ranging from 100 kbps to 16 Mbps) and the Netflix client adaptively selects the optimal stream given the instantaneous bandwidth.
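To make the adaptive selection concrete, here is a minimal sketch of one simple policy: pick the highest bitrate that fits within a fraction of the measured bandwidth. The function `select_stream`, the `safety_factor` parameter, and the intermediate ladder values are all assumptions made for illustration; only the 100 kbps and 16 Mbps endpoints come from the text, and a real client's logic is more involved.

```python
def select_stream(ladder_kbps, bandwidth_kbps, safety_factor=0.8):
    """Pick the highest bitrate that fits within a fraction of measured bandwidth.

    Falls back to the lowest bitrate when nothing fits, so playback can start.
    """
    budget = bandwidth_kbps * safety_factor
    playable = [bitrate for bitrate in sorted(ladder_kbps) if bitrate <= budget]
    return playable[-1] if playable else min(ladder_kbps)


# Illustrative ladder: only the endpoints (100 kbps, 16 Mbps) are from the text.
LADDER_KBPS = [100, 500, 1000, 2000, 4000, 8000, 16000]
```

With this ladder, a measured bandwidth of 3000 kbps leaves a budget of 2400 kbps, so the 2000 kbps stream is chosen; a client on a very slow link still gets the 100 kbps stream.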

Similar to inspection, encoding is performed on chunks of the source file, which allows for efficient parallelization. Since we strive for quality control at every step of the pipeline, we verify the correctness of each encoded chunk right after it completes encoding. If a problem is detected, we can immediately triage the problem (or in the case of transient errors, resubmit the task) without waiting for the entire video to complete. When all the chunks corresponding to a stream have successfully completed, they are stitched together by a video assembler. To guard against frame accuracy issues that may have been introduced by incorrect parallel encoding (for example, chunks assembled in the wrong order, or frames dropped or duplicated at chunk boundaries), we validate the assembled stream by comparing the spatial and temporal fingerprints of the encode with those of the source video (fingerprints of the source are generated during the inspection stage).
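The fingerprint comparison can be illustrated with a deliberately simplified sketch. It assumes each frame's fingerprint is a single number (for example, some per-frame summary statistic) and that a lossy encode may shift it slightly, hence the `tolerance` parameter. The function name `validate_assembly` and this scalar fingerprint are hypothetical; the fingerprints used in the real pipeline are not described here.

```python
def validate_assembly(source_fps, encode_fps, tolerance=0.0):
    """Compare per-frame fingerprints and return a list of readable problems.

    An empty list means the assembled encode lines up with the source.
    """
    problems = []
    if len(source_fps) != len(encode_fps):
        problems.append(
            f"frame count mismatch: source={len(source_fps)} encode={len(encode_fps)}"
        )
    # Report only the first divergence; later frames are usually shifted as well.
    for index, (src, enc) in enumerate(zip(source_fps, encode_fps)):
        if abs(src - enc) > tolerance:
            problems.append(f"fingerprint mismatch starting at frame {index}")
            break
    return problems
```

Swapped chunks show up as a mismatch at the first frame of the misplaced chunk, while a dropped frame produces both a count mismatch and a divergence at the point where the frame went missing.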

In addition to straightforward encoding, the system calculates multiple full-reference video quality metrics for each output video stream. By automatically generating quality scores for each encode, we can monitor video quality at scale. The metrics also help pinpoint bugs in the system and guide us in finding areas for improving our encode recipes. We will provide more detail on the quality metrics we utilize in our pipeline in a future blog post.
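For readers unfamiliar with the term, a full-reference metric scores an encode by comparing it directly against the source. PSNR is one widely known example, and the sketch below computes it over flat lists of pixel values. It is shown purely to illustrate the category; nothing here implies which metrics the pipeline actually uses, and the function name `psnr` and its list-based interface are assumptions.

```python
import math


def psnr(reference, distorted, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if not reference or len(reference) != len(distorted):
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: no distortion at all
    return 10 * math.log10(max_value ** 2 / mse)
```

Higher values mean the encode is closer to the source. Computing a score like this for every output stream is what makes it possible to track quality across a large catalog without watching each encode.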

Quality of Service

Before we implemented parallel chunked encoding, a 1080p movie could take days to encode, and a failure occurring late in the process would delay the encode even further. With our current pipeline, a title can be fully inspected and encoded at the different profiles and quality representations, with automatic quality control checks, within a few hours. This enables us to stream titles within just a few hours of their original broadcast. We are currently working on further improvements to our system which will allow us to inspect and encode a 1080p source in 30 minutes or less. Note that since the work is done in parallel, processing time is not increased for longer sources.
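The claim that longer sources do not take longer can be checked with back-of-the-envelope arithmetic. The sketch below assumes fixed-length chunks, a constant processing time per chunk, and no scheduling or assembly overhead; the function `wall_clock_minutes` and every number passed to it are hypothetical.

```python
import math


def wall_clock_minutes(duration_min, chunk_min, per_chunk_processing_min, workers):
    """Estimate elapsed time when chunks are processed in parallel waves."""
    chunks = math.ceil(duration_min / chunk_min)
    waves = math.ceil(chunks / workers)  # how many rounds the worker pool needs
    return waves * per_chunk_processing_min
```

Under these assumptions, a 90-minute and a 180-minute source both finish in a single wave as long as there are at least as many workers as chunks. The estimate only grows once the worker pool is smaller than the chunk count, which is where the elasticity of the cloud matters.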

Before automated quality checks were integrated into our system, encoding issues (picture corruption, inserted black frames, frame rate conversion, interlacing artifacts, frozen frames, etc.) could go unnoticed until reported by Netflix members through Customer Support. Not only was this a poor member experience, but triaging these issues was also costly and inefficient, often escalating through many teams before the root cause was found. In addition, encoding failures (for example, due to corrupt sources) required manual intervention and caused long delays in root-causing the failure. With our investment in automated inspection at scale, we detect issues early, whether they stem from a bad source delivery, an implementation bug, or a glitch in one of the cloud instances, and we provide specific and actionable error messages. For a source that passes our inspections, we have an encode reliability of 99.99% or better. When we do find a problem that was not caught by our algorithms, we design new inspections to detect such issues in the future.

In Summary

High quality video streams are essential for delivering a great Netflix experience to our members. We have developed, and continue to improve on, a video ingest and encode pipeline that runs on the cloud reliably and at scale. We designed for automated quality control checks throughout so that we fail fast and detect issues early in the processing chain. Video is processed in parallel segments. This decreases end-to-end processing delay, reduces the required local storage and improves the system’s error resilience. We have invested in integrating video quality metrics into the pipeline so that we can continuously monitor performance and further optimize our encoding.

Our encoding pipeline, combined with the compute power of the Netflix internal spot market, has value outside our day-to-day production operations. We leverage this system to run large-scale video experiments (codec comparisons, encode recipe optimizations, quality metrics design, etc.) which strive to answer questions that are important to delivering the highest quality video streams, and at the same time could benefit the larger video research community.

— by Anne Aaron and David Ronca