Machine Learning: Linear Regression (MATLAB Implementation)

阿新 • Published: 2019-02-01

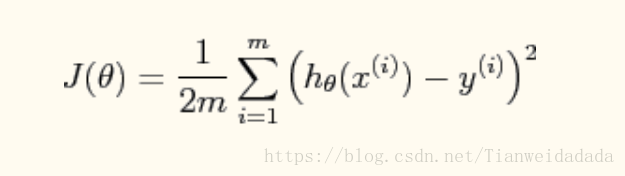

Cost function:

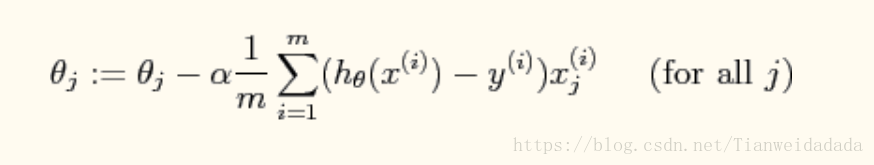
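The formula image is not reproduced as text. Judging from the cost that `linearReg` computes (`J(i) = 1/(2*m)*(sum((h-y).^2))`), it is presumably the halved mean squared error over the m training examples:

$$
J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta\big(x^{(i)}\big) - y^{(i)}\right)^2
$$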

Gradient descent:

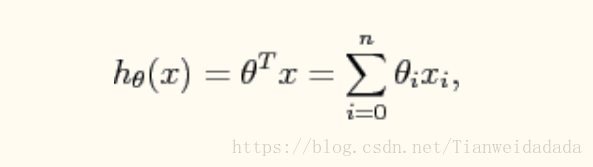
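Likewise, the update performed in `linearReg` (`grad = (1/m).*(x'*(h-y))`, then `theta = theta - alpha*grad`) corresponds to the batch gradient-descent rule below, shown per parameter and in vectorized form, where X is the m × 2 design matrix whose first column is all ones. This is reconstructed from the code rather than transcribed from the image:

$$
\theta_j := \theta_j - \alpha\,\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta\big(x^{(i)}\big) - y^{(i)}\right)x_j^{(i)},
\qquad
\theta := \theta - \frac{\alpha}{m}X^{T}\left(X\theta - y\right)
$$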

Hypothesis function:

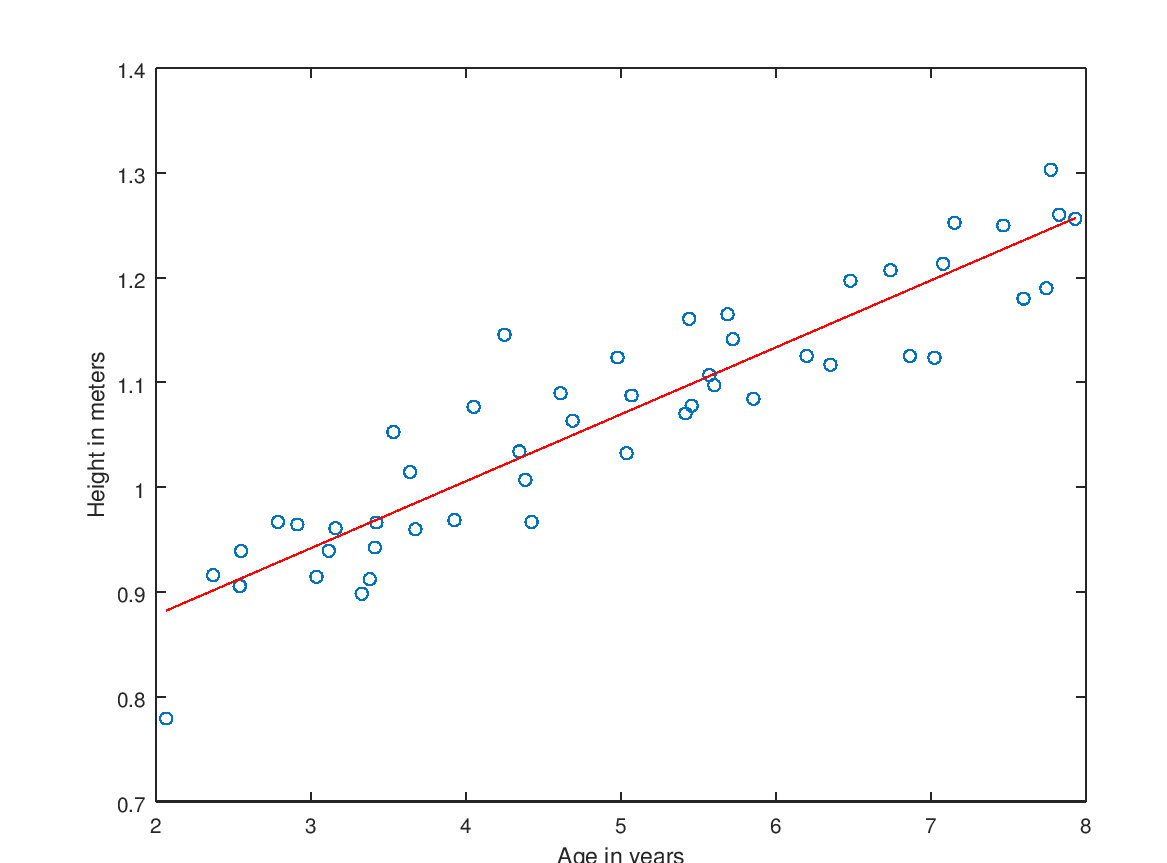
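With a single feature and the bias term x_0 = 1 added as in the code (`x = [ones(m,1),x]`), the hypothesis is presumably the linear model below. MATLAB indexes from 1, so θ_0 and θ_1 are stored as `theta(1)` and `theta(2)`:

$$
h_\theta(x) = \theta^{T}x = \theta_0 x_0 + \theta_1 x_1 = \theta_0 + \theta_1 x_1
$$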

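Here x is age (in years) and y is height (in meters).

The goal is to model how height depends on age, so that height can be predicted from age.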

Code:

```matlab
x = load('ex2x.dat');   % ages
y = load('ex2y.dat');   % heights
[m, n] = size(x);
x = [ones(m,1), x];     % bias term x0 = 1

figure                  % open a new figure window
plot(x(:,2), y, 'o');   % plot the raw data
hold on;
ylabel('Height in meters')
xlabel('Age in years');

inittheta = zeros(n+1, 1);
[theta, J] = linearReg(x, y, inittheta);  % gradient descent: returns theta and the cost at each step
plot(x(:,2), x*theta, 'g');               % plot the fitted line
hold off;
```
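Once theta is learned, a new age must get the same leading 1 before it is multiplied by theta, exactly as the script does with `x = [ones(m,1),x]`. The sketch below assumes hypothetical, noise-free data (`age`, `height`, `new_ages` are illustrative names, not the ex2 files) and uses MATLAB's backslash least-squares solve as a stand-in for `linearReg`, so it runs on its own:

```matlab
% Hypothetical, noise-free data: height = 0.75 + 0.06*age (NOT ex2x.dat/ex2y.dat)
age    = (2:0.5:7)';
height = 0.75 + 0.06 * age;
m = numel(age);
X = [ones(m,1), age];            % same bias column as in the script

theta = X \ height;              % least-squares fit (stand-in for linearReg)

new_ages = [3.5; 7];
pred = [ones(numel(new_ages),1), new_ages] * theta;   % prepend 1, then multiply
disp(pred)                       % 0.96 and 1.17 for this synthetic line
```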

Function file linearReg.m:

```matlab
function [theta, J] = linearReg(x, y, inittheta)
% x already contains the bias column x0 = 1
m = size(x, 1);
theta = inittheta;
MAX_ITR = 1500;                          % 1500 iterations
alpha = 0.07;                            % learning rate
J = zeros(MAX_ITR, 1);
for i = 1:MAX_ITR
    h = x*theta;                         % predictions
    grad = (1/m) .* (x' * (h - y));      % gradient vector
    theta = theta - alpha*grad;
    J(i) = 1/(2*m) * sum((h - y).^2);    % cost at step i (theta before this update)
end
end
```
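Because the cost is a quadratic in theta, gradient descent should converge to the ordinary least-squares solution, which MATLAB's backslash operator computes directly. The sketch below inlines the same loop as `linearReg` (same `alpha = 0.07` and 1500 iterations) on hypothetical synthetic data, since the ex2 files are not included here; `age`, `height` and `theta_ne` are illustrative names:

```matlab
% Hypothetical data (NOT ex2x.dat/ex2y.dat): a line plus a small deterministic wiggle
age    = (2:0.5:7)';
height = 0.75 + 0.06 * age + 0.01 * sin(3 * age);
m = numel(age);
X = [ones(m,1), age];                   % bias column x0 = 1

alpha   = 0.07;                         % same settings as linearReg
MAX_ITR = 1500;
theta = zeros(2,1);
J = zeros(MAX_ITR,1);
for i = 1:MAX_ITR
    h = X * theta;                                        % predictions
    J(i) = 1/(2*m) * sum((h - height).^2);                % cost before this update
    theta = theta - alpha * (1/m) * (X' * (h - height));  % batch gradient step
end

theta_ne = X \ height;                  % closed-form least-squares solution
fprintf('gradient descent: [%.6f %.6f]\n', theta);
fprintf('backslash:        [%.6f %.6f]\n', theta_ne);
```

If the two vectors disagree noticeably on real data, the usual suspects are too few iterations or a learning rate that is too large.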

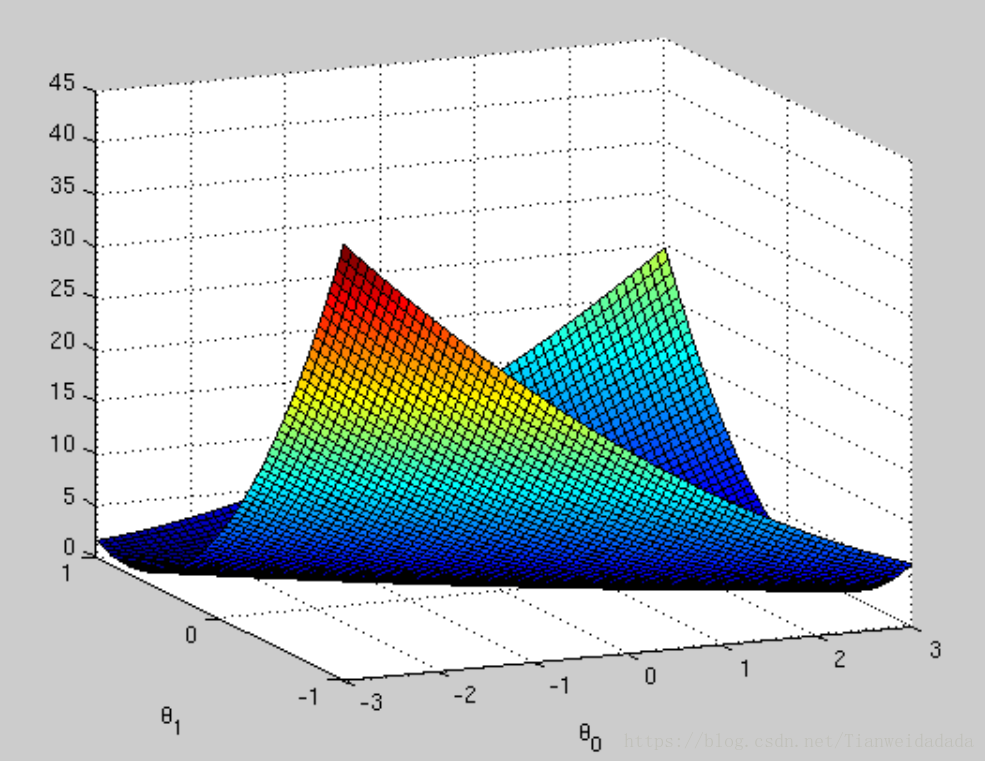

We can also plot how the cost function varies with the two parameters theta(1) and theta(2):

Run the following commands:

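```matlab
J_vals = testJ(x, y);                    % x must already include the bias column
theta0_vals = linspace(-3, 3, 100);      % same grids as inside testJ
theta1_vals = linspace(-1, 1, 100);
J_vals = J_vals';                        % surf expects Z(j,i) at (theta0_vals(i), theta1_vals(j))
figure;
surf(theta0_vals, theta1_vals, J_vals);
hold on;
xlabel('\theta_0'); ylabel('\theta_1');
```

Test function testJ.m: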

```matlab
function J_vals = testJ(x, y)
% Evaluate the cost on a 100 x 100 grid of (theta0, theta1) values
m = size(x, 1);
J_vals = zeros(100, 100);                % initialize J_vals to a 100x100 matrix of 0's
theta0_vals = linspace(-3, 3, 100);
theta1_vals = linspace(-1, 1, 100);
for i = 1:length(theta0_vals)
    for j = 1:length(theta1_vals)
        t = [theta0_vals(i); theta1_vals(j)];
        J_vals(i,j) = 1/(2*m) * sum((x*t - y).^2);
    end
end
end
```
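The double loop in `testJ` can also be written without loops: stack every (theta0, theta1) pair as a column of one matrix and compute all residuals with a single product. The sketch below assumes hypothetical synthetic data and illustrative names (`J_loop`, `J_vec`, `P`); `ndgrid` is used instead of `meshgrid` so that `T0(i,j) = theta0_vals(i)`, matching the `(i,j)` layout of `testJ`:

```matlab
% Hypothetical data (NOT the ex2 files)
age    = (2:0.5:7)';
height = 0.75 + 0.06 * age;
m = numel(age);
X = [ones(m,1), age];

theta0_vals = linspace(-3, 3, 100);
theta1_vals = linspace(-1, 1, 100);

% Loop version, as in testJ
J_loop = zeros(100, 100);
for i = 1:100
    for j = 1:100
        t = [theta0_vals(i); theta1_vals(j)];
        J_loop(i,j) = 1/(2*m) * sum((X*t - height).^2);
    end
end

% Vectorized version: one column of P per (theta0, theta1) pair
[T0, T1] = ndgrid(theta0_vals, theta1_vals);      % T0(i,j) = theta0_vals(i), T1(i,j) = theta1_vals(j)
P = [T0(:)'; T1(:)'];                             % 2 x 10000
R = bsxfun(@minus, X * P, height);                % m x 10000 residuals
J_vec = reshape(sum(R.^2, 1) / (2*m), size(T0));  % back to the 100 x 100 (i,j) layout
```

`bsxfun` is used for the subtraction so the sketch does not depend on implicit expansion; on recent MATLAB or Octave, `X * P - height` works as well.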

How the cost varies with the parameters:

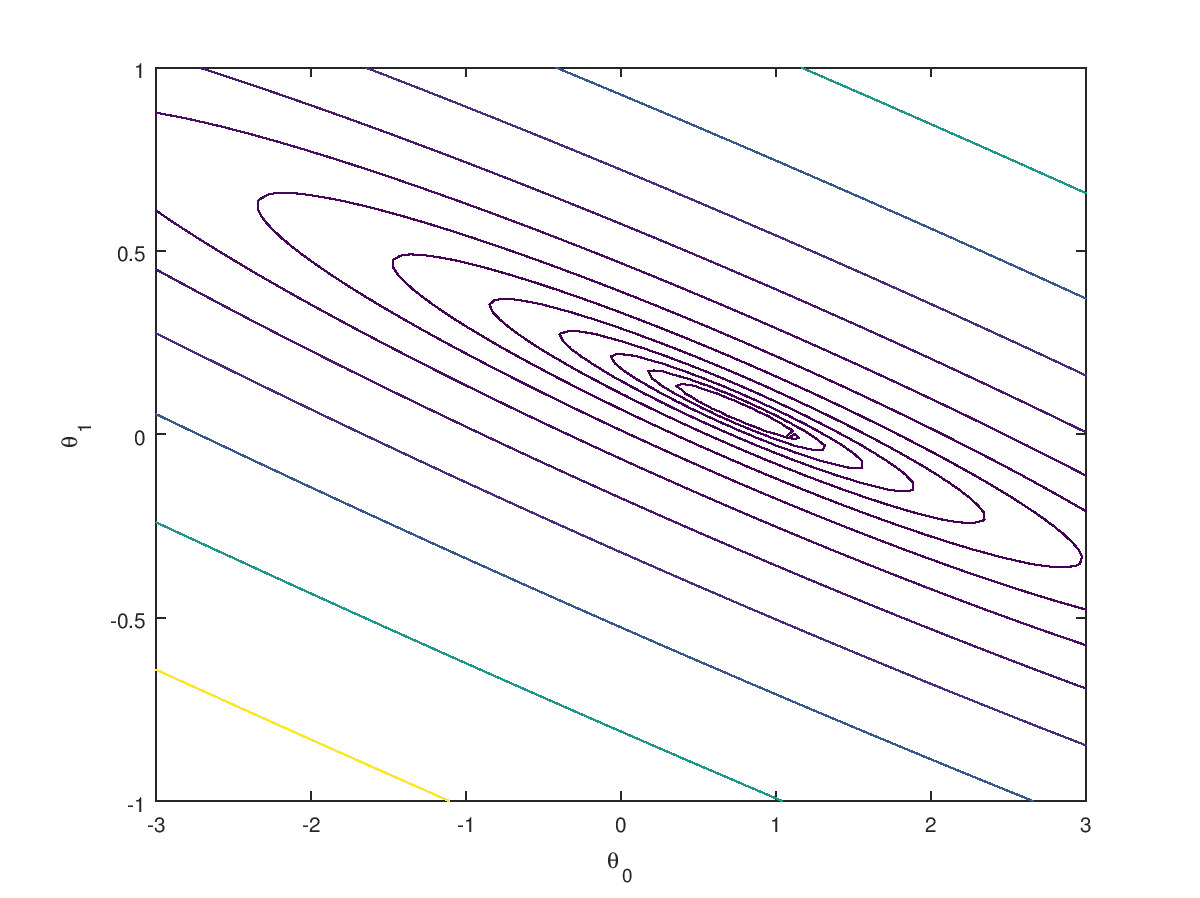
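The surface is a bowl with a single minimum because J is quadratic in theta with Hessian (1/m)XᵀX, which has positive eigenvalues whenever the ages are not all equal. For gradient descent on a quadratic, the same eigenvalues also bound the learning rate: the iteration is stable when α < 2/λ_max. The sketch below checks both facts for hypothetical ages (not ex2x.dat), so it says nothing definitive about the original data:

```matlab
% Hypothetical ages (NOT ex2x.dat)
age = (2:0.5:7)';
m = numel(age);
X = [ones(m,1), age];

H = (X' * X) / m;        % Hessian of J(theta) = 1/(2m)*||X*theta - y||^2
lam = eig(H);            % both positive -> a single bowl-shaped minimum
alpha = 0.07;            % learning rate used in linearReg
fprintf('eigenvalues: %.4f %.4f\n', lam);
fprintf('stable if alpha < 2/max(lam) = %.4f\n', 2/max(lam));
```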

We can also draw a contour plot:

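```matlab
figure;                                  % new window; the surf axes above are still on hold
contour(theta0_vals, theta1_vals, J_vals, logspace(-2, 2, 15))  % J_vals already transposed
xlabel('\theta_0'); ylabel('\theta_1')
```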
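`logspace(-2, 2, 15)` supplies 15 contour levels from 10^-2 to 10^2, equally spaced on a log scale. Because J grows quadratically away from its minimum, log-spaced levels put more contour lines in the flat region near the bottom of the bowl, which evenly spaced levels would leave nearly empty. A quick look at the levels (the names `levels` and `ratio` are illustrative):

```matlab
% Contour levels passed to contour above
levels = logspace(-2, 2, 15);    % same as 10.^linspace(-2, 2, 15)
ratio  = levels(2) / levels(1);  % constant ratio between neighbours: 10^(4/14), about 1.93
disp(levels([1 end]));           % first and last level: 0.01 and 100
```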

Reference: link (includes the data)