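Object Detection with SSD + TensorFlow: Converting Data to TFRecord

Doing deep-learning object detection with TensorFlow is really hard going!

1. Code: https://github.com/balancap/SSD-Tensorflow. Download the code to your local machine.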

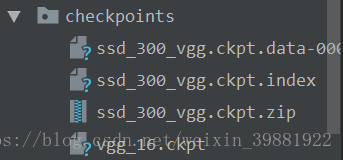

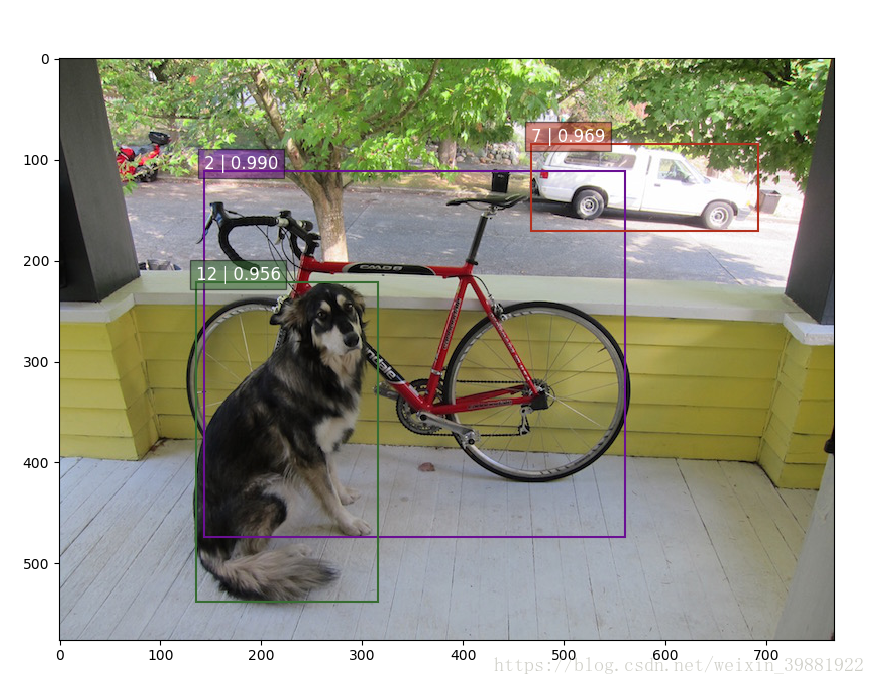

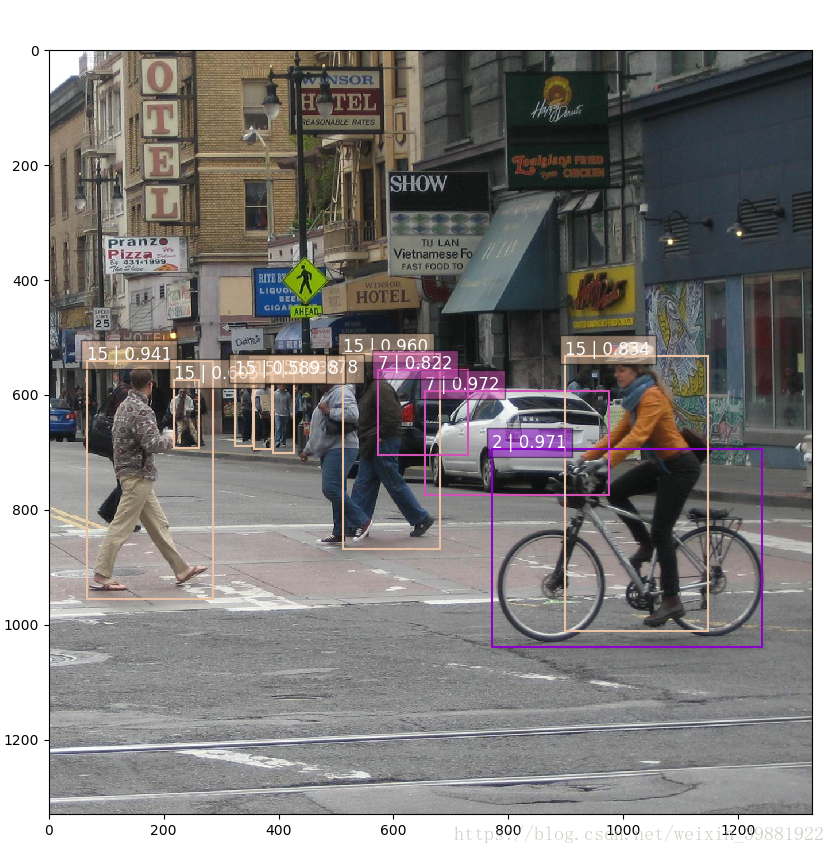

2. Unzip ssd_300_vgg.ckpt.zip into the checkpoints folder.

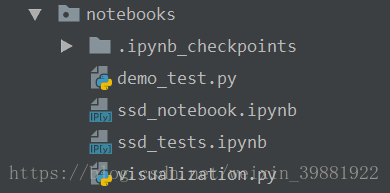
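
If you prefer to do the unzip step from Python instead of a file manager, here is a minimal sketch using only the standard library. The helper name `unzip_checkpoint` and the assumption that the archive sits at `checkpoints/ssd_300_vgg.ckpt.zip` relative to where you run it are mine, not part of the repository.

```python
import zipfile
from pathlib import Path


def unzip_checkpoint(zip_path, target_dir):
    """Extract every member of zip_path into target_dir and return the member names."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
        return sorted(archive.namelist())


if __name__ == "__main__":
    # Assumed location of the archive inside the cloned repository.
    zip_file = Path("checkpoints/ssd_300_vgg.ckpt.zip")
    if zip_file.exists():
        print(unzip_checkpoint(zip_file, "checkpoints"))
    else:
        print("archive not found, adjust zip_file to your own path")
```

The function simply lists whatever the archive contains, so you can check that the extracted file names match the `ckpt_filename` used later.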

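3. Run a quick test. Create demo_test.py in the notebooks folder; it is really just the code copied from ssd_notebook.ipynb, and it runs detection on a single image. I am not familiar with Jupyter, so I converted it to a plain script myself, which feels more convenient.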

    import os
    import math
    import random

    import numpy as np
    import tensorflow as tf
    import cv2

    slim = tf.contrib.slim

    import matplotlib.pyplot as plt
    import matplotlib.image as mpimg

    import sys
    sys.path.append('../')

    from nets import ssd_vgg_300, ssd_common, np_methods
    from preprocessing import ssd_vgg_preprocessing
    from notebooks import visualization

    # TensorFlow session: grow memory when needed. TF, DO NOT USE ALL MY GPU MEMORY!!!
    gpu_options = tf.GPUOptions(allow_growth=True)
    config = tf.ConfigProto(log_device_placement=False, gpu_options=gpu_options)
    isess = tf.InteractiveSession(config=config)

    # Input placeholder.
    net_shape = (300, 300)
    data_format = 'NHWC'
    img_input = tf.placeholder(tf.uint8, shape=(None, None, 3))

    # Evaluation pre-processing: resize to SSD net shape.
    image_pre, labels_pre, bboxes_pre, bbox_img = ssd_vgg_preprocessing.preprocess_for_eval(
        img_input, None, None, net_shape, data_format,
        resize=ssd_vgg_preprocessing.Resize.WARP_RESIZE)
    image_4d = tf.expand_dims(image_pre, 0)

    # Define the SSD model.
    reuse = True if 'ssd_net' in locals() else None
    ssd_net = ssd_vgg_300.SSDNet()
    with slim.arg_scope(ssd_net.arg_scope(data_format=data_format)):
        predictions, localisations, _, _ = ssd_net.net(image_4d, is_training=False, reuse=reuse)

    # Restore SSD model.
    ckpt_filename = '../checkpoints/ssd_300_vgg.ckpt'
    # ckpt_filename = '../checkpoints/VGG_VOC0712_SSD_300x300_ft_iter_120000.ckpt'
    isess.run(tf.global_variables_initializer())
    saver = tf.train.Saver()
    saver.restore(isess, ckpt_filename)

    # SSD default anchor boxes.
    ssd_anchors = ssd_net.anchors(net_shape)


    # Main image processing routine.
    def process_image(img, select_threshold=0.5, nms_threshold=.45, net_shape=(300, 300)):
        # Run SSD network.
        rimg, rpredictions, rlocalisations, rbbox_img = isess.run(
            [image_4d, predictions, localisations, bbox_img],
            feed_dict={img_input: img})

        # Get classes and bboxes from the net outputs.
        rclasses, rscores, rbboxes = np_methods.ssd_bboxes_select(
            rpredictions, rlocalisations, ssd_anchors,
            select_threshold=select_threshold, img_shape=net_shape, num_classes=21, decode=True)

        rbboxes = np_methods.bboxes_clip(rbbox_img, rbboxes)
        rclasses, rscores, rbboxes = np_methods.bboxes_sort(rclasses, rscores, rbboxes, top_k=400)
        rclasses, rscores, rbboxes = np_methods.bboxes_nms(rclasses, rscores, rbboxes, nms_threshold=nms_threshold)
        # Resize bboxes to original image shape. Note: useless for Resize.WARP!
        rbboxes = np_methods.bboxes_resize(rbbox_img, rbboxes)
        return rclasses, rscores, rbboxes


    # Test on some demo image and visualize output.
    # Folder containing the test images.
    path = '../demo/'
    image_names = sorted(os.listdir(path))

    # Index of the image to use within the folder; -1 means the last one.
    img = mpimg.imread(path + image_names[-1])
    rclasses, rscores, rbboxes = process_image(img)

    # visualization.bboxes_draw_on_img(img, rclasses, rscores, rbboxes, visualization.colors_plasma)
    visualization.plt_bboxes(img, rclasses, rscores, rbboxes)


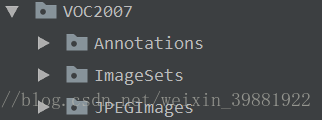

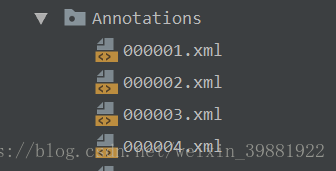

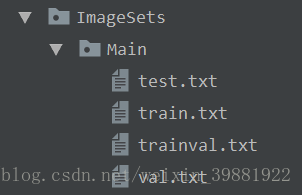

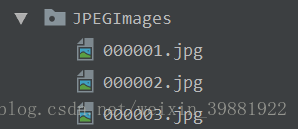
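
The `nms_threshold=.45` argument in `process_image` controls non-maximum suppression: among heavily overlapping boxes, only the highest-scoring one is kept. To make that idea concrete, here is a small NumPy sketch of greedy NMS. It is my own simplified, class-agnostic illustration, not the repository's `np_methods.bboxes_nms`; the function names `iou` and `simple_nms` and the sample boxes are made up.

```python
import numpy as np


def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (ymin, xmin, ymax, xmax)."""
    ymin = np.maximum(box[0], boxes[:, 0])
    xmin = np.maximum(box[1], boxes[:, 1])
    ymax = np.minimum(box[2], boxes[:, 2])
    xmax = np.minimum(box[3], boxes[:, 3])
    inter = np.maximum(ymax - ymin, 0.0) * np.maximum(xmax - xmin, 0.0)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def simple_nms(scores, boxes, nms_threshold=0.45):
    """Return the indices kept by greedy NMS, highest score first."""
    order = np.argsort(-scores)
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Drop every remaining box that overlaps the kept one too much.
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= nms_threshold]
    return keep


scores = np.array([0.9, 0.8, 0.7])
boxes = np.array([
    [0.10, 0.10, 0.50, 0.50],
    [0.12, 0.12, 0.52, 0.52],  # overlaps the first box heavily
    [0.60, 0.60, 0.90, 0.90],  # far away from both
])
print(simple_nms(scores, boxes))  # [0, 2]
```

Lowering the threshold suppresses more aggressively; raising it lets more overlapping boxes through.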

4. Convert your own dataset to VOC2007 format and place it under this project.
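
A VOC2007-style dataset keeps the images in `JPEGImages/` and one XML annotation per image in `Annotations/`. Before converting, it can help to check that your annotations parse the way you expect. The sketch below reads one VOC-style XML with the standard library; the sample file name and box coordinates are invented for illustration, and `parse_voc_annotation` is a hypothetical helper, not a function from the repository.

```python
import xml.etree.ElementTree as ET

# A minimal VOC-style annotation; the file name and box values are made up.
SAMPLE_XML = """
<annotation>
  <filename>000001.jpg</filename>
  <size><width>500</width><height>375</height><depth>3</depth></size>
  <object>
    <name>pantograph</name>
    <difficult>0</difficult>
    <truncated>0</truncated>
    <bndbox><xmin>48</xmin><ymin>240</ymin><xmax>195</xmax><ymax>371</ymax></bndbox>
  </object>
</annotation>
"""


def parse_voc_annotation(xml_text):
    """Return ((height, width, depth), [(class_name, (xmin, ymin, xmax, ymax)), ...])."""
    root = ET.fromstring(xml_text.strip())
    size = root.find("size")
    shape = tuple(int(size.find(tag).text) for tag in ("height", "width", "depth"))
    objects = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        coords = tuple(int(box.find(tag).text) for tag in ("xmin", "ymin", "xmax", "ymax"))
        objects.append((obj.find("name").text, coords))
    return shape, objects


shape, objects = parse_voc_annotation(SAMPLE_XML)
print(shape, objects)
```

Every class name that shows up here has to exist as a key in the label dictionary edited in the next step.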


5. Edit pascalvoc_common.py in the datasets folder and change the training classes to your own.

    # Original
    # VOC_LABELS = {
    #     'none': (0, 'Background'),
    #     'aeroplane': (1, 'Vehicle'),
    #     'bicycle': (2, 'Vehicle'),
    #     'bird': (3, 'Animal'),
    #     'boat': (4, 'Vehicle'),
    #     'bottle': (5, 'Indoor'),
    #     'bus': (6, 'Vehicle'),
    #     'car': (7, 'Vehicle'),
    #     'cat': (8, 'Animal'),
    #     'chair': (9, 'Indoor'),
    #     'cow': (10, 'Animal'),
    #     'diningtable': (11, 'Indoor'),
    #     'dog': (12, 'Animal'),
    #     'horse': (13, 'Animal'),
    #     'motorbike': (14, 'Vehicle'),
    #     'person': (15, 'Person'),
    #     'pottedplant': (16, 'Indoor'),
    #     'sheep': (17, 'Animal'),
    #     'sofa': (18, 'Indoor'),
    #     'train': (19, 'Vehicle'),
    #     'tvmonitor': (20, 'Indoor'),
    # }

    # Modified
    VOC_LABELS = {
        'none': (0, 'Background'),
        'pantograph': (1, 'Vehicle'),
    }

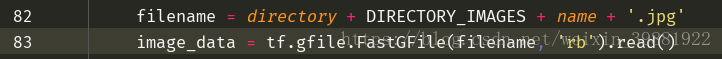
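
The class counts you will change in step 8 follow directly from this dictionary, so it is worth deriving them rather than typing them by hand. A small sketch, using the modified `VOC_LABELS` from above; the variable names are my own, and whether a given setting wants the count with or without the background class is something to check in each file.

```python
VOC_LABELS = {
    'none': (0, 'Background'),
    'pantograph': (1, 'Vehicle'),
}

# Count including the 'none' background entry, and count of real object classes.
num_classes_with_background = len(VOC_LABELS)
num_object_classes = num_classes_with_background - 1

# Reverse lookup from numeric id to class name, handy when reading predictions.
id_to_name = {idx: name for name, (idx, _category) in VOC_LABELS.items()}

# The ids should be contiguous starting at 0, otherwise the class count is misleading.
ids_are_contiguous = sorted(id_to_name) == list(range(len(VOC_LABELS)))

print(num_classes_with_background, num_object_classes, id_to_name, ids_are_contiguous)
```

For the single-class pantograph dataset this gives 2 classes with background and 1 without.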

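6. Convert the image data to tfrecords format. Edit pascalvoc_to_tfrecords.py in the datasets folder and change the file read mode on line 83 to 'rb'. If your images are not in .jpg format, you can also change the image type there.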

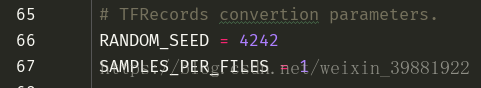

In addition, line 67 can be changed to set how many images go into one tfrecords file.

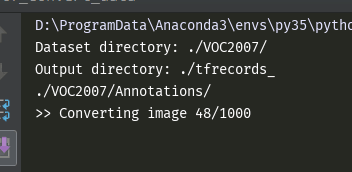
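
Two things are going on in this step: image files must be read as raw bytes (hence `'rb'`), and the images are split across several tfrecords files. The sketch below illustrates both with plain Python. The shard naming pattern `name_000.tfrecord` is an assumption on my part, and `tfrecord_shard_names`, the image count of 450, and the 200-per-file value are purely illustrative.

```python
import math
import os
import tempfile


def tfrecord_shard_names(num_images, samples_per_file, output_dir, name):
    """List the shard files needed to hold num_images (naming pattern is assumed)."""
    num_files = math.ceil(num_images / samples_per_file)
    return ['%s/%s_%03d.tfrecord' % (output_dir, name, idx) for idx in range(num_files)]


# 450 images at 200 per file need three shards, the last one only partly full.
names = tfrecord_shard_names(450, 200, './tfrecords_', 'voc_2007_train')
print(names)

# Binary mode returns the file content as bytes, untouched by any text decoding.
with tempfile.TemporaryDirectory() as tmp:
    fake_jpeg = os.path.join(tmp, 'fake.jpg')
    with open(fake_jpeg, 'wb') as f:
        f.write(b'\xff\xd8\xff\xe0')  # the first bytes of a typical JPEG file
    with open(fake_jpeg, 'rb') as f:
        data = f.read()
print(type(data).__name__, data[:2] == b'\xff\xd8')
```

In text mode, Python 3 would try to decode those bytes as characters, which is not what you want for image data.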

7. Run tf_convert_data.py. It needs to be given some arguments:

Linux: in the SSD-Tensorflow-master folder, create tf_convert_data.sh. Its content begins by setting the dataset directory:

    DATASET_DIR=./VOC2007/   # folder holding the VOC data (VOC directory layout unchanged)

Windows + PyCharm: open Run --> Edit Configurations, enter the following in Script parameters, then run tf_convert_data.py:

    --dataset_name=pascalvoc --dataset_dir=./VOC2007/ --output_name=voc_2007_train --output_dir=./tfrecords_

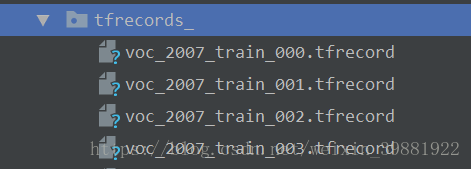
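
If you would rather launch the conversion from a Python script on either platform, the same four arguments can be assembled into a command list. This is only a sketch: `build_convert_command` is a hypothetical helper of mine, the flag values are the ones from the PyCharm configuration above, and the block only prints the command instead of running it.

```python
import shlex
import sys


def build_convert_command(dataset_dir, output_dir,
                          output_name='voc_2007_train',
                          dataset_name='pascalvoc',
                          script='tf_convert_data.py'):
    """Build the argument list for running the conversion script."""
    return [
        sys.executable, script,
        '--dataset_name=' + dataset_name,
        '--dataset_dir=' + dataset_dir,
        '--output_name=' + output_name,
        '--output_dir=' + output_dir,
    ]


cmd = build_convert_command('./VOC2007/', './tfrecords_')
print(' '.join(shlex.quote(part) for part in cmd))

# To actually run it from the repository root (creating the output folder first
# does no harm):
#   import os, subprocess
#   os.makedirs('./tfrecords_', exist_ok=True)
#   subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems with paths that contain spaces.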

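The first screenshot below shows what you will see while the tfrecords files are being generated; the second shows what you will see once generation has finished.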

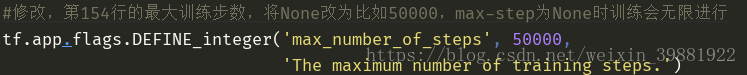

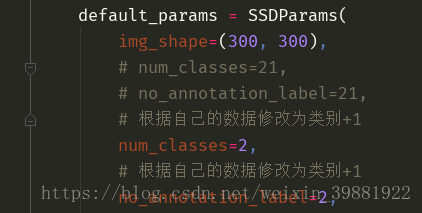
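
To double-check the result without loading TensorFlow, you can count the records in a generated file by walking the TFRecord framing: each record is an 8-byte little-endian length, a 4-byte CRC of the length, the payload, and a 4-byte CRC of the payload. The sketch below does exactly that and nothing more; it skips the CRCs rather than verifying them, `count_tfrecord_records` is my own helper name, and the demo file is fake data written only to exercise the function.

```python
import os
import struct
import tempfile


def count_tfrecord_records(path):
    """Count records by walking the TFRecord framing (CRCs are skipped, not verified)."""
    count = 0
    with open(path, 'rb') as f:
        while True:
            header = f.read(8)
            if not header:
                break
            if len(header) < 8:
                raise ValueError('truncated length header')
            (length,) = struct.unpack('<Q', header)
            # Length CRC (4 bytes), payload, payload CRC (4 bytes).
            body = f.read(4 + length + 4)
            if len(body) < 4 + length + 4:
                raise ValueError('truncated record')
            count += 1
    return count


def _fake_record(payload):
    """Frame a payload like a TFRecord entry, with zeroed CRC fields (demo only)."""
    return struct.pack('<Q', len(payload)) + b'\x00' * 4 + payload + b'\x00' * 4


with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, 'demo.tfrecord')
    with open(demo_path, 'wb') as f:
        for payload in (b'a', b'bb', b'ccc'):
            f.write(_fake_record(payload))
    demo_count = count_tfrecord_records(demo_path)
print(demo_count)  # 3
```

Summing the counts over all generated files should give the number of images in your dataset.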

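8. Changes needed before training with train_ssd_network.py

The network parameters are configured in train_ssd_network.py; if they need changing, edit them in that file.

Other places that need changes: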

a. nets/ssd_vgg_300.py (because this is the network architecture being used): change the class count on lines 87 and 88.

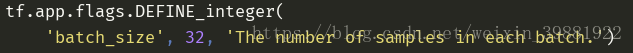

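b. train_ssd_network.py: change the class count on line 120, as well as GPU memory usage, learning rate, batch_size, and so on.

c. eval_ssd_network.py: change the class count on line 66.

d. datasets/pascalvoc_2007.py: modify the whole file according to your own training data.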
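
For item d, the numbers you need are essentially per-class counts over your own annotations. As a rough sketch, assuming the file wants an (images, objects) pair per class plus a total (please compare against the structure you actually find in datasets/pascalvoc_2007.py), the counts could be computed like this. `voc_statistics` and the sample label lists are hypothetical.

```python
from collections import Counter


def voc_statistics(labels_per_image, class_names):
    """Per class: (images containing it, object instances), plus an overall total."""
    images = Counter()
    objects = Counter()
    for labels in labels_per_image:
        objects.update(labels)      # every instance counts
        images.update(set(labels))  # each image counts once per class
    stats = {'none': (0, 0)}
    for name in class_names:
        stats[name] = (images[name], objects[name])
    stats['total'] = (len(labels_per_image), sum(objects[name] for name in class_names))
    return stats


# One list of object class names per annotated image (made-up data).
labels_per_image = [['pantograph'], ['pantograph', 'pantograph'], ['pantograph']]
stats = voc_statistics(labels_per_image, ['pantograph'])
print(stats)
```

The per-image label lists can come from parsing each XML file in `Annotations/`.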

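9. Train the network, starting from the pretrained vgg16 model

Download the pretrained vgg16 model, unzip it, and put it in the checkpoints folder. If you cannot find vgg_16.ckpt, you can download it from the link below.

Link: https://pan.baidu.com/s/1diWbdJdjVbB3AWN99406nA Password: ge3x

As before, if you are a Linux user you can create a new .sh file containing: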

    DATASET_DIR=./tfrecords_/
    TRAIN_DIR=./train_model/
    CHECKPOINT_PATH=./checkpoints/vgg_16.ckpt

    # Flag notes are kept above the command, because a comment placed after a
    # trailing backslash breaks the line continuation.
    #   train_dir                   where the trained model is saved
    #   dataset_dir                 where the tfrecords data is stored
    #   dataset_name                prefix of the dataset name
    #   model_name                  name of the model to load
    #   checkpoint_path             path of the checkpoint to load
    #   checkpoint_model_scope      scope name inside the loaded checkpoint
    #   save_summaries_secs         save logs every 60 s
    #   save_interval_secs          save the model every 600 s
    #   weight_decay                weight-decay coefficient for regularization
    #   optimizer                   optimizer to use
    #   learning_rate               learning rate
    #   learning_rate_decay_factor  decay factor of the learning rate
    #   gpu_memory_fraction         fraction of GPU memory to use
    python3 ./train_ssd_network.py \
        --train_dir=${TRAIN_DIR} \
        --dataset_dir=${DATASET_DIR} \
        --dataset_name=pascalvoc_2007 \
        --dataset_split_name=train \
        --model_name=ssd_300_vgg \
        --checkpoint_path=${CHECKPOINT_PATH} \
        --checkpoint_model_scope=vgg_16 \
        --checkpoint_exclude_scopes=ssd_300_vgg/conv6,ssd_300_vgg/conv7,ssd_300_vgg/block8,ssd_300_vgg/block9,ssd_300_vgg/block10,ssd_300_vgg/block11,ssd_300_vgg/block4_box,ssd_300_vgg/block7_box,ssd_300_vgg/block8_box,ssd_300_vgg/block9_box,ssd_300_vgg/block10_box,ssd_300_vgg/block11_box \
        --trainable_scopes=ssd_300_vgg/conv6,ssd_300_vgg/conv7,ssd_300_vgg/block8,ssd_300_vgg/block9,ssd_300_vgg/block10,ssd_300_vgg/block11,ssd_300_vgg/block4_box,ssd_300_vgg/block7_box,ssd_300_vgg/block8_box,ssd_300_vgg/block9_box,ssd_300_vgg/block10_box,ssd_300_vgg/block11_box \
        --save_summaries_secs=60 \
        --save_interval_secs=600 \
        --weight_decay=0.0005 \
        --optimizer=adam \
        --learning_rate=0.001 \
        --learning_rate_decay_factor=0.94 \
        --batch_size=24 \
        --gpu_memory_fraction=0.9
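
The two long scope flags are the same list of SSD-specific layers: they are excluded when restoring from the VGG-16 checkpoint and they are the layers being trained. Typing that list twice by hand invites typos, so here is a small sketch that builds the string from the layer names. The names `SCOPE_PREFIX`, `NEW_LAYERS` and `scopes_flag` are mine; the layer names themselves are copied from the command above.

```python
SCOPE_PREFIX = 'ssd_300_vgg'

# Layers listed in both --checkpoint_exclude_scopes and --trainable_scopes.
NEW_LAYERS = [
    'conv6', 'conv7',
    'block8', 'block9', 'block10', 'block11',
    'block4_box', 'block7_box', 'block8_box',
    'block9_box', 'block10_box', 'block11_box',
]


def scopes_flag(prefix, layers):
    """Join layer names into the comma-separated 'prefix/layer' form the flags use."""
    return ','.join('%s/%s' % (prefix, layer) for layer in layers)


scopes = scopes_flag(SCOPE_PREFIX, NEW_LAYERS)
print('--checkpoint_exclude_scopes=' + scopes)
print('--trainable_scopes=' + scopes)
```

You can paste the two printed lines straight into the .sh file or the PyCharm parameters.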

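If you are running on Windows + PyCharm, besides setting the parameters under Run --> Edit Configurations as above, you can also open the Terminal and run the command there by typing: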

    python ./train_ssd_network.py --train_dir=./train_model/ --dataset_dir=./tfrecords_/ --dataset_name=pascalvoc_2007 --dataset_split_name=train --model_name=ssd_300_vgg --checkpoint_path=./checkpoints/vgg_16.ckpt --checkpoint_model_scope=vgg_16 --checkpoint_exclude_scopes=ssd_300_vgg/conv6,ssd_300_vgg/conv7,ssd_300_vgg/block8,ssd_300_vgg/block9,ssd_300_vgg/block10,ssd_300_vgg/block11,ssd_300_vgg/block4_box,ssd_300_vgg/block7_box,ssd_300_vgg/block8_box,ssd_300_vgg/block9_box,ssd_300_vgg/block10_box,ssd_300_vgg/block11_box --trainable_scopes=ssd_300_vgg/conv6,ssd_300_vgg/conv7,ssd_300_vgg/block8,ssd_300_vgg/block9,ssd_300_vgg/block10,ssd_300_vgg/block11,ssd_300_vgg/block4_box,ssd_300_vgg/block7_box,ssd_300_vgg/block8_box,ssd_300_vgg/block9_box,ssd_300_vgg/block10_box,ssd_300_vgg/block11_box --save_summaries_secs=60 --save_interval_secs=600 --weight_decay=0.0005 --optimizer=adam --learning_rate=0.001 --learning_rate_decay_factor=0.94 --batch_size=24 --gpu_memory_fraction=0.9
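
To get a feel for what `--learning_rate=0.001` together with `--learning_rate_decay_factor=0.94` means over time, here is a tiny sketch of a staircase exponential decay. It assumes that kind of schedule; the schedule type and decay interval the training script really uses depend on flags not shown in this post, and the `decay_steps=100` value is purely hypothetical.

```python
def decayed_learning_rate(step, base_lr=0.001, decay_factor=0.94, decay_steps=100):
    """Staircase exponential decay: multiply by decay_factor once every decay_steps steps."""
    return base_lr * decay_factor ** (step // decay_steps)


for step in (0, 99, 100, 1000):
    print(step, decayed_learning_rate(step))
```

With a factor of 0.94 the rate shrinks slowly, by 6% at each decay boundary.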