fastrtext︱R語言使用facebook的fasttext快速文字分類演算法

FastText是Facebook開發的一款快速文字分類器,提供簡單而高效的文字分類和表徵學習的方法,不過這個專案其實是有兩部分組成的。理論介紹可見部落格:NLP︱高階詞向量表達(二)——FastText(簡述、學習筆記)

本輪新更新的fastrtext,同樣繼承了兩個功能:訓練詞向量 + 文字分類模型訓練

來源:

相關文件地址:

相關部落格:

.

一、安裝

1.安裝

# From Cran

install.packages("fastrtext")

# From Github

# install.packages("devtools") .

2.主函式介紹

The following arguments are mandatory:

-input training file path

-output output file path

The following arguments are optional:

-verbose verbosity level [2]

The following arguments for the dictionary are optional:

-minCount minimal number of word occurences [5 也就是execute()時候,可以輸入的函式是啥。

-dim,向量長度,預設100維;

-wordNgrams,詞型別,一般可以選擇2,二元組

-verbose,輸出資訊的詳細程度,0-2,不同層次的詳細程度(0代表啥也不顯示)。

-lr:學習速率[0.1]

-lrUpdateRate:更改學習率的更新速率[100]

-dim :字向量大小[100]

-ws:上下文視窗的大小[5]

-epoch:迴圈數[5]

-neg:抽樣數量[5]

-loss:損失函式 {ns,hs,softmax} [ns]

-thread:執行緒數[12]

-pretrainedVectors:用於監督學習的預培訓字向量

-saveOutput:輸出引數是否應該儲存[0]

.

二、官方案例一 —— 文字分類模型訓練

2.1 載入資料並訓練

library(fastrtext)

data("train_sentences")

data("test_sentences")

# prepare data

tmp_file_model <- tempfile()

train_labels <- paste0("__label__", train_sentences[,"class.text"])

train_texts <- tolower(train_sentences[,"text"])

train_to_write <- paste(train_labels, train_texts)

train_tmp_file_txt <- tempfile()

writeLines(text = train_to_write, con = train_tmp_file_txt)

test_labels <- paste0("__label__", test_sentences[,"class.text"])

test_texts <- tolower(test_sentences[,"text"])

test_to_write <- paste(test_labels, test_texts)

# learn model

execute(commands = c("supervised", "-input", train_tmp_file_txt, "-output", tmp_file_model, "-dim", 20, "-lr", 1, "-epoch", 20, "-wordNgrams", 2, "-verbose", 1))

其中可以看到與之前熟知的機器學習相關模型不同,其模型執行是通過execute來得到,並儲存。

其中:

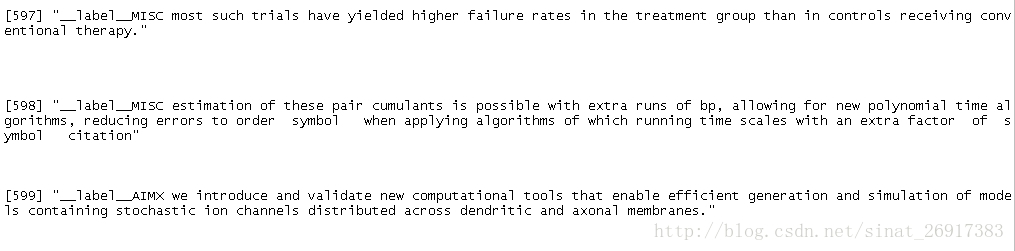

來看看輸入資料長啥樣子:

資料是char格式的,之前__label__XXX 是該文字的標籤,然後空格接上文字內容。

執行結果:

##

Read 0M words

## Number of words: 5060

## Number of labels: 15

##

Progress: 100.0% words/sec/thread: 1457520 lr: 0.000000 loss: 0.300770 eta: 0h0m.

2.2 驗證集+執行模型

# load model

model <- load_model(tmp_file_model)

# prediction are returned as a list with words and probabilities

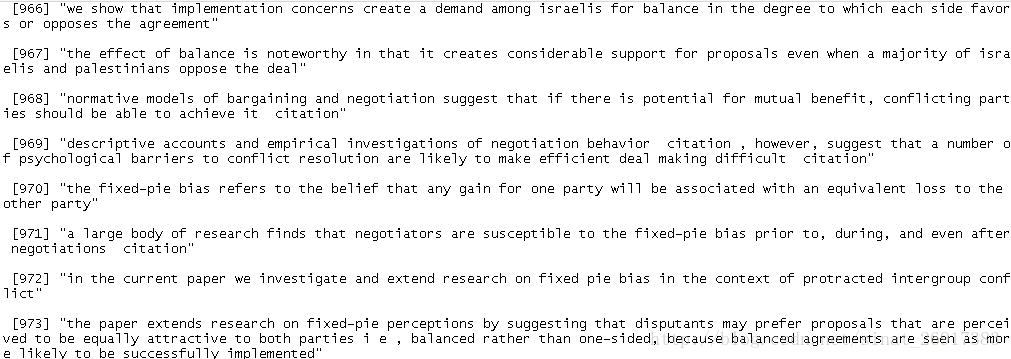

predictions <- predict(model, sentences = test_to_write)load_model模型檔案位置,test_to_write是驗證文字,長這樣(其實跟訓練集長一樣):

顯示:

print(head(predictions, 5))

## [[1]]

## __label__OWNX

## 0.9980469

##

## [[2]]

## __label__MISC

## 0.9863281

##

## [[3]]

## __label__MISC

## 0.9921875

##

## [[4]]

## __label__OWNX

## 0.9082031

##

## [[5]]

## __label__AIMX

## 0.984375.

2.3 模型驗證

計算準確率

# Compute accuracy

mean(sapply(predictions, names) == test_labels)計算海明距離

# because there is only one category by observation, hamming loss will be the same

get_hamming_loss(as.list(test_labels), predictions)

## [1] 0.8316667.

2.4 一些小函式

檢視監督模型的label有哪些,get_labels函式。

如果已經訓練好模型,放了一段時間,又不知道里面有哪些標籤,可以這麼找一下。

model <- load_model(model_test_path)

print(head(get_labels(model), 5))

#> [1] "__label__MISC" "__label__OWNX" "__label__AIMX" "__label__CONT"

#> [5] "__label__BASE"檢視模型的引數都用了啥get_parameters:

model <- load_model(model_test_path)

print(head(get_parameters(model), 5))

#> $learning_rate

#> [1] 0.05

#>

#> $learning_rate_update

#> [1] 100

#>

#> $dim

#> [1] 20

#>

#> $context_window_size

#> [1] 5

#>

#> $epoch

#> [1] 20

#> .

三、官方案例二 —— 計算詞向量

3.1 載入資料 + 訓練

library(fastrtext)

data("train_sentences")

data("test_sentences")

texts <- tolower(train_sentences[,"text"])

tmp_file_txt <- tempfile()

tmp_file_model <- tempfile()

writeLines(text = texts, con = tmp_file_txt)

execute(commands = c("skipgram", "-input", tmp_file_txt, "-output", tmp_file_model, "-verbose", 1))commands 裡面的引數是:”skipgram”,也就是計算詞向量,跟word2vec一致。

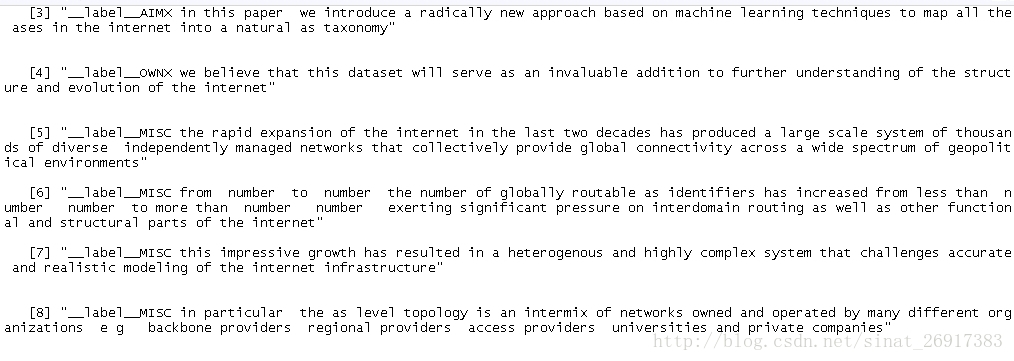

輸入的文字內容,不用帶標籤資訊:

.

3.2 詞向量

model <- load_model(tmp_file_model)載入詞向量的檔案,載入的是bin檔案

# test word extraction

dict <- get_dictionary(model)

print(head(dict, 5))

## [1] "the" "</s>" "of" "to" "and"dict 就是詞向量的字典,

# print vector

print(get_word_vectors(model, c("time", "timing")))

顯示一下,詞向量的維度。

.

3.3 計算詞向量距離——get_word_distance

# test word distance

get_word_distance(model, "time", "timing")

## [,1]

## [1,] 0.02767485.

3.4 找出最近鄰詞——get_nn

get_nn引數只有三個,最後數字代表選擇前多少個近義詞。

library(fastrtext)

model_test_path <- system.file("extdata", "model_unsupervised_test.bin", package = "fastrtext")

model <- load_model(model_test_path)

get_nn(model, "time", 10)

#> times size indicate access success allowing feelings

#> 0.6120564 0.5041215 0.4941387 0.4777856 0.4719051 0.4696053 0.4652924

#> dictator amino accuracies

#> 0.4595046 0.4582702 0.4535145 .

3.5 詞的類比——get_analogies

library(fastrtext)

model_test_path <- system.file("extdata", "model_unsupervised_test.bin", package = "fastrtext")

model <- load_model(model_test_path)

get_analogies(model, "experience", "experiences", "result")

#> results

#> 0.726607 類比關係式:

get_analogies(model, w1, w2, w3, k = 1)

w1 - w2 + w3

也即是:

experience - experiences + result