iOS partial image blur, similar to Meitu Xiuxiu (美圖秀秀)

The code is available at:

http://www.demodashi.com/demo/14277.html

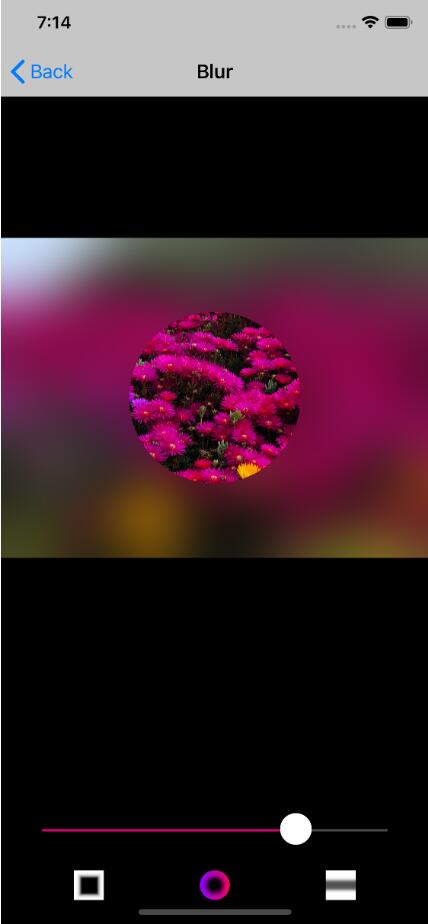

Demo

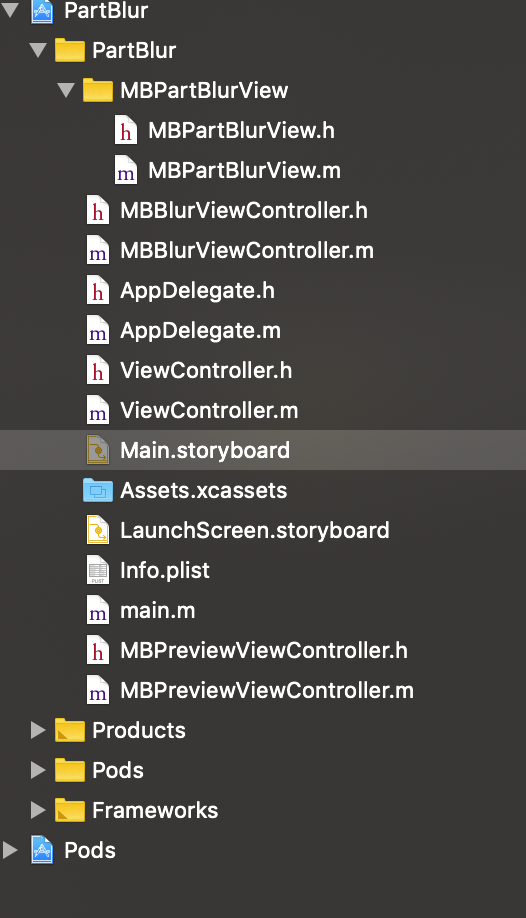

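Code structure

A screenshot of the project structure follows:

The core source of this module is the MBPartBlurView directory. To use it in your own project, copy that directory in directly. Everything else is test code.

Usage guide

How to use

The comments in the header file MBPartBlurView.h are detailed, and the view is simple to use. Three shapes are currently supported: circle, square, and rectangle.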

```objc
@interface MBPartBlurView : UIView

/** The original image. */
@property (nonatomic, strong) UIImage *rawImage;

/** The processed image. */
@property (nonatomic, strong, readonly) UIImage *currentImage;

@property (nonatomic, assign) CGFloat blurRadius;

/** The area that is not blurred. */
@property (nonatomic, assign) CGRect excludeBlurArea;

/** Whether the non-blurred area is circular (currently only squares are supported). */
@property (nonatomic, assign) BOOL excludeAreaCircle;

@end
```

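Implementation

How partial blur is implemented

Partial blur is really two images stacked on top of each other: a fully blurred image at the bottom and the unblurred image on top. A mask is applied to the top image, which is then laid over the bottom one.

a. The bottom image lives on the layer of imageView. The top image lives on rawImageLayer.

maskLayer is the mask layer and serves as the mask of rawImageLayer. They are held by the following three properties: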

```objc
@property (nonatomic, strong) UIImageView *imageView;
@property (nonatomic, strong) CALayer *rawImageLayer;
@property (nonatomic, strong) CAShapeLayer *maskLayer;
```

b. Initialization. The layer hierarchy is clear at a glance:

```objc
- (void)commonInit
{
    [self.imageView addGestureRecognizer:self.tapGesture];
    [self.imageView addGestureRecognizer:self.pinchGesture];
    [self.brushView addGestureRecognizer:self.panGesture];

    [self addSubview:self.imageView];
    [self.imageView.layer addSublayer:self.rawImageLayer];
    [self.imageView addSubview:self.brushView];
    self.rawImageLayer.mask = self.maskLayer;

    [self setExcludeAreaCircle:YES];
    [self setExcludeBlurArea:CGRectMake(100, 100, 100, 100)];
    [self showBrush:NO];
}
```
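Conceptually, masking the sharp layer over the blurred one amounts to a per-pixel choice between two images. The following is a minimal sketch of that idea in plain C (which also compiles as Objective-C), not the module's actual rendering path, which Core Animation performs on the GPU. The names `inside_circle` and `compose_partial_blur` are hypothetical, and a single grayscale channel is assumed for brevity.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: returns 1 when the center of pixel (x, y) lies inside
   the circle inscribed in the square area at (ax, ay) with side `side`. */
static int inside_circle(int x, int y, int ax, int ay, int side) {
    double r = side / 2.0;
    double dx = (x + 0.5) - (ax + r);
    double dy = (y + 0.5) - (ay + r);
    return dx * dx + dy * dy <= r * r;
}

/* Compose one grayscale frame: sharp pixels inside the mask,
   blurred pixels everywhere else. */
static void compose_partial_blur(const uint8_t *raw, const uint8_t *blurred,
                                 uint8_t *out, int width, int height,
                                 int ax, int ay, int side) {
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            size_t i = (size_t)y * width + x;
            out[i] = inside_circle(x, y, ax, ay, side) ? raw[i] : blurred[i];
        }
    }
}
```

In the real view, the mask is a CAShapeLayer path, so changing the shape or its transform updates the visible sharp region without recomputing either image.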

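1. Image blur algorithm

The algorithm is Gaussian blur [1], which is widely used in the industry. The code below approximates it with three successive box convolutions from vImage (part of the Accelerate framework).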
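Repeating a box filter several times is a common way to approximate a Gaussian kernel. The sketch below, in plain C (which also compiles as Objective-C), illustrates this on a 1-D signal and mirrors the way the blur level is mapped to an odd kernel size in this article's method. The function names `box_size_for_level`, `box_blur_1d`, and `triple_box_blur_1d` are hypothetical; the real code works on 2-D ARGB buffers through vImage.

```c
/* Map a blur level in [0, 1] to an odd kernel width, using the same
   arithmetic as blurryImage:withBlurLevel: in this article. */
static int box_size_for_level(double blur) {
    int box = (int)(blur * 100);
    if (blur > 0.5) {
        box += 50;
    }
    return box - (box % 2) + 1; /* force an odd size */
}

/* One box-filter pass over a 1-D signal with edge extension:
   out-of-range samples repeat the nearest edge value. `box` must be odd. */
static void box_blur_1d(const double *src, double *dst, int n, int box) {
    int half = box / 2;
    for (int i = 0; i < n; i++) {
        double sum = 0.0;
        for (int k = -half; k <= half; k++) {
            int j = i + k;
            if (j < 0) j = 0;
            if (j >= n) j = n - 1;
            sum += src[j];
        }
        dst[i] = sum / box;
    }
}

/* Three passes, ping-ponging between buffers. The result lands in `out`;
   `signal` is overwritten and used as scratch space. */
static void triple_box_blur_1d(double *signal, double *tmp, double *out,
                               int n, int box) {
    box_blur_1d(signal, tmp, n, box);
    box_blur_1d(tmp, signal, n, box);
    box_blur_1d(signal, out, n, box);
}
```

Feeding a single spike through `triple_box_blur_1d` yields a symmetric, bell-shaped bump whose total equals the spike's value, which is the behavior expected of a normalized Gaussian-like kernel.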

```objc
#import <Accelerate/Accelerate.h>

- (UIImage *)blurryImage:(UIImage *)image withBlurLevel:(CGFloat)blur
{
    NSData *imageData = UIImageJPEGRepresentation(image, 1); // convert to JPEG
    UIImage *destImage = [UIImage imageWithData:imageData];

    // Map the blur level to a box kernel size
    int boxSize = (int)(blur * 100);
    if (blur > 0.5) {
        boxSize += 50;
    }
    boxSize = boxSize - (boxSize % 2) + 1; // the kernel size must be odd

    CGImageRef img = destImage.CGImage;
    vImage_Buffer inBuffer, outBuffer, outBuffer2;
    vImage_Error error;

    // Create vImage_Buffer with data from CGImageRef
    CGDataProviderRef inProvider = CGImageGetDataProvider(img);
    CFDataRef inBitmapData = CGDataProviderCopyData(inProvider);

    inBuffer.width = CGImageGetWidth(img);
    inBuffer.height = CGImageGetHeight(img);
    inBuffer.rowBytes = CGImageGetBytesPerRow(img);
    inBuffer.data = (void *)CFDataGetBytePtr(inBitmapData);

    // Create the output buffer and a third buffer for intermediate processing
    void *pixelBuffer = malloc(CGImageGetBytesPerRow(img) * CGImageGetHeight(img));
    void *pixelBuffer2 = malloc(CGImageGetBytesPerRow(img) * CGImageGetHeight(img));
    if (pixelBuffer == NULL || pixelBuffer2 == NULL) {
        // Bail out instead of convolving into a NULL buffer
        NSLog(@"No pixelbuffer");
        free(pixelBuffer);
        free(pixelBuffer2);
        CFRelease(inBitmapData);
        return nil;
    }

    outBuffer.data = pixelBuffer;
    outBuffer.width = CGImageGetWidth(img);
    outBuffer.height = CGImageGetHeight(img);
    outBuffer.rowBytes = CGImageGetBytesPerRow(img);

    outBuffer2.data = pixelBuffer2;
    outBuffer2.width = CGImageGetWidth(img);
    outBuffer2.height = CGImageGetHeight(img);
    outBuffer2.rowBytes = CGImageGetBytesPerRow(img);

    // Perform the convolution three times: in -> out2 -> in -> out
    error = vImageBoxConvolve_ARGB8888(&inBuffer, &outBuffer2, NULL, 0, 0, boxSize, boxSize, NULL, kvImageEdgeExtend);
    if (error) {
        NSLog(@"error from convolution %ld", error);
    }
    error = vImageBoxConvolve_ARGB8888(&outBuffer2, &inBuffer, NULL, 0, 0, boxSize, boxSize, NULL, kvImageEdgeExtend);
    if (error) {
        NSLog(@"error from convolution %ld", error);
    }
    error = vImageBoxConvolve_ARGB8888(&inBuffer, &outBuffer, NULL, 0, 0, boxSize, boxSize, NULL, kvImageEdgeExtend);
    if (error) {
        NSLog(@"error from convolution %ld", error);
    }

    CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
    CGContextRef ctx = CGBitmapContextCreate(outBuffer.data,
                                             outBuffer.width,
                                             outBuffer.height,
                                             8,
                                             outBuffer.rowBytes,
                                             colorSpace,
                                             (CGBitmapInfo)kCGImageAlphaNoneSkipLast);
    CGImageRef imageRef = CGBitmapContextCreateImage(ctx);
    UIImage *returnImage = [UIImage imageWithCGImage:imageRef];

    // Clean up
    CGContextRelease(ctx);
    CGColorSpaceRelease(colorSpace);
    free(pixelBuffer);
    free(pixelBuffer2);
    CFRelease(inBitmapData);
    CGImageRelease(imageRef);

    return returnImage;
}
```

2. Gesture handling

The main work is the zoom gesture. In the UIPinchGestureRecognizer handler - (void)handlePinch:(UIPinchGestureRecognizer *)gestureRecognizer, when state is UIGestureRecognizerStateChanged, the core scaling function - (void)scaleMask:(CGFloat)scale is called. The code is as follows:

```objc
- (void)scaleMask:(CGFloat)scale
{
    // Largest scale that still keeps brushView inside imageView
    CGFloat mS = MIN(self.imageView.frame.size.width / self.brushView.frame.size.width,
                     self.imageView.frame.size.height / self.brushView.frame.size.height);
    CGFloat s = MIN(scale, mS);

    [CATransaction setDisableActions:YES];

    CGAffineTransform zoomTransform = CGAffineTransformScale(self.brushView.layer.affineTransform, s, s);
    self.brushView.layer.affineTransform = zoomTransform;

    zoomTransform = CGAffineTransformScale(self.maskLayer.affineTransform, s, s);
    self.maskLayer.affineTransform = zoomTransform;

    [CATransaction setDisableActions:NO];
}
```

In this function, updating the affineTransform of maskLayer grows or shrinks the area covered by the mask. On screen, the unblurred region grows or shrinks accordingly.
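The clamping step in scaleMask: is plain arithmetic and can be isolated from UIKit. A minimal sketch in plain C (which also compiles as Objective-C) follows; the function name `clamped_scale` and its parameters are hypothetical, and the sizes are assumed to be the current on-screen sizes of the image view and the brush view.

```c
/* Limit a requested pinch scale so the brush never grows past the image.
   The upper bound is the smaller of the two per-axis size ratios. */
static double clamped_scale(double requested,
                            double image_w, double image_h,
                            double brush_w, double brush_h) {
    double max_w = image_w / brush_w;
    double max_h = image_h / brush_h;
    double max_scale = max_w < max_h ? max_w : max_h;
    return requested < max_scale ? requested : max_scale;
}
```

For a 300 x 200 image and a 100 x 100 brush, a requested scale of 3 is reduced to 2, because the height ratio is the tighter limit. Scales below the limit, including values under 1 that shrink the brush, pass through unchanged.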

3. Handling automatic rotation of camera photos
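Besides normalizing the orientation, the setter in this section sizes and centers the image view inside the container. That layout arithmetic can be sketched in plain C (which also compiles as Objective-C) as follows; the `Frame` type and the `fitted_frame` function are hypothetical names introduced only for illustration.

```c
typedef struct {
    double x, y, w, h;
} Frame;

/* Landscape or square images take the full view width, portrait images
   take the full view height; the other side follows the aspect ratio.
   The result is then centered in the view. */
static Frame fitted_frame(double view_w, double view_h,
                          double img_w, double img_h) {
    Frame f;
    if (img_w >= img_h) {
        f.w = view_w;
        f.h = img_h / img_w * f.w;
    } else {
        f.h = view_h;
        f.w = img_w / img_h * f.h;
    }
    f.x = 0.5 * (view_w - f.w);
    f.y = 0.5 * (view_h - f.h);
    return f;
}
```

In a 300 x 400 view, a 200 x 100 image gets the frame (0, 125, 300, 150) and a 100 x 200 image gets (50, 0, 200, 400). Note that this rule picks the axis from the image's orientation alone, so when the view is much narrower or shorter than the image's proportions, the fitted frame can extend beyond the view.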

```objc
- (void)setRawImage:(UIImage *)rawImage
{
    // Images from the photo album carry a 90-degree rotation
    UIImage *newImage = [self fixedOrientation:rawImage];
    _rawImage = newImage;

    CGFloat w, h;
    if (newImage.size.width >= newImage.size.height) {
        w = self.frame.size.width;
        h = newImage.size.height / newImage.size.width * w;
    }
    else {
        h = self.frame.size.height;
        w = newImage.size.width / newImage.size.height * h;
    }

    // Center the image view, then match the sharp layer to it
    self.imageView.frame = CGRectMake(0.5 * (self.frame.size.width - w), 0.5 * (self.frame.size.height - h), w, h);
    self.rawImageLayer.frame = self.imageView.bounds;

    self.imageView.image = [self blurryImage:newImage withBlurLevel:self.blurRadius];
    self.rawImageLayer.contents = (id)newImage.CGImage;
}
```

Supplement

Nothing yet.

[1]: http://www.ruanyifeng.com/blog/2012/11/gaussian_blur.html


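Note: The copyright of this article belongs to the author. It is published by demo大師 on the author's behalf. Reprinting is not permitted without the author's authorization.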
