(OpenCV C++) Digital Image Processing: Image Segmentation and Compression

阿新 • Published: 2019-01-04

We cover digital image processing in three parts:

I. Image noise estimation

II. Image segmentation

III. Image compression and decompression

I. Image Noise Estimation

• Common noise models:

• 1. Gaussian noise;

• 2. Rayleigh noise;

• 3. Gamma noise;

• 4. Exponential noise;

• 5. Uniform noise;

• 6. Impulse (salt-and-pepper) noise.
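To test the filters that follow, it helps to be able to synthesize these noise types. Below is a minimal sketch that uses only the C++ standard library; the `NoiseType` enum, the helpers `sampleNoise` and `applyImpulse`, and every parameter value are hypothetical choices for illustration. The Rayleigh sampler assumes the parameterization p(z) = (2/b)(z - a)exp(-(z - a)²/b) for z ≥ a and inverts its CDF F(z) = 1 - exp(-(z - a)²/b).

```cpp
#include <cassert>
#include <cmath>
#include <random>

// Hypothetical noise types; impulse noise is handled separately because it replaces pixels
enum class NoiseType { Gaussian, Rayleigh, Gamma, Exponential, Uniform };

// Draws one additive noise sample (parameters chosen only for illustration)
double sampleNoise(NoiseType type, std::mt19937 &rng)
{
    std::uniform_real_distribution<double> u01(0.0, 1.0);
    switch (type)
    {
    case NoiseType::Gaussian:     // mean 0, standard deviation 10
        return std::normal_distribution<double>(0.0, 10.0)(rng);
    case NoiseType::Rayleigh:     // a = 0, b = 400, inverse-transform sampling
    {
        const double a = 0.0, b = 400.0;
        return a + std::sqrt(-b * std::log(1.0 - u01(rng)));  // 1 - u is in (0, 1]
    }
    case NoiseType::Gamma:        // shape 2, scale 5 (an Erlang distribution), mean 10
        return std::gamma_distribution<double>(2.0, 5.0)(rng);
    case NoiseType::Exponential:  // rate 0.1, mean 10
        return std::exponential_distribution<double>(0.1)(rng);
    case NoiseType::Uniform:      // uniform on [-20, 20)
        return std::uniform_real_distribution<double>(-20.0, 20.0)(rng);
    }
    return 0.0;
}

// Impulse noise: with probability pa the pixel becomes 0 (pepper),
// with probability pb it becomes 255 (salt), otherwise it is unchanged.
unsigned char applyImpulse(unsigned char pixel, double pa, double pb, std::mt19937 &rng)
{
    double r = std::uniform_real_distribution<double>(0.0, 1.0)(rng);
    if (r < pa) return 0;
    if (r < pa + pb) return 255;
    return pixel;
}
```

Additive models are applied as `noisy = clamp(pixel + sampleNoise(...))`, while impulse noise overwrites pixels, which is why it gets its own function.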

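The plots in the figure show, respectively:

• a. the Gaussian noise distribution;

• b. the Rayleigh noise distribution;

• c. the gamma noise distribution;

• d. the exponential noise distribution;

• e. the uniform noise distribution;

• f. the impulse noise distribution.

[Figure: distributions of the six noise models]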

The library functions we will use are:

```cpp
// Mean (box) filter
CV_EXPORTS_W void blur(InputArray src, OutputArray dst, Size ksize,
                       Point anchor = Point(-1, -1), int borderType = BORDER_DEFAULT);
// Gaussian filter
CV_EXPORTS_W void GaussianBlur(InputArray src, OutputArray dst, Size ksize,
                               double sigmaX, double sigmaY = 0,
                               int borderType = BORDER_DEFAULT);
// Median filter
CV_EXPORTS_W void medianBlur(InputArray src, OutputArray dst, int ksize);
// Bilateral filter
CV_EXPORTS_W void bilateralFilter(InputArray src, OutputArray dst, int d,
                                  double sigmaColor, double sigmaSpace,
                                  int borderType = BORDER_DEFAULT);
// Returns the optimal (fastest) DFT size for a vector of the given length
CV_EXPORTS_W int getOptimalDFTSize(int vecsize);
// Discrete Fourier transform
CV_EXPORTS_W void dft(InputArray src, OutputArray dst, int flags = 0, int nonzeroRows = 0);
// Histogram computation
CV_EXPORTS void calcHist(const Mat* images, int nimages, const int* channels,
                         InputArray mask, OutputArray hist, int dims,
                         const int* histSize, const float** ranges,
                         bool uniform = true, bool accumulate = false);
```

We prepare five filters: a mean filter, a Gaussian filter, a median filter, a bilateral filter, and a frequency-domain Gaussian low-pass filter (mainly intended for periodic noise).
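Why the median filter is the usual choice for impulse noise, while the mean filter only smears it, shows up on a tiny example. The sketch below is plain C++ on a row-major `std::vector<unsigned char>` with replicated borders; `filter3x3`, `medianOf9` and `meanOf9` are hypothetical names, and this is not how OpenCV implements `medianBlur` or `blur` internally.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <vector>

using Image = std::vector<unsigned char>;  // row-major, width w, height h

// Applies a 3x3 neighborhood operation, replicating border pixels
template <typename Op>
Image filter3x3(const Image &src, int w, int h, Op op)
{
    Image dst(src.size());
    for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++)
        {
            std::array<int, 9> win;
            int n = 0;
            for (int dy = -1; dy <= 1; dy++)
                for (int dx = -1; dx <= 1; dx++)
                {
                    int yy = std::max(0, std::min(h - 1, y + dy));  // replicate the border
                    int xx = std::max(0, std::min(w - 1, x + dx));
                    win[n++] = src[yy * w + xx];
                }
            dst[y * w + x] = static_cast<unsigned char>(op(win));
        }
    return dst;
}

// Median of the 9 window values: an isolated outlier never reaches the middle position
int medianOf9(std::array<int, 9> win)
{
    std::nth_element(win.begin(), win.begin() + 4, win.end());
    return win[4];
}

// Rounded mean of the 9 window values: an outlier pulls every neighbor toward it
int meanOf9(const std::array<int, 9> &win)
{
    int sum = 0;
    for (int v : win) sum += v;
    return (sum + 4) / 9;
}
```

On a flat 5×5 patch of gray level 100 with one salt pixel (255) in the center, the median output is 100 everywhere, while the mean output rises to 117 in the whole 3×3 neighborhood of the impulse.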


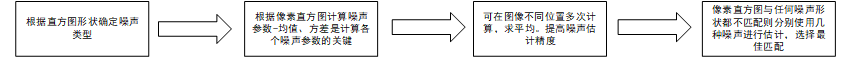

The noise-estimation workflow is as follows:

[Figure: noise-estimation workflow]
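One common way to turn a histogram into model parameters is to pick a small patch of roughly constant intensity, compute the mean μ and variance σ² of its gray levels, and solve for the parameters of the candidate model. For Rayleigh noise with p(z) = (2/b)(z - a)exp(-(z - a)²/b), the mean is a + √(πb/4) and the variance is b(4 - π)/4, so b = 4σ²/(4 - π) and a = μ - √(πb/4). The sketch below assumes the patch is already available as a plain vector of gray levels; `PatchStats`, `patchStats` and `estimateRayleigh` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct PatchStats { double mean, variance; };

// Mean and population variance of the gray levels in a (roughly flat) patch
PatchStats patchStats(const std::vector<double> &patch)
{
    double sum = 0, sumSq = 0;
    for (double v : patch) { sum += v; sumSq += v * v; }
    double mean = sum / patch.size();
    return { mean, sumSq / patch.size() - mean * mean };
}

// Inverts mean = a + sqrt(pi*b/4) and variance = b*(4 - pi)/4 for the Rayleigh model
void estimateRayleigh(const PatchStats &s, double &a, double &b)
{
    const double pi = std::acos(-1.0);
    b = 4.0 * s.variance / (4.0 - pi);
    a = s.mean - std::sqrt(pi * b / 4.0);
}
```

Gaussian noise needs no inversion (μ and σ² are its parameters); for other models the same idea applies with their own mean and variance formulas.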

The corresponding code is as follows:

```cpp
/* Mean filter */
Mat Blurefilterfunction(Mat &img)
{
    namedWindow("Mean filter [result]", WINDOW_AUTOSIZE);
    Mat out;
    blur(img, out, Size(7, 7));  // 7x7 averaging kernel
    imshow("Mean filter [result]", out);
    return out;
}

/* Gaussian filter */
Mat GaussianBlurfilterfunction(Mat &img)
{
    namedWindow("Gaussian filter [result]", WINDOW_AUTOSIZE);
    Mat out;
    GaussianBlur(img, out, Size(3, 3), 0, 0);  // 3x3 kernel; sigma = 0 lets OpenCV derive it from the size
    imshow("Gaussian filter [result]", out);
    return out;
}

/* Median filter */
Mat MedianBlurfilterfunction(Mat &img)
{
    namedWindow("Median filter [result]", WINDOW_AUTOSIZE);
    Mat out;
    medianBlur(img, out, 7);  // 7x7 aperture
    imshow("Median filter [result]", out);
    return out;
}

/* Bilateral filter */
Mat BilateralBlurfilterfunction(Mat &img)
{
    namedWindow("Bilateral filter [result]", WINDOW_AUTOSIZE);
    Mat out;
    // d = 25 (neighborhood diameter), sigmaColor = 50, sigmaSpace = 12 (integer division 25 / 2)
    bilateralFilter(img, out, 25, 25 * 2, 25 / 2);
    imshow("Bilateral filter [result]", out);
    return out;
}

//**************************************
// Frequency-domain filtering, using a Gaussian low-pass filter as the example
//**************************************
Mat gaussianlbrf(Mat scr, float sigma);  // builds the Gaussian low-pass transfer function
Mat freqfilt(Mat scr, Mat blur);         // filters an image in the frequency domain

// Expects a single-channel image, e.g. imread("p3-00-01.tif", IMREAD_GRAYSCALE)
Mat FrequencyDomainGaussFiltering(Mat &input)
{
    // Optimal DFT sizes, kept even so that the quadrant swap in freqfilt covers every row and column
    int w = getOptimalDFTSize(input.cols);
    while (w % 2) w = getOptimalDFTSize(w + 1);
    int h = getOptimalDFTSize(input.rows);
    while (h % 2) h = getOptimalDFTSize(h + 1);

    Mat padded;  // zero-pad the border up to the DFT size
    copyMakeBorder(input, padded, 0, h - input.rows, 0, w - input.cols,
                   BORDER_CONSTANT, Scalar::all(0));
    padded.convertTo(padded, CV_32FC1);  // convert to float

    Mat gaussianKernel = gaussianlbrf(padded, 30);          // Gaussian low-pass filter, sigma = 30
    Mat out = freqfilt(padded, gaussianKernel);             // frequency-domain filtering
    out = out(Rect(0, 0, input.cols, input.rows)).clone();  // crop the padding away
    imshow("Result, sigma = 30", out);
    return out;
}

//***************** Gaussian low-pass filter *****************
Mat gaussianlbrf(Mat scr, float sigma)
{
    Mat gaussianBlur(scr.size(), CV_32FC1);
    // 2*sigma^2: the smaller sigma is, the narrower the filter, the more
    // high frequencies are removed and the smoother the result
    float d0 = 2 * sigma * sigma;
    for (int i = 0; i < scr.rows; i++)
    {
        for (int j = 0; j < scr.cols; j++)
        {
            // Squared distance from the center of the spectrum (pow needs float arguments here)
            float d = pow(float(i - scr.rows / 2), 2) + pow(float(j - scr.cols / 2), 2);
            gaussianBlur.at<float>(i, j) = expf(-d / d0);  // H(u,v) = exp(-D^2 / (2 sigma^2))
        }
    }
    imshow("Gaussian low-pass filter", gaussianBlur);
    return gaussianBlur;
}

//***************** Frequency-domain filtering *****************
Mat freqfilt(Mat scr, Mat blur)
{
    //*********************** DFT ***********************
    // Two single-channel CV_32F planes: the real part and a zero imaginary part
    Mat plane[] = { scr, Mat::zeros(scr.size(), CV_32FC1) };
    Mat complexIm;
    merge(plane, 2, complexIm);  // merge into one 2-channel (complex) matrix
    dft(complexIm, complexIm);   // in-place Fourier transform

    //*********************** Centering ***********************
    split(complexIm, plane);  // separate the real and imaginary parts
    // Swap diagonal quadrants so that the zero frequency moves to the center
    int cx = plane[0].cols / 2;
    int cy = plane[0].rows / 2;
    Mat temp;
    for (int k = 0; k < 2; k++)  // k = 0: real part, k = 1: imaginary part
    {
        Mat q0(plane[k], Rect(0, 0, cx, cy));    // top-left
        Mat q1(plane[k], Rect(cx, 0, cx, cy));   // top-right
        Mat q2(plane[k], Rect(0, cy, cx, cy));   // bottom-left
        Mat q3(plane[k], Rect(cx, cy, cx, cy));  // bottom-right
        q0.copyTo(temp); q3.copyTo(q0); temp.copyTo(q3);  // top-left <-> bottom-right
        q1.copyTo(temp); q2.copyTo(q1); temp.copyTo(q2);  // top-right <-> bottom-left
    }

    //*********** Multiply the DFT by the filter ***********
    Mat blur_r, blur_i, BLUR;
    multiply(plane[0], blur, blur_r);  // real part x filter, element-wise
    multiply(plane[1], blur, blur_i);  // imaginary part x filter, element-wise
    Mat plane1[] = { blur_r, blur_i };
    merge(plane1, 2, BLUR);            // recombine real and imaginary parts

    //*********** Spectrum of the original image ***********
    // The 2-D DFT is complex: channel 0 is the real part, channel 1 the imaginary part
    magnitude(plane[0], plane[1], plane[0]);
    plane[0] += Scalar::all(1);  // log(1 + |F|) compresses the dynamic range for display
    log(plane[0], plane[0]);
    normalize(plane[0], plane[0], 1, 0, NORM_MINMAX);  // scale to [0, 1] for display
    imshow("Spectrum of the original image", plane[0]);

    idft(BLUR, BLUR);    // the inverse DFT is complex as well
    split(BLUR, plane);  // separate the channels
    // Filtering the shifted spectrum multiplies the result by (-1)^(x+y);
    // taking the magnitude removes that sign
    magnitude(plane[0], plane[1], plane[0]);
    normalize(plane[0], plane[0], 1, 0, NORM_MINMAX);  // scale to [0, 1] for display
    return plane[0];
}

/* Draw a 1-D histogram (expects an 8-bit single-channel image) */
void Drawing_one_dimensionalhistogram(Mat &img)
{
    // Convert a color input to grayscale first if needed:
    // cvtColor(img, img, COLOR_BGR2GRAY);
    Mat dstHist;
    int dims = 1;
    float hranges[] = { 0, 256 };         // the upper bound is exclusive, so 256 keeps level 255
    const float *ranges[] = { hranges };  // must be const
    int size = 256;
    int channels = 0;
    // Compute the histogram
    calcHist(&img, 1, &channels, Mat(), dstHist, dims, &size, ranges);

    int scale = 1;
    Mat dstImage(size, size * scale, CV_8U, Scalar(0));
    // Smallest and largest bin counts
    double minValue = 0;
    double maxValue = 0;
    minMaxLoc(dstHist, &minValue, &maxValue, 0, 0);

    // Draw the bins; the tallest one reaches 90% of the image height
    int hpt = saturate_cast<int>(0.9 * size);
    for (int i = 0; i < 256; i++)
    {
        float binValue = dstHist.at<float>(i);
        int realValue = saturate_cast<int>(binValue * hpt / maxValue);
        rectangle(dstImage, Point(i * scale, size - 1),
                  Point((i + 1) * scale - 1, size - realValue), Scalar(255));
    }
    imshow("1-D histogram of the image", dstImage);
}
```

For example, here are the histograms of the following images:

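[Figures: test images and their histograms]

From top to bottom: Rayleigh noise (periodic noise), Gaussian noise, and uniform noise (periodic noise).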

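First, we filter the Rayleigh-noise image in the spatial domain; the results are shown below:

[Figures: spatial-domain filtering results for the Rayleigh-noise image]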

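Next, we filter it in the frequency domain; the results are shown below:

[Figures: frequency-domain filtering results for the Rayleigh-noise image]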
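The frequency-domain code builds the transfer function H(u,v) = exp(-D²(u,v)/(2σ²)), with D measured from the center of the spectrum, and swaps quadrants so that the zero frequency sits in that center. The standalone sketch below reproduces both steps on a plain row-major array; `gaussianLowpass` and `fftshift` are hypothetical names, and like the quadrant swap in `freqfilt` it assumes even dimensions.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

// H(u, v) = exp(-D^2 / (2 sigma^2)), D measured from the center (rows/2, cols/2)
std::vector<float> gaussianLowpass(int rows, int cols, float sigma)
{
    std::vector<float> H(rows * cols);
    const float d0 = 2 * sigma * sigma;
    for (int i = 0; i < rows; i++)
        for (int j = 0; j < cols; j++)
        {
            float du = float(i - rows / 2), dv = float(j - cols / 2);
            H[i * cols + j] = std::exp(-(du * du + dv * dv) / d0);
        }
    return H;
}

// Swaps diagonal quadrants (even sizes assumed), moving element (0, 0) to (rows/2, cols/2)
template <typename T>
void fftshift(std::vector<T> &a, int rows, int cols)
{
    int cy = rows / 2, cx = cols / 2;
    for (int i = 0; i < cy; i++)       // each top-half element is swapped exactly once
        for (int j = 0; j < cols; j++)
            std::swap(a[i * cols + j], a[(i + cy) * cols + (j + cx) % cols]);
}
```

Because H is multiplied with the shifted spectrum, its peak (gain 1 at zero frequency) has to sit at the array center, which is exactly what the quadrant swap arranges.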

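For Gaussian noise, a Gaussian filter is enough, but its parameters need tuning; the results are shown below:

[Figures: Gaussian filtering results for the Gaussian-noise image]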
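The Gaussian filter above passes sigmaX = sigmaY = 0, in which case OpenCV derives sigma from the kernel size; the `getGaussianKernel` documentation gives sigma = 0.3·((ksize - 1)·0.5 - 1) + 0.8. Tuning therefore means choosing the kernel size and/or an explicit sigma. The sketch below computes that default and a normalized 1-D kernel in plain C++; `defaultSigma` and `gaussianKernel1D` are hypothetical names, and OpenCV itself may use fixed tables for small kernels, so its exact weights can differ.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Default sigma used when sigma <= 0 (formula from the getGaussianKernel documentation)
double defaultSigma(int ksize)
{
    return 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8;
}

// Normalized 1-D Gaussian kernel of odd size; the 2-D kernel is its outer product
std::vector<double> gaussianKernel1D(int ksize, double sigma)
{
    if (sigma <= 0) sigma = defaultSigma(ksize);
    std::vector<double> k(ksize);
    double sum = 0;
    int c = ksize / 2;
    for (int i = 0; i < ksize; i++)
    {
        double x = i - c;
        k[i] = std::exp(-x * x / (2 * sigma * sigma));
        sum += k[i];
    }
    for (double &v : k) v /= sum;  // weights sum to 1, so flat regions keep their gray level
    return k;
}
```

With ksize = 3 the default sigma is 0.8, a very mild blur; for stronger Gaussian noise a larger kernel (and hence a larger derived sigma) is usually needed.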

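The last one, uniform noise, is also a kind of periodic noise here, so we filter it in the frequency domain; the results are shown below:

[Figures: frequency-domain filtering results for the uniform-noise image]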

II. Image Segmentation

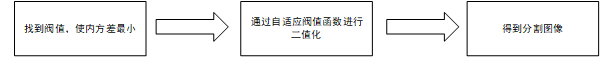

Flowchart of Otsu segmentation:

[Figure: Otsu segmentation flowchart]
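Otsu's method picks the threshold T that maximizes the between-class variance σ_B²(T) = w0·w1·(μ0 - μ1)², where w0 and w1 are the fractions of pixels in the two classes (gray levels 0..T and T+1..255) and μ0, μ1 their mean gray levels. A compact standalone version that works directly on a 256-bin histogram and updates the class sums incrementally might look like this; `otsuThreshold` is a hypothetical name.

```cpp
#include <array>
#include <cassert>

// Returns the T maximizing w0 * w1 * (mu0 - mu1)^2,
// where class 0 = gray levels [0, T] and class 1 = [T + 1, 255].
int otsuThreshold(const std::array<double, 256> &hist)
{
    double total = 0, totalSum = 0;
    for (int g = 0; g < 256; g++) { total += hist[g]; totalSum += g * hist[g]; }

    double n0 = 0, sum0 = 0, best = -1;
    int T = 0;
    for (int t = 0; t < 255; t++)
    {
        n0 += hist[t];        // pixels in class 0
        sum0 += t * hist[t];  // gray-level sum of class 0
        double n1 = total - n0;
        if (n0 == 0) continue;  // class 0 still empty: try the next threshold
        if (n1 == 0) break;     // class 1 stays empty for all larger thresholds
        double mu0 = sum0 / n0, mu1 = (totalSum - sum0) / n1;
        double w0 = n0 / total, w1 = n1 / total;
        double var = w0 * w1 * (mu0 - mu1) * (mu0 - mu1);
        if (var > best) { best = var; T = t; }
    }
    return T;
}
```

Pixels with gray level greater than T then become foreground, which matches `THRESH_BINARY` with that threshold.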

The library function we use is:

```cpp
// Thresholding
CV_EXPORTS_W double threshold(InputArray src, OutputArray dst,
                              double thresh, double maxval, int type);
```

The corresponding code is as follows:

```cpp
/* Compute the Otsu threshold */
int OtsuAlgThreshold(const Mat image)
{
    if (image.channels() != 1)
    {
        cout << "Please input Gray-image!" << endl;
        return 0;
    }
    int T = 0;            // Otsu threshold
    double varValue = 0;  // largest between-class variance found so far
    double w0 = 0;        // fraction of foreground pixels
    double w1 = 0;        // fraction of background pixels
    double u0 = 0;        // mean gray level of the foreground
    double u1 = 0;        // mean gray level of the background
    double Histogram[256] = { 0 };  // index = gray level, value = number of pixels with that level
    int Histogram1[256] = { 0 };    // copy used only for drawing
    uchar *data = image.data;
    double totalNum = image.rows * image.cols;  // total number of pixels

    // Build the histogram (rows and cols are not hoisted, for clarity)
    for (int i = 0; i < image.rows; i++)
    {
        for (int j = 0; j < image.cols; j++)
        {
            Histogram[data[i * image.step + j]]++;
            Histogram1[data[i * image.step + j]]++;
        }
    }

    //*********** Draw the histogram ***********
    Mat image1(255, 255, CV_8UC3, Scalar::all(0));
    for (int i = 0; i < 255; i++)
    {
        Histogram1[i] = Histogram1[i] % 200;  // display only: keeps bars below 200 px (tall bins wrap)
        line(image1, Point(i, 235), Point(i, 235 - Histogram1[i]), Scalar(255, 0, 0), 1, 8, 0);
        if (i % 50 == 0)
        {
            putText(image1, to_string(i), Point(i, 250), 1, 1, Scalar(0, 0, 255));
        }
    }

    // Try every threshold i: background = gray levels [0, i], foreground = [i + 1, 255]
    for (int i = 0; i < 255; i++)
    {
        // Reset the statistics for each candidate threshold
        w1 = 0; u1 = 0; w0 = 0; u0 = 0;

        //*********** Background statistics ***********
        for (int j = 0; j <= i; j++)
        {
            w1 += Histogram[j];      // number of background pixels
            u1 += j * Histogram[j];  // sum of background gray levels
        }
        if (w1 == 0)  // empty background: try the next threshold
        {
            continue;
        }
        u1 = u1 / w1;        // mean background gray level
        w1 = w1 / totalNum;  // fraction of background pixels

        //*********** Foreground statistics ***********
        for (int k = i + 1; k < 256; k++)
        {
            w0 += Histogram[k];      // number of foreground pixels
            u0 += k * Histogram[k];  // sum of foreground gray levels
        }
        if (w0 == 0)  // empty foreground: no larger threshold can do better
        {
            break;
        }
        u0 = u0 / w0;        // mean foreground gray level
        w0 = w0 / totalNum;  // fraction of foreground pixels

        //*********** Between-class variance ***********
        double varValueI = w0 * w1 * (u1 - u0) * (u1 - u0);
        if (varValue < varValueI)
        {
            varValue = varValueI;
            T = i;
        }
    }
    // Mark the threshold T with a vertical line on the histogram
    line(image1, Point(T, 235), Point(T, 0), Scalar(0, 0, 255), 2, 8);
    imshow("Histogram", image1);
    return T;
}

//*********** Otsu: adaptive threshold maximizing the between-class variance ***********
Mat Otsusegmentation(Mat &img)
{
    cvtColor(img, img, COLOR_BGR2GRAY);  // imread loads color images in BGR order
    Mat imageOutput;
    Mat imageOtsu;
    int thresholdValue = OtsuAlgThreshold(img);
    cout << "Otsu threshold: " << thresholdValue << endl;
    threshold(img, imageOutput, thresholdValue, 255, THRESH_BINARY);
    threshold(img, imageOtsu, 0, 255, THRESH_OTSU);  // OpenCV's built-in Otsu, for comparison
    imshow("Otsu segmentation [result]", imageOutput);
    imshow("OpenCV Otsu [result]", imageOtsu);
    return imageOutput;
}
```

First we compute the Otsu threshold of an image, i.e. the gray level that maximizes the between-class variance:

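[Figures: histogram with the computed threshold]

Then we threshold the image with it:

[Figures: Otsu segmentation results]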

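Another kind of image segmentation is interactive segmentation; here we simply call the library function:

```cpp
CV_EXPORTS_W void grabCut(InputArray img, InputOutputArray mask, Rect rect,
                          InputOutputArray bgdModel, InputOutputArray fgdModel,
                          int iterCount, int mode = GC_EVAL);
```

• img: the source image to segment; it must be an 8-bit, 3-channel (CV_8UC3) image and is not modified.

• mask: mask image of the same size as the source. Possible values: GC_BGD (= 0), background; GC_FGD (= 1), foreground; GC_PR_BGD (= 2), probable background; GC_PR_FGD (= 3), probable foreground.

• rect: restricts the region to segment; only the part of the image inside this rectangle is processed.

• bgdModel: background model; if it is empty, the function creates it internally.

• fgdModel: foreground model; if it is empty, the function creates it internally.

• iterCount: number of iterations; must be greater than 0.

• mode: tells grabCut what to do. Options: GC_INIT_WITH_RECT (= 0), initialize GrabCut with the rectangle; GC_INIT_WITH_MASK (= 1), initialize GrabCut with the mask image; GC_EVAL (= 2), run the segmentation.

The code is as follows: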

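```cpp
/* GrabCut */
void grathcutfunctiong(Mat &img)
{
    Mat bgModel, fgModel, mask;
    Rect rect;
    rect.x = 50;
    rect.y = 45;
    rect.width = img.cols - (rect.x << 1);   // 50-pixel margin on the left and right
    rect.height = img.rows - (rect.y << 1);  // 45-pixel margin at the top and bottom
    // Run 3 iterations (adjustable)
    grabCut(img, mask, rect, bgModel, fgModel, 3, GC_INIT_WITH_RECT);
    // Keep the pixels labeled "probable foreground"
    compare(mask, GC_PR_FGD, mask, CMP_EQ);
    Mat foreground(img.size(), CV_8UC3, Scalar(255, 255, 255));
    img.copyTo(foreground, mask);
    // Draw the rectangle only after segmenting, so its red pixels do not influence GrabCut
    rectangle(img, rect, Scalar(0, 0, 255), 3, 8, 0);
    imshow("foreground", foreground);
}
```

The results are shown below: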

[Figures: GrabCut segmentation results]
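The code above keeps only pixels labeled `GC_PR_FGD`. That is enough after `GC_INIT_WITH_RECT`, which never produces definite foreground, but pixels a user marks as definite foreground (`GC_FGD`, e.g. via `GC_INIT_WITH_MASK`) would be dropped. Both foreground labels are odd (1 and 3), so testing the lowest bit selects them; OpenCV's own grabcut sample uses `mask & 1` for the same purpose. The sketch below shows the rule on plain label values; the `Label` enum is a local stand-in using the values listed above, not OpenCV's type, and `toBinaryMask` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Local stand-ins for GC_BGD = 0, GC_FGD = 1, GC_PR_BGD = 2, GC_PR_FGD = 3
enum Label : unsigned char { kBgd = 0, kFgd = 1, kPrBgd = 2, kPrFgd = 3 };

// Foreground = definite or probable foreground; both labels are odd, so (label & 1) selects them
std::vector<unsigned char> toBinaryMask(const std::vector<unsigned char> &labels)
{
    std::vector<unsigned char> out(labels.size());
    for (std::size_t i = 0; i < labels.size(); i++)
        out[i] = (labels[i] & 1) ? 255 : 0;
    return out;
}
```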

Here is also code for the watershed algorithm, for anyone who wants to try it:

```cpp
Mat g_maskImage, g_scrImage;
Point prevPt(-1, -1);
static void on_Mouse(int event, int x, int y, int flags, void*);

/* Watershed: draw seed strokes with the left mouse button, then press 1 or space */
void Watershedfunction(Mat &srcImage)
{
    // The mouse callback draws on the global g_scrImage, so copy the input there
    g_scrImage = srcImage.clone();
    /* Initialize the mask and the gray image */
    Mat scrImage, grayImage;
    g_scrImage.copyTo(scrImage);  // untouched copy, used for restoring and for watershed()
    cvtColor(g_scrImage, g_maskImage, COLOR_BGR2GRAY);
    cvtColor(g_maskImage, grayImage, COLOR_GRAY2BGR);
    g_maskImage = Scalar::all(0);

    /* The window must exist before a mouse callback can be attached to it */
    imshow(WINDOW_NAME, g_scrImage);
    setMouseCallback(WINDOW_NAME, on_Mouse, 0);

    /* Poll the keyboard */
    while (1)
    {
        int c = waitKey(0);
        if ((char)c == 27)  // ESC: quit
        {
            break;
        }
        if ((char)c == '2')  // '2': restore the original image
        {
            g_maskImage = Scalar::all(0);
            scrImage.copyTo(g_scrImage);
            imshow(WINDOW_NAME, g_scrImage);
        }
        if ((char)c == '1' || (char)c == ' ')  // '1' or space: run the watershed
        {
            int i, j, compCount = 0;
            vector<vector<Point>> contours;
            vector<Vec4i> hierarchy;
            /* Find the contours of the seed strokes */
            findContours(g_maskImage, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);
            if (contours.empty())
                continue;
            /* Marker image: label k + 1 for the k-th top-level contour */
            Mat maskImage(g_maskImage.size(), CV_32S);
            maskImage = Scalar::all(0);
            for (int index = 0; index >= 0; index = hierarchy[index][0], compCount++)
                drawContours(maskImage, contours, index, Scalar::all(compCount + 1), -1, 8, hierarchy, INT_MAX);
            if (compCount == 0)
                continue;
            /* A random color for each region */
            vector<Vec3b> colorTab;
            for (i = 0; i < compCount; i++)
            {
                int b = theRNG().uniform(0, 255);
                int g = theRNG().uniform(0, 255);
                int r = theRNG().uniform(0, 255);
                colorTab.push_back(Vec3b((uchar)b, (uchar)g, (uchar)r));
            }
            /* Time the watershed and print it */
            double dTime = (double)getTickCount();
            watershed(scrImage, maskImage);
            dTime = (double)getTickCount() - dTime;
            printf("\tProcessing time = %gms\n", dTime * 1000. / getTickFrequency());
            /* Paint the labels: -1 = region boundary (white), out of range = black */
            Mat watershedImage(maskImage.size(), CV_8UC3);
            for (i = 0; i < maskImage.rows; i++)
                for (j = 0; j < maskImage.cols; j++)
                {
                    int index = maskImage.at<int>(i, j);
                    if (index == -1)
                        watershedImage.at<Vec3b>(i, j) = Vec3b(255, 255, 255);
                    else if (index <= 0 || index > compCount)
                        watershedImage.at<Vec3b>(i, j) = Vec3b(0, 0, 0);
                    else
                        watershedImage.at<Vec3b>(i, j) = colorTab[index - 1];
                }
            /* Blend with the gray image */
            watershedImage = watershedImage * 0.5 + grayImage * 0.5;
            imshow("watershed transform", watershedImage);
        }
    }
}

static void on_Mouse(int event, int x, int y, int flags, void *)
{
    /* Ignore positions outside the image */
    if (x < 0 || x >= g_scrImage.cols || y < 0 || y >= g_scrImage.rows)
        return;
    /* Left-button state */
    if (event == EVENT_LBUTTONUP || !(flags & EVENT_FLAG_LBUTTON))
        prevPt = Point(-1, -1);
    else if (event == EVENT_LBUTTONDOWN)
        prevPt = Point(x, y);
    /* Dragging with the left button held: draw a white stroke */
    else if (event == EVENT_MOUSEMOVE && (flags & EVENT_FLAG_LBUTTON))
    {
        Point pt(x, y);
        if (prevPt.x < 0)
            prevPt = pt;
        line(g_maskImage, prevPt, pt, Scalar::all(255), 5, 8, 0);
        line(g_scrImage, prevPt, pt, Scalar::all(255), 5, 8, 0);
        prevPt = pt;
        imshow(WINDOW_NAME, g_scrImage);
    }
}
```

III. Image Compression and Decompression

(1) Compressing an image with Huffman coding

The flowchart is as follows:

[Figure: Huffman coding flowchart]
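The flowchart boils down to repeatedly merging the two least frequent nodes. A compact way to compute the resulting code lengths, using `std::priority_queue` instead of the linear `MinNode` scan in the program, might look like this; `huffmanCodeLengths` is a hypothetical name. The total encoded size, Σ count × length, is the Huffman total code length the program prints.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Code length of each symbol for the given counts (symbols with count 0 get length 0)
std::vector<int> huffmanCodeLengths(const std::vector<long long> &counts)
{
    using Item = std::pair<long long, int>;  // (weight, node index)
    std::priority_queue<Item, std::vector<Item>, std::greater<Item>> pq;  // min-heap
    std::vector<int> parent(counts.size(), -1);  // parent[node], -1 for the root
    for (int s = 0; s < (int)counts.size(); s++)
        if (counts[s] > 0) pq.push({counts[s], s});

    while (pq.size() > 1)  // merge the two lightest nodes into a new internal node
    {
        Item a = pq.top(); pq.pop();
        Item b = pq.top(); pq.pop();
        int id = (int)parent.size();
        parent.push_back(-1);
        parent[a.second] = parent[b.second] = id;
        pq.push({a.first + b.first, id});
    }

    std::vector<int> len(counts.size(), 0);
    for (size_t s = 0; s < counts.size(); s++)
        for (int p = parent[s]; p != -1; p = parent[p])
            len[s]++;  // depth of the leaf = code length
    return len;
}
```

For counts {45, 13, 12, 16, 9, 5} the lengths are {1, 3, 3, 3, 4, 4}, i.e. 224 bits instead of 8 × 100 = 800 bits with fixed 8-bit codes.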

The code is as follows:

```cpp
/* Huffman coding */
// Globals holding the bitmap data, width, height, color table and bits per pixel of the
// image that was read; they are shared by the read, save, encode and decode routines.
// BITMAPFILEHEADER, BITMAPINFOHEADER and RGBQUAD come from <Windows.h>.
unsigned char *pBmpBuf;  // pixel data
int bmpWidth;            // image width
int bmpHeight;           // image height
int imgSpace;            // space required by the image
RGBQUAD *pColorTable;    // color table
int biBitCount;          // bits per pixel
char str[100];           // file name
int Num[300];            // occurrence count of each gray level
float Feq[300];          // frequency of each gray level
unsigned char *lpBuf;    // pointer to the current pixel
unsigned char *m_pDib;   // DIB of the opened file
int NodeNum;             // total number of Huffman tree nodes
int NodeStart;           // root of the Huffman tree
struct Node {            // Huffman tree node
    int color;           // gray level of a leaf (-1 for internal nodes)
    int lson, rson;      // left/right child (-1 if none)
    int num;             // weight (occurrence count) of the node
    int mark;            // 1 if the node has already been merged, 0 otherwise
} node[600];
char CodeStr[300][300];  // code word of each gray level
int CodeLen[300];        // code length of each gray level
bool ImgInf[8000000];    // encoded bit stream
int InfLen;              // length of the bit stream in bits

/***********************************************************************
 * readBmp(): reads the bitmap data, width, height, color table and bits
 * per pixel of the given file into the globals above.
 * bmpName: file name and path. Returns 0 on failure, 1 on success.
 ***********************************************************************/
bool readBmp(char *bmpName)
{
    FILE *fp = fopen(bmpName, "rb");  // binary read mode
    if (fp == 0)
    {
        printf("File not found!\n");
        return 0;
    }
    fseek(fp, sizeof(BITMAPFILEHEADER), SEEK_SET);  // skip the file header
    BITMAPINFOHEADER head;                          // read the info header
    fread(&head, sizeof(BITMAPINFOHEADER), 1, fp);
    bmpWidth = head.biWidth;
    bmpHeight = head.biHeight;
    biBitCount = head.biBitCount;
    int lineByte = (bmpWidth * biBitCount / 8 + 3) / 4 * 4;  // bytes per row, a multiple of 4
    if (biBitCount == 8)  // 8-bit gray images have a 256-entry color table
    {
        pColorTable = new RGBQUAD[256];
        fread(pColorTable, sizeof(RGBQUAD), 256, fp);
    }
    pBmpBuf = new unsigned char[lineByte * bmpHeight];  // pixel data
    fread(pBmpBuf, 1, lineByte * bmpHeight, fp);
    fclose(fp);
    return 1;
}

/*********************** Saving ***********************/
// Packs the 8 bits ImgInf[pos..pos+7] (most significant bit first) into one byte
int Change2to10(int pos)
{
    int i, j, two = 1;
    j = 0;
    for (i = pos + 7; i >= pos; i--)
    {
        j += two * ImgInf[i];
        two *= 2;
    }
    return j;
}

// Saves the Huffman tree as text: root, node count, bit-stream length, then one line per node
int saveInfo(char *writePath, int lineByte)
{
    FILE *fout = fopen(writePath, "w");
    if (fout == 0)
        return -1;
    fprintf(fout, "%d %d %d\n", NodeStart, NodeNum, InfLen);
    for (int i = 0; i < NodeNum; i++)
    {
        fprintf(fout, "%d %d %d\n", node[i].color, node[i].lson, node[i].rson);
    }
    fclose(fout);
    return 0;
}

// Writes a BMP file; the pixel-data part is (InfLen + 7) / 8 bytes, so a final partial byte is kept
bool saveBmp(char *bmpName, unsigned char *imgBuf, int width, int height, int biBitCount, RGBQUAD *pColorTable)
{
    if (!imgBuf)  // no pixel data
        return 0;
    // Color table size in bytes: 1024 for 8-bit gray images, 0 for true-color images
    int colorTablesize = 0;
    if (biBitCount == 8)
        colorTablesize = 1024;
    int lineByte = (width * biBitCount / 8 + 3) / 4 * 4;  // bytes per row, a multiple of 4
    FILE *fp = fopen(bmpName, "wb");
    if (fp == 0)
        return 0;
    BITMAPFILEHEADER fileHead;
    fileHead.bfType = 0x4D42;  // "BM"
    // bfSize: sum of the four parts of the file
    fileHead.bfSize = sizeof(BITMAPFILEHEADER) + sizeof(BITMAPINFOHEADER) + colorTablesize + lineByte * height;
    fileHead.bfReserved1 = 0;
    fileHead.bfReserved2 = 0;
    fileHead.bfOffBits = 54 + colorTablesize;  // size of the first three parts
    fwrite(&fileHead, sizeof(BITMAPFILEHEADER), 1, fp);
    BITMAPINFOHEADER head;
    head.biBitCount = biBitCount;
    head.biClrImportant = 0;
    head.biClrUsed = 0;
    head.biCompression = 0;
    head.biHeight = height;
    head.biPlanes = 1;
    head.biSize = 40;
    head.biSizeImage = lineByte * height;
    head.biWidth = width;
    head.biXPelsPerMeter = 0;
    head.biYPelsPerMeter = 0;
    fwrite(&head, sizeof(BITMAPINFOHEADER), 1, fp);
    if (biBitCount == 8)  // color table of a gray image
        fwrite(pColorTable, sizeof(RGBQUAD), 256, fp);
    fwrite(imgBuf, (InfLen + 7) / 8, 1, fp);  // pixel data (for the encoded file: the packed bits)
    fclose(fp);
    return 1;
}

/*********************** Huffman decoding ***********************/
// Reads the Huffman tree (Name.bpt) and the encoded bitmap (Name.bhd)
bool readHuffman(char *Name)
{
    char NameStr[100];
    strcpy(NameStr, Name);
    strcat(NameStr, ".bpt");
    FILE *fin = fopen(NameStr, "r");
    if (fin == 0)
    {
        printf("File not found!\n");
        return 0;
    }
    fscanf(fin, "%d %d %d", &NodeStart, &NodeNum, &InfLen);
    for (int i = 0; i < NodeNum; i++)
    {
        fscanf(fin, "%d %d %d", &node[i].color, &node[i].lson, &node[i].rson);
    }
    fclose(fin);
    strcpy(NameStr, Name);
    strcat(NameStr, ".bhd");
    FILE *fp = fopen(NameStr, "rb");
    if (fp == 0)
    {
        printf("File not found!\n");
        return 0;
    }
    fseek(fp, sizeof(BITMAPFILEHEADER), SEEK_SET);
    BITMAPINFOHEADER head;
    fread(&head, sizeof(BITMAPINFOHEADER), 1, fp);
    bmpWidth = head.biWidth;
    bmpHeight = head.biHeight;
    biBitCount = head.biBitCount;
    int lineByte = (bmpWidth * biBitCount / 8 + 3) / 4 * 4;
    if (biBitCount == 8)
    {
        pColorTable = new RGBQUAD[256];
        fread(pColorTable, sizeof(RGBQUAD), 256, fp);
    }
    // Large enough for the decoded image; the encoded stream is never longer
    pBmpBuf = new unsigned char[lineByte * bmpHeight];
    fread(pBmpBuf, 1, (InfLen + 7) / 8, fp);
    fclose(fp);
    return 1;
}

void HuffmanDecode()
{
    int i, j;
    int lineByte = (bmpWidth * biBitCount / 8 + 3) / 4 * 4;
    // Unpack every byte into 8 bits, most significant bit first, leading zero bits included
    for (i = 0; i < (InfLen + 7) / 8; i++)
    {
        int tmp = *(pBmpBuf + i);
        for (int b = 0; b < 8; b++)
            ImgInf[i * 8 + 7 - b] = (tmp >> b) & 1;
    }
    // Walk the tree: bit 1 = left child, bit 0 = right child; emit the gray level at each leaf
    int p = NodeStart;  // current node
    j = 0;              // index of the next decoded pixel
    i = 0;              // index of the next bit
    while (true)
    {
        if (node[p].color >= 0)
        {
            // Store the pixel at its row/column position, respecting the 4-byte row padding
            *(pBmpBuf + (j / bmpWidth) * lineByte + j % bmpWidth) = node[p].color;
            j++;
            p = NodeStart;
        }
        if (i >= InfLen)
            break;
        if (ImgInf[i] == 1)
            p = node[p].lson;
        else
            p = node[p].rson;
        i++;
    }
}

/*********************** Huffman encoding ***********************/
void HuffmanCodeInit()
{
    int i;
    for (i = 0; i < 256; i++)  // clear the gray-level counts
        Num[i] = 0;
    for (i = 0; i < 600; i++)  // reset the Huffman tree
    {
        node[i].color = -1;
        node[i].lson = node[i].rson = -1;
        node[i].num = -1;
        node[i].mark = 0;
    }
    NodeNum = 0;
}

// Depth-first traversal that builds the code words: left child appends '1', right child '0'
char CodeTmp[300];
void dfs(int pos, int len)
{
    if (node[pos].lson == -1 && node[pos].rson == -1)  // leaf: record its code
    {
        if (node[pos].color != -1)
        {
            CodeLen[node[pos].color] = len;
            CodeTmp[len] = '\0';
            strcpy(CodeStr[node[pos].color], CodeTmp);
        }
        return;
    }
    if (node[pos].lson != -1)
    {
        CodeTmp[len] = '1';
        dfs(node[pos].lson, len + 1);
    }
    if (node[pos].rson != -1)
    {
        CodeTmp[len] = '0';
        dfs(node[pos].rson, len + 1);
    }
}

// Returns the unused node with the smallest weight and marks it used (-1 if none is left);
// the last node returned is the root, which ends up in NodeStart
int MinNode()
{
    int i, j = -1;
    for (i = 0; i < NodeNum; i++)
        if (!node[i].mark)
            if (j == -1 || node[i].num < node[j].num)
                j = i;
    if (j != -1)
    {
        NodeStart = j;
        node[j].mark = 1;
    }
    return j;
}

// Main encoding routine
void HuffmanCode()
{
    int i, j, k, a, b;
    for (i = 0; i < 256; i++)  // one leaf per gray level that occurs
    {
        Feq[i] = (float)Num[i] / (float)(bmpHeight * bmpWidth);  // gray-level frequency
        if (Num[i] > 0)
        {
            node[NodeNum].color = i;
            node[NodeNum].num = Num[i];
            node[NodeNum].lson = node[NodeNum].rson = -1;  // leaves have no children
            NodeNum++;
        }
    }
    while (1)  // merge the two lightest nodes into a new node until one root is left
    {
        a = MinNode();
        if (a == -1) break;
        b = MinNode();
        if (b == -1) break;
        node[NodeNum].color = -1;
        node[NodeNum].num = node[a].num + node[b].num;
        node[NodeNum].lson = a;
        node[NodeNum].rson = b;
        NodeNum++;
    }
    dfs(NodeStart, 0);  // build the code words from the finished tree
    // Print the code table
    int sum = 0;
    printf("Huffman code table:\n");
    for (i = 0; i < 256; i++)
        if (Num[i] > 0)
        {
            sum += CodeLen[i] * Num[i];
            printf("gray: %3d  freq: %f  length: %2d  code: %s\n", i, Feq[i], CodeLen[i], CodeStr[i]);
        }
    printf("Original total length: %d bits\n", bmpWidth * bmpHeight * 8);
    printf("Huffman total length: %d bits\n", sum);
    printf("Compression ratio: %.3f : 1\n", (float)(bmpWidth * bmpHeight * 8) / (float)sum);
    // Build the bit stream
    InfLen = 0;
    int lineByte = (bmpWidth * biBitCount / 8 + 3) / 4 * 4;
    for (i = 0; i < bmpHeight; i++)
        for (j = 0; j < bmpWidth; j++)
        {
            lpBuf = (unsigned char *)pBmpBuf + lineByte * i + j;
            for (k = 0; k < CodeLen[*(lpBuf)]; k++)
            {
                ImgInf[InfLen++] = (int)(CodeStr[*(lpBuf)][k] - '0');
            }
        }
    // Zero the padding bits of the last byte, then pack 8 bits per byte into pBmpBuf
    for (i = InfLen; i % 8 != 0; i++)
        ImgInf[i] = 0;
    j = 0;
    for (i = 0; i < InfLen; i += 8)
    {
        *(pBmpBuf + j) = Change2to10(i);
        j++;
    }
}

/*********************** Driver ***********************/
void Huffmanfunction()
{
    int ord;  // command
    char c;
    int i, j;
    clock_t start, finish;
    int total_time;
    while (1)
    {
        printf("This program provides:\n\n\t1. Huffman encoding of a 256-color grayscale BMP image\n\t2. Decoding of a Huffman-encoded BMP file\n\t3. Exit\n\nSelect a command: ");
        scanf("%d%c", &ord, &c);
        if (ord == 1)
        {
            printf("\n--- Huffman encoding of a 256-color grayscale BMP image ---\n");
            printf("\nImage to encode (name without .bmp): ");
            scanf("%99s", str);
            char readPath[100];
            strcpy(readPath, str);
            strcat(readPath, ".bmp");
            if (readBmp(readPath))
            {
                int lineByte = (bmpWidth * biBitCount / 8 + 3) / 4 * 4;
                if (biBitCount == 8)
                {
                    HuffmanCodeInit();
                    // Count how often each gray level occurs
                    for (i = 0; i < bmpHeight; i++)
                        for (j = 0; j < bmpWidth; j++)
                        {
                            lpBuf = (unsigned char *)pBmpBuf + lineByte * i + j;
                            Num[*(lpBuf)] += 1;
                        }
                    start = clock();
                    HuffmanCode();
                    finish = clock();
                    total_time = (int)((finish - start) * 1000 / CLOCKS_PER_SEC);
                    printf("Encoding time: %d ms", total_time);
                    char writePath[100];  // encoded bitmap
                    strcpy(writePath, str);
                    strcat(writePath, "_Huffman.bhd");
                    saveBmp(writePath, pBmpBuf, bmpWidth, bmpHeight, biBitCount, pColorTable);
                    strcpy(writePath, str);  // Huffman tree
                    strcat(writePath, "_Huffman.bpt");
                    saveInfo(writePath, lineByte);
                    printf("\nEncoding finished! The result is stored in the %s_Huffman files\n\n", str);
                }
                else
                {
                    printf("Only 256-color BMP images are supported!\n");
                }
                // Free the buffers allocated by readBmp
                delete[] pBmpBuf;
                if (biBitCount == 8)
                    delete[] pColorTable;
            }
            printf("\n-----------------------------------------------\n\n\n");
        }
        else if (ord == 2)
        {
            printf("\n--- Decoding a Huffman-encoded BMP file ---\n");
            printf("\nFile to decode (e.g. name_Huffman): ");
            scanf("%99s", str);
            HuffmanCodeInit();
            if (readHuffman(str))
            {
                HuffmanDecode();
                char writePath[100];  // decoded bitmap
                strcpy(writePath, str);
                strcat(writePath, "_Decode.bmp");
                int lineByte = (bmpWidth * biBitCount / 8 + 3) / 4 * 4;
                InfLen = lineByte * bmpHeight * 8;  // so that saveBmp writes the whole padded image
                saveBmp(writePath, pBmpBuf, bmpWidth, bmpHeight, biBitCount, pColorTable);
                system(writePath);  // Windows: open the decoded image in the default viewer
                printf("\nDecoding finished! Saved as %s_Decode.bmp\n\n", str);
                delete[] pBmpBuf;
                if (biBitCount == 8)
                    delete[] pColorTable;
            }
            printf("\n-----------------------------------------------\n\n\n");
        }
        else if (ord == 3)
            break;
    }
}
```

The results are shown below:

[Figures: Huffman encoding and decoding results]
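The encoder stores the variable-length codes as a bit stream packed eight bits per byte, most significant bit first (as `Change2to10` does), and the decoder has to unpack every byte including its leading zero bits. The last byte is usually only partly used, so the bit count must be stored separately; the program writes `InfLen` to the `.bpt` file for that. The round trip is sketched below; `packBits` and `unpackBits` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Packs bits MSB-first; the unused low bits of the last byte stay zero
std::vector<uint8_t> packBits(const std::vector<bool> &bits)
{
    std::vector<uint8_t> bytes((bits.size() + 7) / 8, 0);
    for (std::size_t i = 0; i < bits.size(); i++)
        if (bits[i]) bytes[i / 8] |= uint8_t(0x80 >> (i % 8));
    return bytes;
}

// Unpacks exactly nbits bits, leading zero bits of each byte included
std::vector<bool> unpackBits(const std::vector<uint8_t> &bytes, std::size_t nbits)
{
    std::vector<bool> bits(nbits);
    for (std::size_t i = 0; i < nbits; i++)
        bits[i] = (bytes[i / 8] >> (7 - i % 8)) & 1;
    return bits;
}
```

For example, the three bits 1, 0, 1 pack into the single byte 0xA0; without the stored bit count, the five padding zeros could be misread as extra code words.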

(2) Image compression with the DCT

The flowchart is as follows:

[Figure: DCT compression flowchart]
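The block transform is the orthonormal 8×8 DCT-II: with C(i, j) = a(i)·cos((2j + 1)iπ/16), a(0) = √(1/8) and a(i) = √(2/8) for i > 0, a block X is transformed as Y = C·X·Cᵀ and restored as X = Cᵀ·Y·C, because C is orthogonal. The standalone sketch below (plain arrays; `dctMatrix`, `mul3`, `forwardDCT` and `inverseDCT` are hypothetical names) lets you check both properties; for a constant block of value v, only the DC coefficient Y(0,0) = 8v is non-zero.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat8 = std::array<std::array<double, 8>, 8>;

// C(i, j) = a(i) * cos((2j + 1) * i * pi / 16)
Mat8 dctMatrix()
{
    const double pi = std::acos(-1.0);
    Mat8 C{};
    for (int i = 0; i < 8; i++)
        for (int j = 0; j < 8; j++)
        {
            double a = (i == 0) ? std::sqrt(1.0 / 8) : std::sqrt(2.0 / 8);
            C[i][j] = a * std::cos((2 * j + 1) * i * pi / 16);
        }
    return C;
}

Mat8 transpose(const Mat8 &A)
{
    Mat8 T{};
    for (int i = 0; i < 8; i++)
        for (int j = 0; j < 8; j++)
            T[i][j] = A[j][i];
    return T;
}

// Returns A * B * D for 8x8 matrices
Mat8 mul3(const Mat8 &A, const Mat8 &B, const Mat8 &D)
{
    Mat8 T{}, R{};
    for (int i = 0; i < 8; i++)
        for (int j = 0; j < 8; j++)
            for (int k = 0; k < 8; k++)
                T[i][j] += A[i][k] * B[k][j];
    for (int i = 0; i < 8; i++)
        for (int j = 0; j < 8; j++)
            for (int k = 0; k < 8; k++)
                R[i][j] += T[i][k] * D[k][j];
    return R;
}

Mat8 forwardDCT(const Mat8 &X) { Mat8 C = dctMatrix(); return mul3(C, X, transpose(C)); }  // Y = C X C^T
Mat8 inverseDCT(const Mat8 &Y) { Mat8 C = dctMatrix(); return mul3(transpose(C), Y, C); }  // X = C^T Y C
```

Compression comes from the fact that, for natural images, most of the energy of Y lands in the low-frequency (top-left) coefficients, so many of the others can be zeroed with little visible loss.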

The code is as follows:

```cpp
/* DCT compression */
// Same as MATLAB's blkproc(I, [8 8], 'P1*x*P2', g, g'): 8x8 block DCT
void blkproc_DCT(Mat);
// Same as MATLAB's blkproc(I, [8 8], 'P1*x*P2', g', g): 8x8 block inverse DCT, restores the image
Mat blkproc_IDCT(Mat);
// Zonal coding, same as MATLAB's blkproc(I1, [8 8], 'P1.*x', a)
void regionalCoding(Mat);
// Threshold coding, same as MATLAB's blkproc(I1, [8 8], 'P1.*x', a)
void thresholdCoding(Mat);
// Median of a matrix, used by threshold coding
double get_medianNum(Mat &);

// The functions above take Mat by value, but a copied Mat header shares the pixel data,
// so their changes are visible to the caller.
void ImageCompression_DCT(Mat &ucharImg)
{
    // Expects a grayscale image, e.g. imread("3.jpg", 0)
    Mat doubleImg, OrignalImg;
    OrignalImg = ucharImg.clone();
    ucharImg.convertTo(doubleImg, CV_64F);  // double precision for the block DCT
    blkproc_DCT(doubleImg);                 // 8x8 block DCT of the original image
    // Zonal coding and threshold coding on separate copies
    Mat doubleImgRegion, doubleImgThreshold;
    doubleImgRegion = doubleImg.clone();
    doubleImgThreshold = doubleImg.clone();
    regionalCoding(doubleImgRegion);        // zonal coding of the DCT coefficients
    thresholdCoding(doubleImgThreshold);    // threshold coding of the DCT coefficients
    // Inverse DCT
    Mat ucharImgRegion, ucharImgThreshold, differenceimage;
    ucharImgRegion = blkproc_IDCT(doubleImgRegion);
    imshow("RegionalCoding", ucharImgRegion);
    ucharImgThreshold = blkproc_IDCT(doubleImgThreshold);
    imshow("ThresholdCoding", ucharImgThreshold);
    absdiff(ucharImgRegion, OrignalImg, differenceimage);
    imshow("Difference of the two images", differenceimage);
}

// 8x8 DCT matrix: C(i, j) = a(i) * cos((2j + 1) * i * pi / 16), a(0) = sqrt(1/8), a(i > 0) = sqrt(2/8)
static Mat makeDCTMat()
{
    Mat DCTMat(8, 8, CV_64FC1);
    for (int i = 0; i < DCTMat.rows; i++)
    {
        for (int j = 0; j < DCTMat.cols; j++)
        {
            double a = (i == 0) ? sqrt(1.0 / DCTMat.rows) : sqrt(2.0 / DCTMat.rows);
            double q = ((2 * j + 1) * i * M_PI) / (2 * DCTMat.rows);
            DCTMat.at<double>(i, j) = a * cos(q);
        }
    }
    return DCTMat;
}

void blkproc_DCT(Mat doubleImgTmp)
{
    Mat ucharImgTmp;
    Mat DCTMat = makeDCTMat();  // 8x8 DCT matrix
    Mat DCTMatT = DCTMat.t();   // its transpose
    // Move an 8x8 ROI over the image in steps of 8, like MATLAB's blkproc.
    // If the height or width is not a multiple of 8, the leftover border is not processed.
    int rNum = doubleImgTmp.rows / 8;
    int cNum = doubleImgTmp.cols / 8;
    for (int i = 0; i < rNum; i++)
    {
        for (int j = 0; j < cNum; j++)
        {
            Mat ROIMat = doubleImgTmp(Rect(j * 8, i * 8, 8, 8));
            Mat block = DCTMat * ROIMat * DCTMatT;  // Y = C X C^T
            block.copyTo(ROIMat);                   // write the coefficients back into the image
        }
    }
    doubleImgTmp.convertTo(ucharImgTmp, CV_8U);
    imshow("DCTImg", ucharImgTmp);
}

Mat blkproc_IDCT(Mat doubleImgTmp)
{
    // Same as blkproc_DCT except that C and C^T swap places: X = C^T Y C
    Mat ucharImgTmp;
    Mat DCTMat = makeDCTMat();
    Mat DCTMatT = DCTMat.t();
    int rNum = doubleImgTmp.rows / 8;
    int cNum = doubleImgTmp.cols / 8;
    for (int i = 0; i < rNum; i++)
    {
        for (int j = 0; j < cNum; j++)
        {
            Mat ROIMat = doubleImgTmp(Rect(j * 8, i * 8, 8, 8));
            Mat block = DCTMatT * ROIMat * DCTMat;
            block.copyTo(ROIMat);
        }
    }
    doubleImgTmp.convertTo(ucharImgTmp, CV_8U);
    return ucharImgTmp;
}

void regionalCoding(Mat doubleImgTmp)
{
    int rNum = doubleImgTmp.rows / 8;
    int cNum = doubleImgTmp.cols / 8;
    Mat ucharImgTmp;
    for (int i = 0; i < rNum; i++)
    {
        for (int j = 0; j < cNum; j++)
        {
            Mat ROIMat = doubleImgTmp(Rect(j * 8, i * 8, 8, 8));  // current 8x8 block
            // Zonal mask: zero the last four rows of every 8x8 block
            for (int r = 4; r < ROIMat.rows; r++)
                for (int c = 0; c < ROIMat.cols; c++)
                    ROIMat.at<double>(r, c) = 0.0;
        }
    }
    doubleImgTmp.convertTo(ucharImgTmp, CV_8U);
    imshow("regionalCodingImg", ucharImgTmp);
}

void thresholdCoding(Mat doubleImgTmp)
{
    int rNum = doubleImgTmp.rows / 8;
    int cNum = doubleImgTmp.cols / 8;
    double medianNumTmp = 0;
    Mat ucharImgTmp;
    for (int i = 0; i < rNum; i++)
    {
        for (int j = 0; j < cNum; j++)
        {
            Mat ROIMat = doubleImgTmp(Rect(j * 8, i * 8, 8, 8));  // current 8x8 block
            medianNumTmp = get_medianNum(ROIMat);  // median coefficient magnitude of the block
            // Zero the coefficients whose magnitude is below the median
            for (int r = 0; r < ROIMat.rows; r++)
                for (int c = 0; c < ROIMat.cols; c++)
                    if (std::abs(ROIMat.at<double>(r, c)) < medianNumTmp)
                        ROIMat.at<double>(r, c) = 0;
        }
    }
    doubleImgTmp.convertTo(ucharImgTmp, CV_8U);
    imshow("thresholdCodingImg", ucharImgTmp);
}

double get_medianNum(Mat &imageROI)  // (lower) median of the absolute values of a matrix
{
    vector<double> vectorTemp;
    for (int i = 0; i < imageROI.rows; i++)  // flatten the ROI into a vector
        for (int j = 0; j < imageROI.cols; j++)
            vectorTemp.push_back(std::abs(imageROI.at<double>(i, j)));
    // Partial selection sort: afterwards the first half holds the smallest values in order
    for (size_t i = 0; i < vectorTemp.size() / 2; i++)
        for (size_t j = i + 1; j < vectorTemp.size(); j++)
            if (vectorTemp.at(i) > vectorTemp.at(j))
                std::swap(vectorTemp.at(i), vectorTemp.at(j));
    return vectorTemp.at(vectorTemp.size() / 2 - 1);
}
```

The results are shown below:

[Figures: DCT compression results]
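The two coding schemes differ only in which coefficients of each 8×8 block survive: zonal coding uses a fixed mask (here the first four rows, as the comment in `regionalCoding` describes), while threshold coding is meant to keep the coefficients whose magnitude reaches the block's median magnitude, so the kept set adapts to each block. The sketch below applies both rules to one block in plain C++; `Coeffs`, `zonalCode` and `thresholdCode` are hypothetical names, and the median is the lower median, as returned by `get_medianNum`.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>

using Coeffs = std::array<double, 64>;  // one 8x8 block of DCT coefficients, row-major

// Zonal coding: keep rows 0-3, zero rows 4-7
void zonalCode(Coeffs &c)
{
    for (int r = 4; r < 8; r++)
        for (int k = 0; k < 8; k++)
            c[r * 8 + k] = 0.0;
}

// Threshold coding: zero coefficients whose magnitude is below the lower median magnitude;
// returns the number of coefficients kept
int thresholdCode(Coeffs &c)
{
    std::array<double, 64> mags;
    for (int i = 0; i < 64; i++) mags[i] = std::fabs(c[i]);
    std::nth_element(mags.begin(), mags.begin() + 31, mags.end());
    double median = mags[31];  // 32nd smallest magnitude
    int kept = 0;
    for (double &v : c)
    {
        if (std::fabs(v) < median) v = 0.0;
        else kept++;
    }
    return kept;
}
```

Zonal coding always keeps the same 32 positions, which is cheap to store but ignores block content; threshold coding keeps about half the coefficients wherever they are large, at the cost of also having to record their positions.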

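(3) JPEG compression of an image

The library function used:

```cpp
// Decodes an image from a memory buffer
CV_EXPORTS_W Mat imdecode(InputArray buf, int flags);
```

The program is as follows: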

```cpp
/* JPEG image compression */
double getPSNR(Mat &src1, Mat &src2, int bb = 0);

// Expects a 3-channel color image, e.g. imread("lena.png")
void ImageCompression_JPEG(Mat &src)
{
    cout << "origin image size: " << src.dataend - src.datastart << endl;
    cout << "height: " << src.rows << endl << "width: " << src.cols << endl << "depth: " << src.channels() << endl;
    cout << "height*width*depth: " << src.rows * src.cols * src.channels() << endl << endl;

    // (1) JPEG compression
    vector<uchar> buff;  // buffer for the encoded data
    vector<int> param = vector<int>(2);
    param[0] = IMWRITE_JPEG_QUALITY;
    param[1] = 1;  // quality 0-100 (default 95)
    imencode(".jpg", src, buff, param);
    cout << "coded file size(jpg): " << buff.size() << endl;  // buff is resized automatically
    Mat jpegimage = imdecode(Mat(buff), IMREAD_COLOR);

    // (2) PNG compression
    param[0] = IMWRITE_PNG_COMPRESSION;
    param[1] = 8;  // compression level 0-9 (default 3)
    imencode(".png", src, buff, param);
    cout << "coded file size(png): " << buff.size() << endl;
    Mat pngimage = imdecode(Mat(buff), IMREAD_COLOR);

    // (3) Interactive JPEG compression: choose the quality with a trackbar
    char name[64];
    namedWindow("jpg");
    int q = 95;
    createTrackbar("quality", "jpg", &q, 100);
    int key = 0;
    while (key != 'q')  // 'q' quits, 's' saves
    {
        param[0] = IMWRITE_JPEG_QUALITY;
        param[1] = q;
        imencode(".jpg", src, buff, param);
        Mat show = imdecode(Mat(buff), IMREAD_COLOR);
        double psnr = getPSNR(src, show);                       // PSNR against the original
        double bpp = 8.0 * buff.size() / (show.size().area());  // bits per pixel
        snprintf(name, sizeof(name), "quality:%03d, %.1fdB, %.2fbpp", q, psnr, bpp);
        putText(show, name, Point(15, 50), FONT_HERSHEY_SIMPLEX, 1, Scalar(255, 255, 255), 2);
        imshow("jpg", show);
        key = waitKey(33);
        if (key == 's')
        {
            // (4) Write the decoded preview (PNG) and the JPEG itself
            snprintf(name, sizeof(name), "q%03d_%.2fbpp.png", q, bpp);
            imwrite(name, show);
            snprintf(name, sizeof(name), "q%03d_%.2fbpp.jpg", q, bpp);
            param[0] = IMWRITE_JPEG_QUALITY;
            param[1] = q;
            imwrite(name, src, param);
        }
    }
}

// PSNR of the grayscale versions of two color images, ignoring a border of bb pixels
double getPSNR(Mat &src1, Mat &src2, int bb)
{
    int i, j;
    double sse, mse, psnr;
    sse = 0.0;
    Mat s1, s2;
    cvtColor(src1, s1, COLOR_BGR2GRAY);
    cvtColor(src2, s2, COLOR_BGR2GRAY);
    int count = 0;
    for (j = bb; j < s1.rows - bb; j++)
    {
        uchar *d = s1.ptr(j);
        uchar *s = s2.ptr(j);
        for (i = bb; i < s1.cols - bb; i++)
        {
            sse += ((d[i] - s[i]) * (d[i] - s[i]));
            count++;
        }
    }
    if (sse == 0.0 || count == 0)  // identical images or empty region: this code returns 0
    {
        return 0;
    }
    else
    {
        mse = sse / (double)(count);
        psnr = 10.0 * log10((255 * 255) / mse);
        return psnr;
    }
}
```

The results are shown below:

[Figures: JPEG compression results]
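The numbers overlaid on the trackbar window follow two simple formulas: PSNR = 10·log10(255²/MSE), where MSE is the mean squared difference between the original and decoded gray images, and bpp = 8 × (encoded bytes) / (number of pixels). A plain C++ sketch of both follows; `psnr8bit` and `bitsPerPixel` are hypothetical names, and unlike `getPSNR` this version returns infinity for identical images. For instance, if every pixel differs by exactly 1, MSE = 1 and PSNR ≈ 48.13 dB.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// PSNR in dB between two 8-bit images of the same size (infinite if they are identical)
double psnr8bit(const std::vector<unsigned char> &a, const std::vector<unsigned char> &b)
{
    double sse = 0;
    for (std::size_t i = 0; i < a.size(); i++)
    {
        double d = double(a[i]) - double(b[i]);
        sse += d * d;
    }
    if (sse == 0) return std::numeric_limits<double>::infinity();
    double mse = sse / a.size();
    return 10.0 * std::log10(255.0 * 255.0 / mse);
}

// Bits per pixel of an encoded buffer
double bitsPerPixel(std::size_t encodedBytes, std::size_t pixelCount)
{
    return 8.0 * encodedBytes / pixelCount;
}
```

An uncompressed 8-bit gray image is 8 bpp, so, for example, a 512-byte encoding of a 64×64 image is 1 bpp.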

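The main function is as follows. Its includes, macros and using-directives belong at the top of the source file, because the functions above rely on them (for example `WINDOW_NAME` in the watershed code and `M_PI` in the DCT code):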

```cpp
#pragma warning(disable:4996)  // MSVC: allow fopen, scanf, strcpy, etc.
#include "opencv2/opencv.hpp"
#include "opencv2/core/core.hpp"
#include "opencv2/highgui/highgui.hpp"
#include "opencv2/imgproc/imgproc.hpp"
#include <iostream>
#include <fstream>
#include <regex>
#include <string.h>
#include <time.h>
#include <Windows.h>  // BITMAPFILEHEADER, BITMAPINFOHEADER and RGBQUAD for the Huffman code
#include <math.h>
#include <stdio.h>
#define WINDOW_NAME "(Program window 1)"
#define MAX_CHARS 256  // maximum number of characters
#define MAX_SIZE 1000000
#ifndef M_PI
#define M_PI 3.141592653
#endif
using namespace cv;
using namespace std;
const double EPS = 1e-12;
const double PI = 3.141592653;
class tmpNode
{
public:
    unsigned char uch;       // 0-255, one 8-bit character
    unsigned long freq = 0;  // occurrence frequency of the character
};

int main()
{
    //Mat img = imread("3.jpg", IMREAD_GRAYSCALE);
    //Mat img = imread("3.jpg", 1);
    //Mat img = imread("3.jpg", 0);
    //Mat img = imread("1.jpg");

    //Mat img_1 = imread("p3-01-00_Huffman_Decode.bmp");
    //Mat differenceimage;
    //absdiff(img, img_1, differenceimage);
    /*if (img.empty())
    {
        std::cout << "Failed to read the image!" << "\n";
        return -1;
    }*/
    //namedWindow("[Original image]", WINDOW_AUTOSIZE);
    //imshow("[Original image]", img);
    //namedWindow("[Decoded image after compression]", WINDOW_AUTOSIZE);
    //imshow("[Decoded image after compression]", img_1);

    /* Inspect the histogram to identify the noise, then apply the matching filter */
    //Mat dstImage = GaussianBlurfilterfunction(img);
    //Mat dstImage = Blurefilterfunction(img);
    //Mat dstImage = MedianBlurfilterfunction(img);
    //Mat dstImage = BilateralBlurfilterfunction(img);
    //Mat dstImage = Inversefiltering(img);
    //namedWindow("[Inverse-filtered image]", WINDOW_AUTOSIZE);
    //imshow("[Inverse-filtered image]", dstImage);
    //Mat img1 = img.clone();
    //Drawing_one_dimensionalhistogram(img1);
    //Otsusegmentation(img);
    //Graphcutfunction(img);
    //Watershedfunction(img);
    //ImageCompression();
    //ImageCompression_DCT(img);
    //ImageCompression_JPEG(img);
    //Huffmanfunction();
    //Mat dstImg = FrequencyDomainGaussFiltering(img);
    //Drawing_one_dimensionalhistogram(dstImg);
    //grathcutfunctiong(img);
    //namedWindow("[Original image]", WINDOW_AUTOSIZE);
    //imshow("[Original image]", img);
    //namedWindow("[Difference image]", WINDOW_AUTOSIZE);
    //imshow("[Difference image]", differenceimage);
    waitKey(0);
    //destroyAllWindows();
    return 0;
}
```

The end.