Chuhui Xue_ECCV2018_Accurate Scene Text Detection through Border Semantics Awareness and Bootstrapping

Authors and Code

Keywords

Text detection, multi-oriented, FCN, $$xywh\theta$$, multi-stage, border

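Highlights

- Uses bootstrapping for data augmentation

- Adds a border loss

Method Overview

This is a direct-regression method. Besides the text/non-text classification task and the regression of each pixel's distances to the four box sides (similar to EAST), it adds a task of learning the four text borders. The final output is not the predicted bounding box used directly; instead, the text score map and the four border maps are combined to obtain the text lines.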

Method Details

Bootstrapping-based sample augmentation

Put simply, the text polygon is repeatedly sampled in local regions to enrich the diversity of text patches.

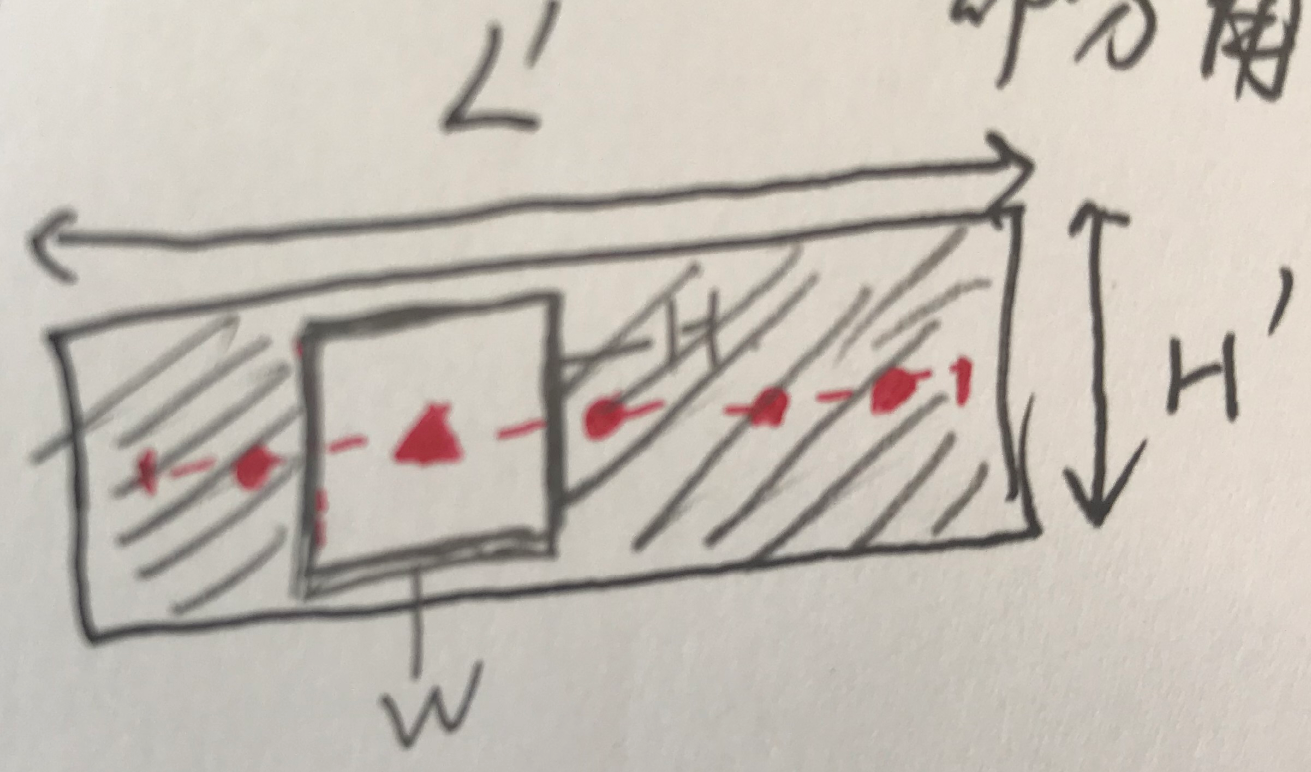

Fig. 2: Illustration of the bootstrapping based scene text sampling: Given an image with a text line as annotated by the green box, three example text line segments are extracted as highlighted by red boxes where the centers of the sampling windows are taken randomly along the center line of the text line (the shrunk part in yellow color). The rest text regions outside of the sampling windows are filled by inpainting.

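The steps are:

- Determine the center line of the text line and shrink it by 0.1L'

- Sample points uniformly at random along the center line (these fix the window centers)

- Determine the window size (H = 0.9H'; W is a random value drawn from [0.2L', 2$$d_{min}$$], where $$d_{min}$$ is the smaller of the distances from the center point to the two short edges)

- Inpaint (paint over with a single color) every part that lies inside the original text box but outside the sampling window (inside the green box but outside the red box in the figure above), which yields a new training image and a new text ground truth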
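The sampling steps above can be sketched in a few lines of NumPy. This is only an illustration under simplifying assumptions that are not from the paper: the text box is axis-aligned and given as `(x0, y0, L, H)` in integer pixels, the center line is shrunk by 0.1L' at each end, and "inpainting" is replaced by a flat fill with the image's mean color. The function names `sample_window` and `augment` are hypothetical.

```python
import numpy as np

def sample_window(box, rng):
    """Sample one window (wx0, wy0, W, Hw) inside an axis-aligned text box.

    box = (x0, y0, L, H): top-left corner, length and height of the box.
    """
    x0, y0, L, H = box
    # Window center: uniform on the center line, shrunk by 0.1 * L
    # (assumed here to mean 0.1 * L at each end).
    cx = rng.uniform(x0 + 0.1 * L, x0 + 0.9 * L)
    cy = y0 + 0.5 * H
    # d_min: distance from the center to the nearer short edge.
    d_min = min(cx - x0, x0 + L - cx)
    # Width in [0.2 * L, 2 * d_min], so the window never leaves the box.
    W = rng.uniform(0.2 * L, 2.0 * d_min)
    Hw = 0.9 * H
    return (cx - W / 2.0, cy - Hw / 2.0, W, Hw)

def augment(image, box, rng):
    """Return (new_image, window) where window = (x0, y0, x1, y1) in pixels."""
    x0, y0, L, H = box
    wx0, wy0, W, Hw = sample_window(box, rng)
    # Snap the window to the pixel grid, clamped to the original box.
    ax0 = max(x0, int(np.floor(wx0)))
    ay0 = max(y0, int(np.floor(wy0)))
    ax1 = min(x0 + L, int(np.ceil(wx0 + W)))
    ay1 = min(y0 + H, int(np.ceil(wy0 + Hw)))
    out = image.copy()
    # Stand-in for inpainting (an assumption): flat fill with the mean color.
    fill = image.mean(axis=(0, 1)).astype(image.dtype)
    out[y0:y0 + H, x0:x0 + L] = fill
    # Restore the pixels inside the sampled window.
    out[ay0:ay1, ax0:ax1] = image[ay0:ay1, ax0:ax1]
    return out, (ax0, ay0, ax1, ay1)
```

Calling `augment(image, box, np.random.default_rng(0))` several times on the same image gives several training images, each with a different segment of the text line kept and the returned window as its new ground-truth box. A real implementation would handle rotated boxes and use a proper inpainting routine.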

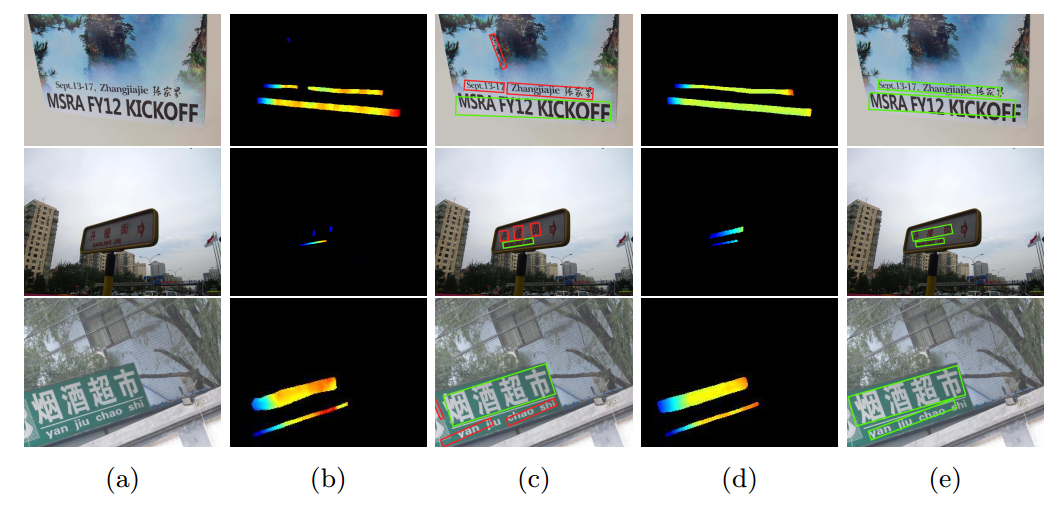

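The effect of training with and without bootstrapping is shown in the figure:

The authors argue that after augmentation the features on the feature map are more consistent, so the distance map is smoother.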

It can be seen that the inclusion of the augmented images helps to produce more consistent text feature maps as well as smoother geometrical distance maps (for regression of text boxes) which leads to more complete instead of broken scene text detections.

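My understanding is that, for a long piece of text, the appearance can differ a lot from one segment to another. In Fig. 2, for example, some parts are strongly lit and others weakly lit. Bootstrapping samples a segment of the text line, and the shorter the segment, the more consistent its features, so feature learning concentrates on more consistent regions (in effect, the learning problem is made easier).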

Fig. 3: The inclusion of augmented images improves the scene text detection: With the inclusion of the augmented images in training, more consistent text feature maps and more complete scene text detections are produced as shown in (d) and (e), as compared with those produced by the baseline model (trained using original training images only) shown in (b) and (c). The coloring in the text feature maps shows the distance information predicted by regressor (blue denotes short distances and red denotes long distance).

Adding four border-pixel classifications

Four additional border/non-border classification maps are learned.

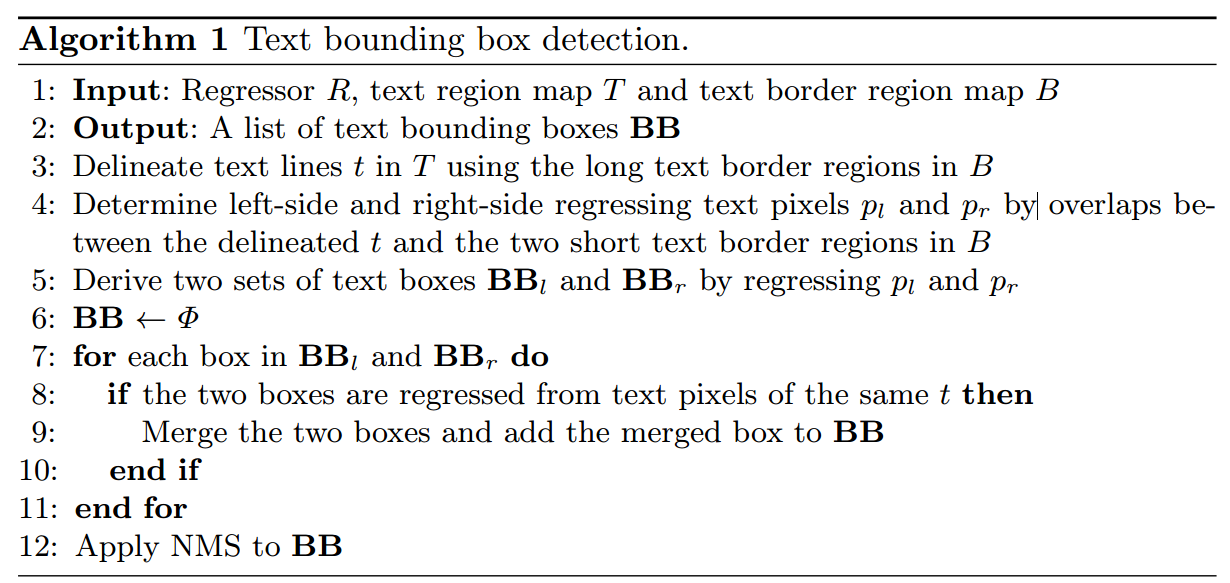

Fig. 4: Semantics-aware text border detection: Four text border segments are automatically extracted for each text annotation box including a pair of short-edge text border segments in yellow and red colors and a pair of long-edge text border segments in green and blue colors. The four types of text border segments are treated as four types of objects and used to train deep network models, and the trained model is capable of detecting the four types of text border segments as illustrated in Fig. 5c.

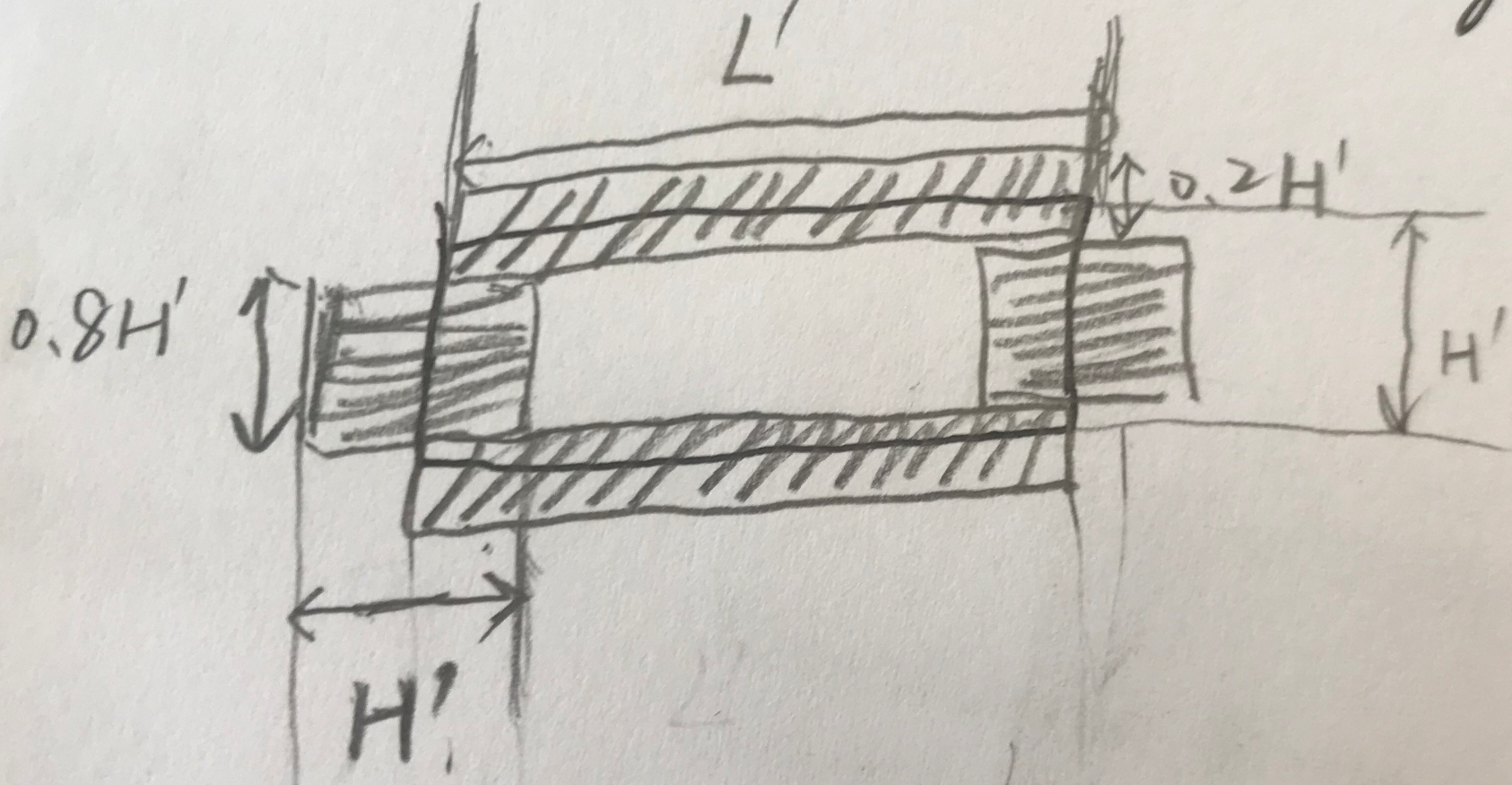

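The sizes of the four borders are as follows. The top and bottom borders take 0.2H', and the left and right borders take 0.8H'. Notably, the left and right borders are made fairly wide, with a width of H'. The main concern is that words on the same text line stick together quite often, and the left and right borders, unlike the top and bottom ones, do not have a long edge, so the extra width gives them more area. The top and bottom borders mainly address the merging of adjacent text lines. What a border has to learn is the transition from text to background (...the extracted text border segments capture the transition from text to background or vice versa...).

The authors argue that adding the short borders helps because, in long text lines, pixels near the middle of the text are far from the two short edges, which easily causes regression errors and imprecise detections; adding a loss on border pixels helps with this. The two long borders, on the other hand, are added to separate vertically adjacent text lines that lie close together.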

The reason is that text pixels around the middle of texts are far from the text box vertices for long words or text lines which can easily introduce regression errors and lead to inaccurate localization as illustrated in Fig. 5b. At the other end, the long text border segments also help for better scene text detection performance. In particular, the long text border segments can be exploited to separate text lines when neighboring text lines are close to each other.
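To make the border sizes concrete, here is a small sketch that builds the four border rectangles for an axis-aligned box. The sizes (0.2H' for the long borders, 0.8H' by H' for the short borders) come from the description above; the placement, with each border centered on its box edge, and the function name `border_segments` are assumptions made for illustration only.

```python
def border_segments(box):
    """Return four border rectangles (x0, y0, x1, y1) for an axis-aligned box.

    box = (x0, y0, L, H). Placement centered on each edge is an assumption.
    """
    x0, y0, L, H = box
    t = 0.2 * H        # thickness of the long (top/bottom) borders
    sh = 0.8 * H       # extent of the short borders along the short edge
    sw = 1.0 * H       # width of the short borders along the text direction
    pad = (H - sh) / 2.0   # vertical margin that keeps short borders centered
    return {
        "top":    (x0, y0 - t / 2, x0 + L, y0 + t / 2),
        "bottom": (x0, y0 + H - t / 2, x0 + L, y0 + H + t / 2),
        "left":   (x0 - sw / 2, y0 + pad, x0 + sw / 2, y0 + H - pad),
        "right":  (x0 + L - sw / 2, y0 + pad, x0 + L + sw / 2, y0 + H - pad),
    }
```

For a box of length 100 and height 20, each long border is a 100 x 4 strip and each short border is a 20 x 16 patch. Rasterizing these rectangles into four binary masks would give the training targets for the four border maps.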

The results with and without the border maps are compared in the figure below:

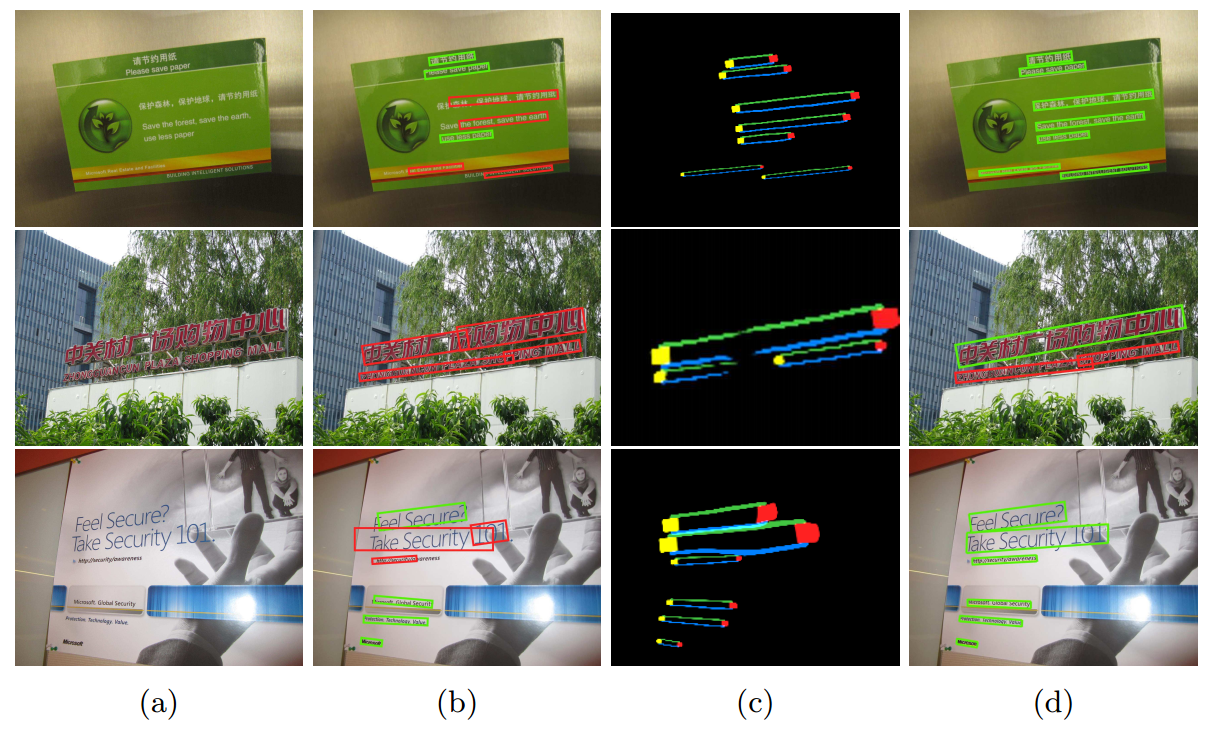

Fig. 5: The use of semantics-aware text borders improves scene text detection: With the identified text border semantics information as illustrated in (c), scene texts can be localized much more accurately as illustrated in (d) as compared with the detections without using the border semantics information as illustrated in (b). Green boxes give correct detections and red boxes give false detections.

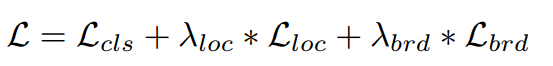

Loss Functions

- Total loss

- Classification loss: Dice coefficient loss (confidence score of each pixel being a text pixel)

- Regression loss: IoU loss (distances from each pixel to four sides of text boundaries)

- Border loss: Dice coefficient loss (confidence score of each pixel being a border pixel)

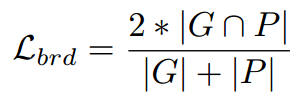
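As a rough illustration of the loss terms listed above, the NumPy sketch below implements a Dice coefficient loss and an IoU loss over per-pixel distances. The IoU term follows the common UnitBox/EAST-style formulation, which is an assumption here since the notes do not spell out the formula. The weights `w_reg` and `w_bd` in `total_loss` are hypothetical placeholders, not values from the paper.

```python
import numpy as np

def dice_loss(pred, target, eps=1e-6):
    """1 - Dice coefficient between a score map and a binary target map."""
    inter = np.sum(pred * target)
    return 1.0 - 2.0 * inter / (np.sum(pred) + np.sum(target) + eps)

def iou_loss(pred_d, gt_d, mask, eps=1e-6):
    """-log(IoU) averaged over text pixels.

    pred_d, gt_d: arrays of shape (4, H, W) holding each pixel's distance
    to the top, right, bottom and left sides of its text box.
    mask: (H, W) binary map of text pixels.
    """
    pt, pr, pb, pl = pred_d
    gt, gr, gb, gl = gt_d
    area_p = (pt + pb) * (pr + pl)
    area_g = (gt + gb) * (gr + gl)
    # Both boxes contain the pixel, so the intersection is the sum of the
    # per-side minima in each direction.
    inter = (np.minimum(pt, gt) + np.minimum(pb, gb)) * \
            (np.minimum(pr, gr) + np.minimum(pl, gl))
    union = area_p + area_g - inter
    loss = -np.log((inter + eps) / (union + eps))
    return np.sum(loss * mask) / (np.sum(mask) + eps)

def total_loss(l_cls, l_reg, l_bd, w_reg=1.0, w_bd=1.0):
    """Weighted sum of the three terms; the weights are hypothetical."""
    return l_cls + w_reg * l_reg + w_bd * l_bd
```

With four border maps, one simple choice (again an assumption) is to compute `dice_loss` on each map and pass their sum or mean as `l_bd`.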

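Post-processing: text line formation algorithm

- Binarize the five maps (1 text region map and 4 text border maps) using the mean score

- Compute the overlap between the region map and the 4 border maps

- Extract text lines (top and bottom borders)

- Extract the left and right boundaries

- Merge the bounding boxes formed by the four boundaries

- NMS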
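The first steps of this pipeline can be approximated with a short sketch. This is a deliberate simplification and not the paper's algorithm: each map is binarized with its own mean as the threshold, detected border pixels are removed from the text mask so that touching instances fall apart, and connected components are boxed. The border-delimited line extraction, box merging and NMS steps are left out, and the function names are hypothetical.

```python
from collections import deque
import numpy as np

def binarize(score_map):
    """Threshold a score map at its own mean value."""
    return score_map > score_map.mean()

def connected_boxes(mask):
    """Bounding boxes (x0, y0, x1, y1), inclusive, of 4-connected regions."""
    H, W = mask.shape
    seen = np.zeros((H, W), dtype=bool)
    boxes = []
    for y in range(H):
        for x in range(W):
            if not mask[y, x] or seen[y, x]:
                continue
            # Breadth-first flood fill from this unvisited text pixel.
            queue = deque([(y, x)])
            seen[y, x] = True
            y0 = y1 = y
            x0 = x1 = x
            while queue:
                cy, cx = queue.popleft()
                y0, y1 = min(y0, cy), max(y1, cy)
                x0, x1 = min(x0, cx), max(x1, cx)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx),
                               (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < H and 0 <= nx < W
                            and mask[ny, nx] and not seen[ny, nx]):
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            boxes.append((x0, y0, x1, y1))
    return boxes

def text_candidates(text_map, border_maps):
    """Split the binarized text region wherever a border map fires."""
    text = binarize(text_map)
    border = np.zeros_like(text)
    for b in border_maps:
        border |= binarize(b)
    return connected_boxes(text & ~border)
```

In this simplified form, a short border detected between two neighboring words cuts the text mask in two, which is the intuition behind using border maps to separate adjacent instances.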

Experimental Results

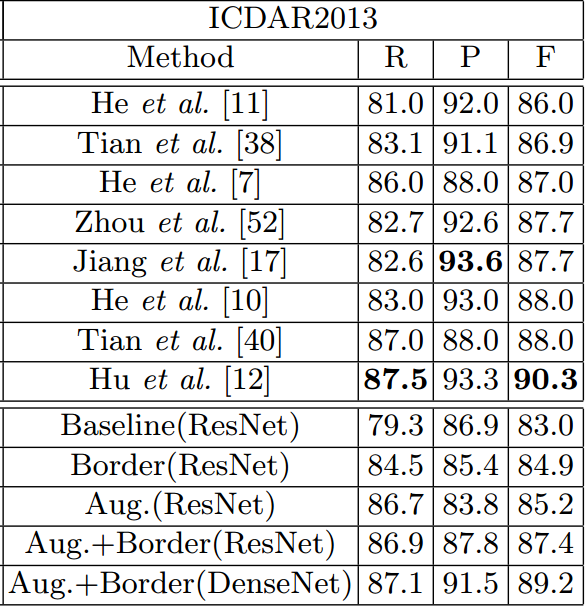

- ICDAR2013

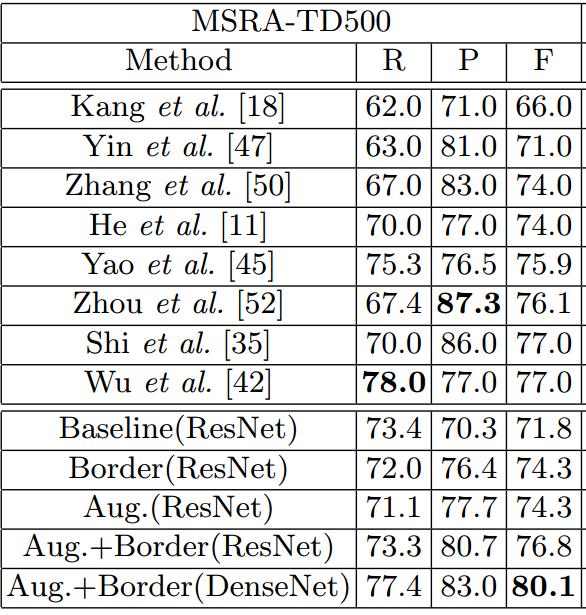

- MSRA-TD500

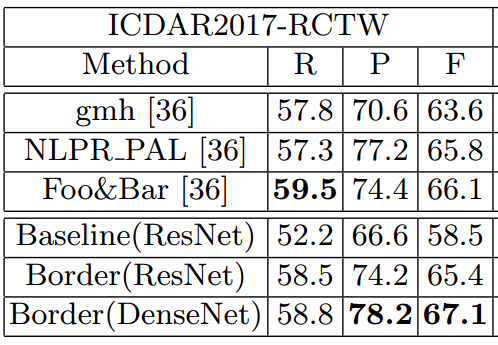

- ICDAR2017-RCTW

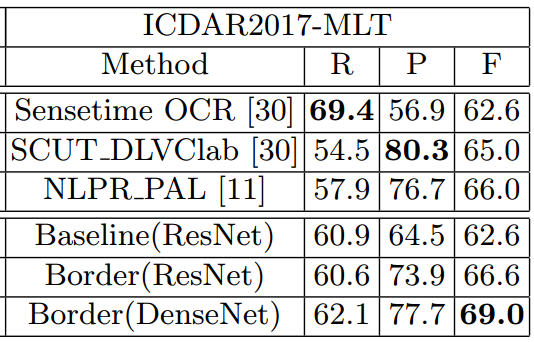

- ICDAR2017-MLT

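Summary and Takeaways

The bootstrapping-based sample augmentation in this paper is interesting. Although it has long been used in object detection, this is the first time it has been brought into OCR. The idea of adding a border loss is also quite direct, and its borders are somewhat similar to those in Yue Wu_ICCV2017_Self-Organized Text Detection With Minimal Post-Processing via Border Learning.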