CS229 6.16 Neural Networks: Linear Decoders and Their Implementation

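A sparse autoencoder is a three-layer network consisting of an input layer, a hidden layer, and an output layer. In the autoencoder described earlier, every neuron uses the same activation function. A linear decoder modifies that definition by using different activation functions for the output layer and the hidden layer. The resulting model is easier to apply and more robust to changes in the model parameters.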

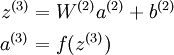

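Forward propagation into the output layer of the network is given by:

$$z^{(3)} = W^{(2)} a^{(2)} + b^{(2)}, \qquad a^{(3)} = f(z^{(3)})$$

where $a^{(3)}$ is the output. In an autoencoder, $a^{(3)}$ approximately reconstructs the input $x = a^{(1)}$.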

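An autoencoder whose last layer uses a sigmoid (or tanh) activation squashes its output into a fixed range: (0, 1) for the sigmoid and (-1, 1) for tanh. So when $f$ is the sigmoid, the inputs themselves have to be scaled to [0, 1] before the network can reconstruct them, which is awkward for data with no natural bound.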

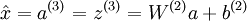

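Setting $a^{(3)} = z^{(3)}$ solves this problem simply. That is, the output layer uses the identity function $f(z) = z$ as its activation, so that $a^{(3)} = f(z^{(3)}) = z^{(3)}$. This special activation function is called the linear (identity) activation function.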
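As a minimal sketch of this forward pass, the snippet below runs one real-valued input through a sigmoid hidden layer and an identity output layer. The weights, biases, and input are made-up toy values chosen only for illustration; they are not taken from the exercise.

```matlab
% Toy forward pass with a linear output layer (all numbers are hypothetical).
sigmoid = @(z) 1 ./ (1 + exp(-z));

x  = [2.5; -1.0];                          % real-valued input, not in [0,1]
W1 = [0.5 -0.3; 0.2 0.8; -0.6 0.1];        % hidden weights (3 x 2)
b1 = [0.0; 0.1; -0.1];                     % hidden biases
W2 = [1.5 -2.0 0.5; -1.0 0.7 2.0];         % output weights (2 x 3)
b2 = [0.1; -0.2];                          % output biases

z2 = W1 * x + b1;
a2 = sigmoid(z2);                          % hidden layer keeps the sigmoid
z3 = W2 * a2 + b2;
a3 = z3;                                   % output layer: f(z) = z

disp([x a3]);                              % input next to its reconstruction
```

With untrained weights the reconstruction is of course poor; the point is only that nothing in the output layer restricts `a3` to a fixed range.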

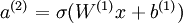

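In a linear decoder, the hidden-layer neurons still use a sigmoid (or tanh) activation function. The hidden unit activations are given by $a^{(2)} = \sigma(W^{(1)} x + b^{(1)})$, where $\sigma(\cdot)$ is the sigmoid function, $x$ is the input, and $W^{(1)}$ and $b^{(1)}$ are the weights and bias terms of the hidden units. Only the output layer uses the linear activation function. An autoencoder built from a sigmoid or tanh hidden layer and a linear output layer is called a linear decoder.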

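In a linear decoder, $\hat{x} = a^{(3)} = z^{(3)} = W^{(2)} a^{(2)} + b^{(2)}$. Because the output $\hat{x}$ is a linear function of the hidden unit activations, changing $W^{(2)}$ can make each output value $a^{(3)}$ greater than 1 or less than 0. This avoids the sigmoid squashing the output-layer values into [0, 1].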
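A small numeric illustration of this point, using hypothetical hidden activations and output weights: the same pre-activation leaves the unit interval under a linear output but stays inside it under a sigmoid output.

```matlab
% Hypothetical values: two hidden activations in (0,1) and a diagonal W2.
sigmoid = @(z) 1 ./ (1 + exp(-z));

a2 = [0.5; 0.8];            % sigmoid hidden activations always lie in (0,1)
W2 = [4 0; 0 -2];           % output weights, free to take any real value
b2 = [0; 0];

z3         = W2 * a2 + b2;  % equals [2; -1.6]
a3_linear  = z3;            % linear decoder: can exceed 1 or drop below 0
a3_sigmoid = sigmoid(z3);   % sigmoid output: always confined to (0,1)

disp([a3_linear a3_sigmoid]);
```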

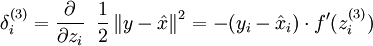

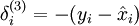

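Changing the activation function of the output units changes their gradients as well. Previously, the error term of each output unit was defined as:

$$\delta_i^{(3)} = \frac{\partial}{\partial z_i^{(3)}} \, \frac{1}{2} \left\| y - \hat{x} \right\|^2 = -(y_i - \hat{x}_i) \cdot f'(z_i^{(3)})$$

where $y = x$ is the desired output, $\hat{x}$ is the output of the autoencoder, and $f(\cdot)$ is the activation function. Because the output-layer activation is $f(z) = z$, we have $f'(z) = 1$, so the formula above simplifies to $\delta_i^{(3)} = -(y_i - \hat{x}_i)$.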

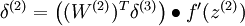
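The two forms of the output error term can be compared side by side. The sketch below uses made-up targets and pre-activations (hypothetical values, not data from the exercise) and computes the error term once for a sigmoid output layer and once for a linear one.

```matlab
% Output-layer error term under two activations (toy, hypothetical numbers).
sigmoid = @(z) 1 ./ (1 + exp(-z));

y  = [0.2; 0.9; 0.5];       % desired output, y = x
z3 = [0.5; -1.0; 2.0];      % output-layer pre-activations

% Sigmoid output: delta3 = -(y - a3) .* f'(z3), with f'(z) = f(z) .* (1 - f(z))
a3_sig     = sigmoid(z3);
delta3_sig = -(y - a3_sig) .* a3_sig .* (1 - a3_sig);

% Linear output: f(z) = z, so f'(z) = 1 and the derivative factor disappears
a3_lin     = z3;
delta3_lin = -(y - a3_lin);

disp([delta3_sig delta3_lin]);
```

Since the sigmoid derivative never exceeds 1/4, the sigmoid error term is always a damped version of the raw residual, while the linear one passes the residual through unchanged.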

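Of course, when backpropagation is used to compute the error term of the hidden layer:

$$\delta^{(2)} = \left( (W^{(2)})^T \delta^{(3)} \right) \odot f'(z^{(2)})$$

the hidden layer still uses a sigmoid (or tanh) activation function $f$, so $f'(z^{(2)})$ in this formula is still the derivative of the sigmoid (or tanh) function. In other words, the only residual in a linear decoder that differs from the ordinary autoencoder is the one at the output layer.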
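For the sparse autoencoder used in the exercise code, the hidden-layer error term also carries the derivative of the sparsity penalty. As a sketch, assuming the usual KL-divergence penalty with weight $\beta$, target activation $\rho$, and average hidden activation $\hat{\rho}_j$ (the quantities the code calls `beta`, `sparsityParam`, and `rho_hat`), and a sigmoid hidden layer:

$$\delta^{(3)} = -(x - a^{(3)})$$

$$\delta_j^{(2)} = \left( \sum_i W_{ij}^{(2)} \delta_i^{(3)} + \beta \left( -\frac{\rho}{\hat{\rho}_j} + \frac{1 - \rho}{1 - \hat{\rho}_j} \right) \right) a_j^{(2)} \left( 1 - a_j^{(2)} \right)$$

The sparsity term is added to the backpropagated error before multiplying by the sigmoid derivative $a_j^{(2)}(1 - a_j^{(2)})$; it is not a multiplicative factor.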

Linear decoder code:

Exercise driver script:

    %% CS294A/CS294W Linear Decoder Exercise

    %  Instructions
    %  ------------
    %
    %  This file contains code that helps you get started on the
    %  linear decoder exercise. For this exercise, you will only need to modify
    %  the code in sparseAutoencoderLinearCost.m. You will not need to modify
    %  any code in this file.

    %%======================================================================
    %% STEP 0: Initialization
    %  Here we initialize some parameters used for the exercise.

    imageChannels = 3;     % number of channels (rgb, so 3)

    patchDim   = 8;        % patch dimension (8 x 8 patches)
    numPatches = 100000;   % number of patches

    % Each 8 x 8 x 3 patch is flattened into one visible-layer input vector
    visibleSize = patchDim * patchDim * imageChannels;  % number of input units
    outputSize  = visibleSize;   % number of output units
    hiddenSize  = 400;           % number of hidden units

    sparsityParam = 0.035; % desired average activation of the hidden units
    lambda = 3e-3;         % weight decay parameter
    beta = 5;              % weight of sparsity penalty term

    epsilon = 0.1;         % epsilon for ZCA whitening

    %%======================================================================
    %% STEP 1: Create and modify sparseAutoencoderLinearCost.m to use a linear decoder,
    %          and check gradients
    %  You should copy sparseAutoencoderCost.m from your earlier exercise
    %  and rename it to sparseAutoencoderLinearCost.m.
    %  Then you need to rename the function from sparseAutoencoderCost to
    %  sparseAutoencoderLinearCost, and modify it so that the sparse autoencoder
    %  uses a linear decoder instead. Once that is done, you should check
    %  your gradients to verify that they are correct.

    % NOTE: Modify sparseAutoencoderCost first!

    % To speed up gradient checking, we will use a reduced network and some
    % dummy patches

    debugHiddenSize = 5;
    debugvisibleSize = 8;
    patches = rand([8 10]);
    theta = initializeParameters(debugHiddenSize, debugvisibleSize);

    [cost, grad] = sparseAutoencoderLinearCost(theta, debugvisibleSize, debugHiddenSize, ...
                                               lambda, sparsityParam, beta, ...
                                               patches);

    % Check gradients
    numGrad = computeNumericalGradient( @(x) sparseAutoencoderLinearCost(x, debugvisibleSize, debugHiddenSize, ...
                                                      lambda, sparsityParam, beta, ...
                                                      patches), theta);

    % Use this to visually compare the gradients side by side
    disp([numGrad grad]);

    diff = norm(numGrad-grad)/norm(numGrad+grad);
    % Should be small. In our implementation, these values are usually less than 1e-9.
    disp(diff);

    assert(diff < 1e-9, 'Difference too large. Check your gradient computation again');

    % NOTE: Once your gradients check out, you should run step 0 again to
    %       reinitialize the parameters

    %%======================================================================
    %% STEP 2: Learn features on small patches
    %  In this step, you will use your sparse autoencoder (which now uses a
    %  linear decoder) to learn features on small patches sampled from related
    %  images.

    %% STEP 2a: Load patches
    %  In this step, we load 100k patches sampled from the STL10 dataset and
    %  visualize them. Note that these patches have been scaled to [0,1]

    load stlSampledPatches.mat

    displayColorNetwork(patches(:, 1:100));

    %% STEP 2b: Apply preprocessing
    %  In this sub-step, we preprocess the sampled patches, in particular,
    %  ZCA whitening them.
    %
    %  In a later exercise on convolution and pooling, you will need to replicate
    %  exactly the preprocessing steps you apply to these patches before
    %  using the autoencoder to learn features on them. Hence, we will save the
    %  ZCA whitening and mean image matrices together with the learned features
    %  later on.

    % Subtract mean patch (hence zeroing the mean of the patches)
    meanPatch = mean(patches, 2);
    patches = bsxfun(@minus, patches, meanPatch);

    % Apply ZCA whitening
    sigma = patches * patches' / numPatches;
    [u, s, v] = svd(sigma);
    % Matrix that applies the ZCA whitening transform to the data
    ZCAWhite = u * diag(1 ./ sqrt(diag(s) + epsilon)) * u';
    % Apply the ZCA transform
    patches = ZCAWhite * patches;

    displayColorNetwork(patches(:, 1:100));

    %% STEP 2c: Learn features
    %  You will now use your sparse autoencoder (with linear decoder) to learn
    %  features on the preprocessed patches. This should take around 45 minutes.

    theta = initializeParameters(hiddenSize, visibleSize);

    % Use minFunc to minimize the function
    addpath minFunc/

    options = struct;
    options.Method = 'lbfgs';
    options.maxIter = 400;
    options.display = 'on';

    [optTheta, cost] = minFunc( @(p) sparseAutoencoderLinearCost(p, ...
                                       visibleSize, hiddenSize, ...
                                       lambda, sparsityParam, ...
                                       beta, patches), ...
                                  theta, options);

    % Save the learned features and the preprocessing matrices for use in
    % the later exercise on convolution and pooling
    fprintf('Saving learned features and preprocessing matrices...\n');
    save('STL10Features.mat', 'optTheta', 'ZCAWhite', 'meanPatch');
    fprintf('Saved\n');

    %% STEP 2d: Visualize learned features
    % Why display (W*ZCAWhite)'? For a raw sample x, the whitened input is
    % ZCAWhite*x, so the hidden layer effectively applies W*ZCAWhite to x.
    % Each row of W*ZCAWhite is the filter of one hidden unit, whereas
    % displayColorNetwork shows one patch per column, hence the transpose.

    W = reshape(optTheta(1:visibleSize * hiddenSize), hiddenSize, visibleSize);
    b = optTheta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize);
    displayColorNetwork( (W*ZCAWhite)');

Cost function, sparseAutoencoderLinearCost.m:

    function [cost, grad] = sparseAutoencoderLinearCost(theta, visibleSize, hiddenSize, ...
                                                        lambda, sparsityParam, beta, data)
    % -------------------- YOUR CODE HERE --------------------
    % Instructions:
    %   Copy sparseAutoencoderCost in sparseAutoencoderCost.m from your
    %   earlier exercise onto this file, renaming the function to
    %   sparseAutoencoderLinearCost, and changing the autoencoder to use a
    %   linear decoder.
    % -------------------- YOUR CODE HERE --------------------

    % Unpack the parameter vector into weight matrices and bias vectors
    W1 = reshape(theta(1:hiddenSize*visibleSize), hiddenSize, visibleSize);
    W2 = reshape(theta(hiddenSize*visibleSize+1:2*hiddenSize*visibleSize), visibleSize, hiddenSize);
    b1 = theta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize);
    b2 = theta(2*hiddenSize*visibleSize+hiddenSize+1:end);

    % Number of examples
    m = size(data, 2);

    %%%%%%%%%%% forward %%%%%%%%%%%
    z2 = W1*data + repmat(b1, [1,m]);
    a2 = sigmoid(z2);                  % hidden layer: sigmoid activation
    z3 = W2*a2 + repmat(b2, [1,m]);
    a3 = z3;                           % output layer: linear (identity) activation

    % Average activation of each hidden unit over the data
    rho_hat = mean(a2, 2);
    rho = sparsityParam;
    % Sum the KL divergence over all hidden units
    KL_Divergence = sum(rho * log(rho ./ rho_hat) + (1 - rho) * log((1 - rho) ./ (1 - rho_hat)));

    squares = (a3 - data).^2;
    J_square_err = (1/2)*(1/m)*sum(squares(:));
    J_weight_decay = (lambda/2)*(sum(W1(:).^2) + sum(W2(:).^2));
    J_sparsity = beta * KL_Divergence;

    cost = J_square_err + J_weight_decay + J_sparsity;

    %%%%%%%%%%% backward %%%%%%%%%%%
    delta3 = -(data - a3);             % linear decoder: f'(z3) = 1
    beta_term = beta * (- rho ./ rho_hat + (1-rho) ./ (1-rho_hat));
    % The sparsity term is added to the backpropagated error, then the sum is
    % multiplied by the sigmoid derivative a2 .* (1 - a2)
    delta2 = (W2' * delta3 + repmat(beta_term, [1,m])) .* a2 .* (1-a2);

    W2grad = (1/m) * delta3 * a2' + lambda * W2;
    b2grad = (1/m) * sum(delta3, 2);
    W1grad = (1/m) * delta2 * data' + lambda * W1;
    b1grad = (1/m) * sum(delta2, 2);

    %-------------------------------------------------------------------
    % Convert weights and bias gradients to a compressed form
    % This step will concatenate and flatten all your gradients to a vector
    % which can be used in the optimization method.
    grad = [W1grad(:) ; W2grad(:) ; b1grad(:) ; b2grad(:)];

    end

    %-------------------------------------------------------------------
    % We are giving you the sigmoid function, you may find this function
    % useful in your computation of the loss and the gradients.
    function sigm = sigmoid(x)
        sigm = 1 ./ (1 + exp(-x));
    end
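The script calls `computeNumericalGradient`, which is not shown here. The following is a minimal sketch of the kind of central-difference check such a helper might perform; it is an assumption about the approach, not the exercise's actual implementation. The toy cost `J`, the point `theta`, and the step size `h` are all hypothetical.

```matlab
% Central-difference gradient check on a toy cost (all names hypothetical).
J     = @(t) sum(t .^ 2) + 3 * t(1) * t(2);   % simple quadratic cost
theta = [4; 10];                              % point at which to check
h     = 1e-4;                                 % finite-difference step

numGrad = zeros(size(theta));
for i = 1:numel(theta)
    e = zeros(size(theta));
    e(i) = h;                                 % perturb one coordinate at a time
    numGrad(i) = (J(theta + e) - J(theta - e)) / (2 * h);
end

% Analytic gradient of the toy cost, derived by hand
grad = [2 * theta(1) + 3 * theta(2); 2 * theta(2) + 3 * theta(1)];

% Same relative-difference measure the exercise script uses
relDiff = norm(numGrad - grad) / norm(numGrad + grad);
disp(relDiff);
```

For a real cost function, the loop runs once per parameter and evaluates the cost twice each time, which is why the exercise checks gradients on a reduced network rather than the full 192-by-400 model.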